900-2G503-0310-000 NVIDIA Tesla 32GB V100 SXM2 Computational Accelerator.

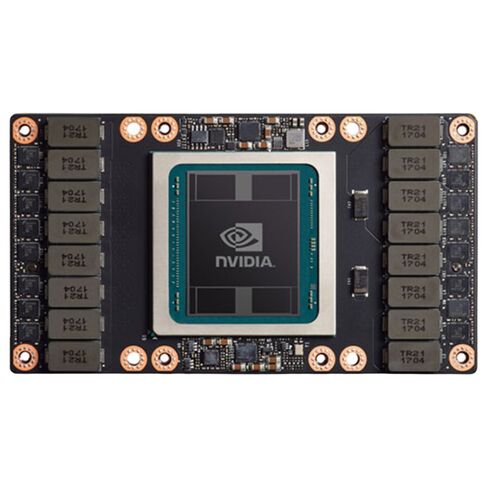

Overview of NVIDIA Tesla V100 Computational Accelerator

Performance Specifications

- Manufacturer: NVIDIA

- Part Number: 900-2G503-0310-000

- Graphics Processor: Tesla V100

- CUDA Support: Yes

- CUDA Cores: 5120

- NVLink Support: Yes

- Double Precision Performance: 7800 GFLOPS

- Single Precision Performance: 15700 GFLOPS
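
The peak figures above can be roughly reproduced from the core count. The sketch below is a back-of-the-envelope check, not an official derivation. It assumes a boost clock of 1530 MHz, one fused multiply-add (counted as 2 floating-point operations) per core per cycle, and a double-precision rate of half the single-precision rate. None of these three values appears in this listing, so treat them as assumptions.

```python
# Back-of-the-envelope peak throughput check.
# Assumptions (not stated in the listing): 1530 MHz boost clock,
# one FMA (2 FLOPs) per core per cycle, FP64 at half the FP32 rate.

CUDA_CORES = 5120            # from the specification list
ASSUMED_BOOST_MHZ = 1530.0   # assumption
FLOPS_PER_CYCLE = 2          # one fused multiply-add = 2 FLOPs (assumption)


def peak_gflops(cores: int, clock_mhz: float, flops_per_cycle: int = FLOPS_PER_CYCLE) -> float:
    """Theoretical peak in GFLOPS: cores x FLOPs per cycle x clock."""
    # cores * flops * MHz gives MFLOPS; divide by 1000 for GFLOPS.
    return cores * flops_per_cycle * clock_mhz / 1000.0


fp32_peak = peak_gflops(CUDA_CORES, ASSUMED_BOOST_MHZ)
fp64_peak = peak_gflops(CUDA_CORES // 2, ASSUMED_BOOST_MHZ)  # half-rate FP64 (assumption)

print(f"Estimated FP32 peak: {fp32_peak:.0f} GFLOPS (listed: 15700)")
print(f"Estimated FP64 peak: {fp64_peak:.0f} GFLOPS (listed: 7800)")
```

Both estimates land within about half a percent of the listed values, which suggests the listed numbers are theoretical peaks at boost clock rather than sustained application performance.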

Memory and Bandwidth

- Memory Size: 32 GB

- Memory Type: HBM2 (High Bandwidth Memory 2)

- Maximum Memory Bandwidth: 900 GB/s
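
Peak compute and memory bandwidth together indicate which workloads the card can keep fed. The sketch below applies the simple roofline model to the listed figures (15700 GFLOPS single precision, 900 GB/s). The function names and the SAXPY example are illustrative choices, and real kernels usually fall below these bounds.

```python
# Roofline-style estimate from the listed specifications.
# The model itself is a simplification; function names are illustrative.

PEAK_FP32_GFLOPS = 15700.0   # from the specification list
MEM_BANDWIDTH_GBS = 900.0    # from the specification list


def ridge_point(peak_gflops: float = PEAK_FP32_GFLOPS,
                bandwidth_gbs: float = MEM_BANDWIDTH_GBS) -> float:
    """Arithmetic intensity (FLOPs per byte) above which a kernel is compute-bound."""
    return peak_gflops / bandwidth_gbs


def attainable_gflops(intensity: float,
                      peak_gflops: float = PEAK_FP32_GFLOPS,
                      bandwidth_gbs: float = MEM_BANDWIDTH_GBS) -> float:
    """Upper bound on throughput for a kernel with the given FLOPs-per-byte ratio."""
    return min(peak_gflops, intensity * bandwidth_gbs)


# Example: SAXPY (y = a*x + y) in FP32 does 2 FLOPs per element and moves
# 12 bytes per element (read x, read y, write y), so intensity is 2/12.
saxpy_intensity = 2 / 12

print(f"Ridge point: {ridge_point():.1f} FLOPs/byte")
print(f"SAXPY bound: {attainable_gflops(saxpy_intensity):.0f} GFLOPS")
```

Under this model a streaming kernel such as SAXPY is limited by memory bandwidth, while dense matrix multiplication, which reuses each loaded value many times, can approach the compute peak.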

Design and Power Requirements

Cooling and Thermal Management

- Cooling System: Passive Cooling

Power Consumption

- Maximum Power Consumption: 300 W

900-2G503-0310-000 NVIDIA Tesla 32GB V100 SXM2

The 900-2G503-0310-000 NVIDIA Tesla 32GB V100 SXM2 Computational Accelerator is a data center GPU built to accelerate compute-intensive workloads such as scientific research, deep learning, and AI training and inference. It is based on NVIDIA’s Volta architecture. With 32 GB of HBM2 high-bandwidth memory, NVLink support, and the SXM2 module form factor, it is well suited to scalable multi-GPU AI systems, data centers, and high-performance computing (HPC) environments.

How the Tesla V100 SXM2 Improves AI and Deep Learning Workloads

For AI and deep learning, the main advantage of the 900-2G503-0310-000 NVIDIA Tesla 32GB V100 SXM2 is the combination of the Volta architecture and its Tensor Cores, which are designed specifically to accelerate deep learning training. Together with 5120 CUDA cores for general parallel processing, they let researchers and engineers train large neural networks in less time, shortening development cycles for AI models.

Tensor Cores for Deep Learning Acceleration

One of the standout features of the Tesla V100 SXM2 is its Tensor Cores, which accelerate the matrix multiply-accumulate operations that are fundamental to deep learning algorithms. These cores are optimized for mixed-precision arithmetic: inputs are held in half precision (FP16), while results can be accumulated in single precision (FP32). This improves throughput and energy efficiency compared with doing all of the math in FP32, and it lowers memory use for the same model. The result is faster model training and more rapid iteration cycles in AI research and development.
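
To illustrate why mixed precision keeps a higher-precision accumulator, the sketch below emulates the number formats in plain Python using the standard `struct` module. It does not run on the GPU or use Tensor Cores. It only shows the numerical effect of rounding a running sum to FP16 versus FP32 when the inputs are FP16. The helper names (`to_fp16`, `to_fp32`, `dot`) are hypothetical and exist only for this example.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def dot(a, b, round_acc):
    """Dot product with FP16-rounded inputs; round_acc rounds the running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)  # inputs are rounded to FP16 first
        acc = round_acc(acc + product)     # accumulator precision is the variable here
    return acc


n = 10_000
a = [0.01] * n
b = [1.0] * n

reference = dot(a, b, lambda s: s)  # Python float (FP64) accumulator as the baseline
fp16_acc = dot(a, b, to_fp16)       # FP16 inputs, FP16 accumulator
fp32_acc = dot(a, b, to_fp32)       # FP16 inputs, FP32 accumulator (mixed precision)

print(f"reference : {reference:.4f}")
print(f"FP16 accum: {fp16_acc:.4f}  (error {abs(fp16_acc - reference):.4f})")
print(f"FP32 accum: {fp32_acc:.4f}  (error {abs(fp32_acc - reference):.4f})")
```

In this toy case the FP16 accumulator stops growing once the gap between adjacent FP16 values exceeds twice the size of each product, so small contributions are rounded away. The FP32 accumulator tracks the reference closely, which is the motivation for accumulating in higher precision during training.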

Applications and Use Cases of the Tesla V100 SXM2

Artificial Intelligence and Machine Learning

The Tesla V100 SXM2 is designed to meet the demanding requirements of AI and machine learning workloads, from training large neural networks and tuning models to running advanced research experiments. Widely used machine learning frameworks, including TensorFlow, PyTorch, and Caffe, can run on it through CUDA, making it versatile across a range of AI applications.

AI Training

AI training on the Tesla V100 SXM2 reduces time-to-insight for researchers and developers. Its 32 GB of memory and 900 GB/s of memory bandwidth help it handle large datasets and complex models, so large-scale deep learning networks train faster and more efficiently. The Tesla V100 SXM2 is well suited to natural language processing (NLP), computer vision, and reinforcement learning applications.
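
Whether a given model fits in 32 GB can be estimated before buying hardware. The sketch below uses a common rule of thumb for mixed-precision training with the Adam optimizer: about 16 bytes of persistent state per parameter (FP16 weights and gradients, plus an FP32 master copy and two FP32 optimizer moments). This rule, the decimal interpretation of "32 GB", and the function names are assumptions for illustration. Activations, framework overhead, and memory fragmentation are not counted, so real limits are lower.

```python
# Rough training-memory estimate for mixed-precision Adam.
# All per-parameter byte counts are rule-of-thumb assumptions.

BYTES_FP16_WEIGHTS = 2
BYTES_FP16_GRADS = 2
BYTES_FP32_MASTER = 4
BYTES_ADAM_MOMENTS = 4 + 4   # first and second moment, FP32 each
BYTES_PER_PARAM = (BYTES_FP16_WEIGHTS + BYTES_FP16_GRADS
                   + BYTES_FP32_MASTER + BYTES_ADAM_MOMENTS)  # 16 bytes

GPU_MEMORY_GB = 32.0         # from the specification list (treated as 32e9 bytes)


def training_state_gb(n_params: int) -> float:
    """Persistent training state in GB (weights, gradients, optimizer state)."""
    return n_params * BYTES_PER_PARAM / 1e9


def max_params(memory_gb: float = GPU_MEMORY_GB) -> int:
    """Largest parameter count whose training state alone fits in memory_gb."""
    return int(memory_gb * 1e9 // BYTES_PER_PARAM)


for n in (350_000_000, 1_000_000_000, 3_000_000_000):
    need = training_state_gb(n)
    verdict = "fits" if need <= GPU_MEMORY_GB else "does not fit"
    print(f"{n / 1e9:.2f}B parameters: {need:.1f} GB of state, {verdict} in {GPU_MEMORY_GB:.0f} GB")
```

Models whose state exceeds a single card's memory are typically split across several GPUs, which is where the NVLink connectivity described below becomes relevant.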

AI Inference

In addition to training, the Tesla V100 SXM2 is also effective for AI inference, supporting real-time decision-making in applications such as autonomous vehicles, facial recognition, and intelligent video analytics. Its high throughput and low latency help AI models meet their performance targets in production environments.

High-Performance Computing (HPC)

The V100 SXM2 is also well suited to high-performance computing tasks. Researchers and scientists in fields like physics, bioinformatics, and genomics use the Tesla V100 SXM2 to accelerate computational workloads and simulations. With 7800 GFLOPS of double-precision performance, it provides the compute power required for tasks such as molecular dynamics, weather prediction, and fluid dynamics simulations.

Data Center Infrastructure

In modern data center infrastructure, the 900-2G503-0310-000 Tesla V100 SXM2 serves as a building block for multi-GPU servers. Its design allows for effective scaling in large compute clusters, where multiple Tesla V100 SXM2 GPUs are connected using NVLink so that they can access each other's memory directly and synchronize processing tasks. This scalability enables data centers to handle the computational needs of AI and HPC workloads at scale.

Data Center Efficiency and Scalability

NVLink allows for high-bandwidth, low-latency connections between GPUs, optimizing data flow and increasing computational efficiency. This makes the Tesla V100 SXM2 ideal for AI-powered data centers that need to process large volumes of data quickly, such as for training large-scale machine learning models or running complex simulations.
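
The effect of interconnect bandwidth on multi-GPU training can be estimated with the standard bandwidth-only cost model for a ring all-reduce, in which each GPU sends and receives about 2(N-1)/N times the buffer size. The sketch below is a simplified model that ignores latency, topology, and overlap with computation. The bandwidth values in the example are illustrative placeholders, not figures from this listing.

```python
# Bandwidth-only cost model for a ring all-reduce across N GPUs.
# Bandwidth numbers used in the example are illustrative placeholders.


def ring_allreduce_seconds(size_bytes: float, n_gpus: int, bandwidth_gbs: float) -> float:
    """Estimated time to all-reduce size_bytes across n_gpus.

    bandwidth_gbs is the usable per-GPU send bandwidth in GB/s (1 GB = 1e9 bytes).
    """
    if n_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic_factor = 2 * (n_gpus - 1) / n_gpus  # reduce-scatter plus all-gather
    return traffic_factor * size_bytes / (bandwidth_gbs * 1e9)


gradient_bytes = 1e9  # hypothetical 1 GB gradient buffer

for label, bw in (("slower link, 10 GB/s (placeholder)", 10.0),
                  ("faster link, 25 GB/s (placeholder)", 25.0)):
    t = ring_allreduce_seconds(gradient_bytes, n_gpus=8, bandwidth_gbs=bw)
    print(f"{label}: {t * 1000:.0f} ms per all-reduce")
```

Because this cost is paid on every training step, a higher-bandwidth link between GPUs directly reduces the time spent synchronizing gradients.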

Visualization and Rendering

While the Tesla V100 SXM2 is primarily designed for AI and HPC tasks, it can also be used for GPU-accelerated rendering, such as scientific visualization and 3D rendering. As a server compute module it has no display outputs, so rendering runs on the server and results are saved or streamed to a client. The high compute power and memory bandwidth of the Tesla V100 SXM2 enable fast rendering of complex models and visualizations, assisting professionals in industries such as gaming, architecture, and engineering.

Conclusion: Why Choose the 900-2G503-0310-000 Tesla V100 SXM2?

In summary, the 900-2G503-0310-000 NVIDIA Tesla 32GB V100 SXM2 Computational Accelerator is a strong choice for professionals working with AI, machine learning, deep learning, and high-performance computing. Its Volta architecture, Tensor Cores, 32 GB of HBM2 memory, and NVLink support enable it to handle demanding computational workloads efficiently. Whether you are building AI models, conducting scientific research, or expanding data center infrastructure, the Tesla V100 SXM2 provides the compute power and multi-GPU scalability these projects require.