Dell MM3MP 94GB H100 NVL Tensor Core GPU

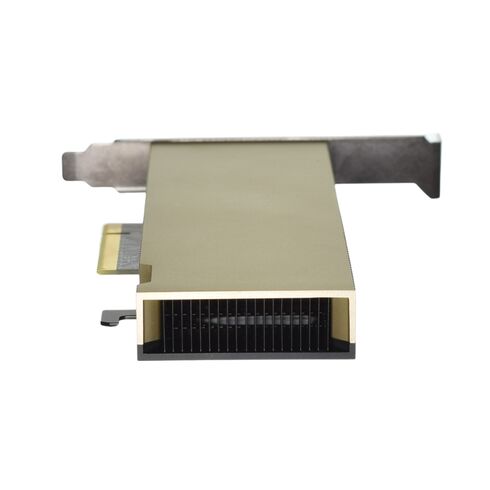

Dell MM3MP 94GB H100 NVL Tensor Core GPU PCIe 5.0 Graphics Accelerator

The Dell MM3MP H100 NVL Tensor Core GPU is a data-center accelerator built for artificial intelligence, deep learning, and data-intensive workloads. It pairs a PCIe 5.0 x16 interface with 94GB of HBM3 memory and a peak memory bandwidth of 3,938 GB/s. Designed with energy efficiency and scalability in mind, it suits AI developers, data centers, and enterprise-grade computing environments.

General Information

- Brand Name: Dell

- Manufacturer Part Number: MM3MP

- Product Type: GPU Card

Power and Performance Efficiency

Total Board Power Configuration

The Dell MM3MP GPU offers flexible power configurations for various system requirements, ensuring reliable performance with optimized energy consumption:

PCIe 16-Pin Cable Strapped for 450W or 600W Power Mode:

- Maximum Power: 400W (Default)

- Power Compliance Limit: 310W

- Minimum Power: 200W

PCIe 16-Pin Cable Strapped for 300W Power Mode:

- Maximum Power: 310W (Default)

- Power Compliance Limit: 310W

- Minimum Power: 200W
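The two cable modes above can be captured in a small lookup table. The sketch below is only an illustration of how the listed limits relate to each other: the dictionary keys and the `clamp_power_limit` helper are hypothetical names, not part of any Dell or NVIDIA tool.

```python
# Power limits per 16-pin cable strapping, copied from the listing above.
# The key names are illustrative assumptions.
POWER_MODES = {
    "450W_or_600W": {"max_default_w": 400, "compliance_limit_w": 310, "min_w": 200},
    "300W": {"max_default_w": 310, "compliance_limit_w": 310, "min_w": 200},
}


def clamp_power_limit(cable_mode: str, requested_w: int) -> int:
    """Clamp a requested board power to the listed min/max for a cable mode."""
    limits = POWER_MODES[cable_mode]
    return max(limits["min_w"], min(requested_w, limits["max_default_w"]))


for mode in POWER_MODES:
    print(mode, clamp_power_limit(mode, 400))
```

With a 300W-strapped cable, a 400W request is capped at 310W, while the 450W/600W strapping allows the full 400W default.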

Featuring a passive thermal solution and a full-height, full-length (FHFL, 10.5-inch) dual-slot mechanical form factor, the card balances high performance with efficient cooling.

Technical Specifications

PCI Device Identifiers

- Device ID: 0x2321

- Vendor ID: 0x10DE

- Sub-Vendor ID: 0x10DE

- Sub-System ID: 0x1839
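The identifiers above are what a host uses to recognize the card on the PCI bus. As a minimal sketch, assuming a Linux host that exposes PCI devices under `/sys/bus/pci/devices`, the following scans for a matching vendor/device pair. The function names are illustrative, and the scan returns an empty list on systems without that sysfs path.

```python
from pathlib import Path

# IDs taken from the listing above.
NVIDIA_VENDOR_ID = 0x10DE
H100_NVL_DEVICE_ID = 0x2321


def is_h100_nvl(vendor: int, device: int) -> bool:
    """Return True when the vendor/device pair matches the listed IDs."""
    return vendor == NVIDIA_VENDOR_ID and device == H100_NVL_DEVICE_ID


def find_cards(sysfs_root: str = "/sys/bus/pci/devices") -> list[str]:
    """List PCI addresses whose sysfs vendor/device files match (Linux only)."""
    matches: list[str] = []
    root = Path(sysfs_root)
    if not root.is_dir():
        return matches  # not Linux, or sysfs is not mounted
    for dev in sorted(root.iterdir()):
        try:
            # sysfs stores these as hex strings such as "0x10de"
            vendor = int((dev / "vendor").read_text().strip(), 16)
            device = int((dev / "device").read_text().strip(), 16)
        except (OSError, ValueError):
            continue  # skip entries that cannot be read or parsed
        if is_h100_nvl(vendor, device):
            matches.append(dev.name)
    return matches


print(find_cards())
```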

GPU Clock Speeds

- Base Clock: 1,080 MHz

- Boost Clock: 1,785 MHz

- Performance State: P0

Firmware and BIOS Details

- EEPROM Size: 8 Mbit

- UEFI Support: Not Supported

PCI Express Interface Support

- PCI Express Gen5 x16, Gen5 x8, Gen4 x16

- Lane and Polarity Reversal Supported

Enhanced Multi-Instance GPU (MIG) Functionality

Supports up to seven independent GPU instances for parallel task execution and optimized virtualization environments. It also supports Secure Boot (CEC) for enhanced system protection.

Power Connector and Header

- One PCIe 16-pin Auxiliary Power Connector (12V-2x6)

Physical and Weight Specifications

- Board Weight: 1,214 grams (excluding bracket, extenders, and bridges)

- NVLink Bridge: 20.5 grams per bridge (×3 bridges)

- Bracket with Screws: 20 grams

- Enhanced Straight Extender: 35 grams

- Long Offset Extender: 48 grams

- Straight Extender: 32 grams

Memory Architecture and Performance

HBM3 Memory Specifications

- Memory Clock Speed: 2,619 MHz

- Memory Type: HBM3

- Total Memory Size: 94 GB

- Memory Bus Width: 6,016 bits

- Peak Memory Bandwidth: 3,938 GB/s

This high-capacity HBM3 memory subsystem ensures outstanding throughput for large-scale AI model training, deep neural networks, and data simulation tasks.
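The listed peak bandwidth can be cross-checked from the memory clock and bus width. The sketch below assumes double-data-rate signaling (two transfers per clock) and decimal gigabytes (10^9 bytes); both are assumptions made for this check rather than statements from the listing.

```python
def hbm_peak_bandwidth_gbs(memory_clock_mhz: float,
                           bus_width_bits: int,
                           transfers_per_clock: int = 2) -> float:
    """Peak bandwidth in GB/s = clock * transfers per clock * bus width in bytes."""
    bytes_per_transfer = bus_width_bits / 8
    bytes_per_second = memory_clock_mhz * 1e6 * transfers_per_clock * bytes_per_transfer
    return bytes_per_second / 1e9


# Values from the HBM3 specification list above.
bandwidth = hbm_peak_bandwidth_gbs(memory_clock_mhz=2619, bus_width_bits=6016)
print(f"{bandwidth:.3f} GB/s")
```

Under these assumptions the result is about 3,938.98 GB/s, which agrees with the listed 3,938 GB/s once truncated to a whole number.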

Software and Driver Support

- SR-IOV Support: Supported – 32 Virtual Functions

- Linux Drivers: R535 or later

- Windows Drivers: R535 or later

- Secure Boot: Supported

- CEC Firmware Version: 00.02.0134.0000 or later

- NVFlash Version: 5.816.0 or later

- NVIDIA CUDA Support: CUDA 12.2 or later (x86)

- Virtual GPU Software Support: NVIDIA vGPU 16.1 or later (vCompute Server Edition)

- NVIDIA AI Enterprise: Supported with VMware

- NVIDIA Certification: NVIDIA-Certified Systems 2.8 or later

BAR Address Mapping

Physical Function BAR Addresses

- BAR0: 16 MiB

- BAR2: 128 GiB

- BAR4: 32 MiB

Virtual Function BAR Addresses

- BAR0: 8 MiB (256 KiB per VF)

- BAR1: 128 GiB, 64-bit (4 GiB per VF)

- BAR3: 1 GiB, 64-bit (32 MiB per VF)
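The virtual function BAR totals follow from the per-VF sizes multiplied by the 32 virtual functions listed under SR-IOV support. A short check, with the dictionary layout chosen purely for illustration:

```python
KIB, MIB, GIB = 2**10, 2**20, 2**30
NUM_VFS = 32  # from "SR-IOV Support: Supported – 32 Virtual Functions"

# (size per VF, listed total) for each virtual function BAR, in bytes.
VF_BARS = {
    "BAR0": (256 * KIB, 8 * MIB),
    "BAR1": (4 * GIB, 128 * GIB),
    "BAR3": (32 * MIB, 1 * GIB),
}


def total_vf_bar_size(per_vf_bytes: int, num_vfs: int = NUM_VFS) -> int:
    """Total aperture needed when every VF gets its own slice of the BAR."""
    return per_vf_bytes * num_vfs


for name, (per_vf, listed_total) in VF_BARS.items():
    computed = total_vf_bar_size(per_vf)
    print(f"{name}: computed {computed} bytes, matches listing: {computed == listed_total}")
```

All three computed totals match the listed figures, so the host needs roughly 129 GiB of 64-bit address space for the VF BARs in addition to the physical function BARs.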

Interrupt and Forwarding Support

- MSI-X: Supported

- MSI: Not Supported

- ARI Forwarding: Supported

PCIe and Hardware Classification

- PCI Class Code: 0x03 – Display Controller

- PCI Subclass Code: 0x02 – 3D Controller

- ECC Memory Support: Enabled
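On Linux, the class and subclass codes above typically appear combined in a single 24-bit value (for example in a device's sysfs `class` file). The sketch below splits such a value into its parts. The programming-interface byte of `0x00` in the example is an assumption, since the listing gives only the class and subclass.

```python
def decode_pci_class(class_value: int) -> tuple[int, int, int]:
    """Split a 24-bit PCI class value into (base class, subclass, prog-if)."""
    base_class = (class_value >> 16) & 0xFF
    subclass = (class_value >> 8) & 0xFF
    prog_if = class_value & 0xFF
    return base_class, subclass, prog_if


# 0x03 (display controller) and 0x02 (3D controller) from the listing;
# the trailing 0x00 programming interface is assumed for illustration.
base_class, subclass, prog_if = decode_pci_class(0x030200)
print(f"class=0x{base_class:02X} subclass=0x{subclass:02X} prog_if=0x{prog_if:02X}")
```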

System Management and Connectivity

- SMBus (8-bit Address): 0x9E (write), 0x9F (read)

- IPMI FRU EEPROM I2C Address: 0x50 (7-bit), 0xA0 (8-bit)

- Reserved I2C Addresses: 0xAA, 0xAC, 0xA0

- SMBus Direct Access: Supported

- SMBPBI (SMBus Post-Box Interface): Supported
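The listing quotes addresses in both 7-bit and 8-bit form. In the 8-bit form, the 7-bit address is shifted left by one and the lowest bit is the read/write flag. The helper names below are illustrative:

```python
def to_7bit(addr_8bit: int) -> int:
    """Drop the read/write bit from an 8-bit bus address."""
    return addr_8bit >> 1


def to_8bit(addr_7bit: int, read: bool = False) -> int:
    """Build the 8-bit form: 7-bit address shifted left, bit 0 set for reads."""
    return (addr_7bit << 1) | int(read)


# SMBus addresses from the listing: 0x9E (write) and 0x9F (read).
print(hex(to_7bit(0x9E)), hex(to_7bit(0x9F)))

# FRU EEPROM: 0x50 (7-bit) corresponds to 0xA0 (8-bit write form).
print(hex(to_8bit(0x50)))
```

Both SMBus entries resolve to the same 7-bit address, 0x4F, which is the form most Linux I2C tooling expects.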

Operating Conditions

- Ambient Operating Temperature: 0°C to 50°C

- Short-term Ambient Temperature: -5°C to 55°C

- Storage Temperature: -40°C to 75°C

- Operating Humidity: 5% to 85% (relative)

- Short-term Operating Humidity: 5% to 93% (relative)

- Storage Humidity: 5% to 95% (relative)

Reliability Data

- Mean Time Between Failures (MTBF): TBD

Optimized for AI and Machine Learning Workloads

The Dell MM3MP GPU is built on NVIDIA’s Hopper architecture. Its Tensor Cores and Multi-Instance GPU (MIG) capability allow several workloads to run at once for efficient training and inference. It works with deep learning frameworks such as TensorFlow, PyTorch, and MXNet, accelerating AI model development and deployment across enterprise and data-center environments.

Overview of Dell MM3MP 94GB H100 NVL Tensor Core GPU

The Dell MM3MP 94GB H100 NVL Tensor Core GPU stands as one of the most powerful and advanced graphics processing units designed to meet the evolving needs of artificial intelligence, machine learning, high-performance computing, and complex data center workloads. Built with cutting-edge architecture and supported by high-bandwidth memory, it delivers exceptional processing power and efficiency that transform data-intensive tasks into streamlined operations. Designed for enterprise-level applications, this GPU combines performance, scalability, and efficiency to drive the next generation of computing solutions.

Advanced Architecture

The Dell MM3MP H100 NVL Tensor Core GPU is based on NVIDIA’s Hopper architecture, which introduces a new generation of AI acceleration technology. This architecture significantly improves floating-point computation, enabling faster processing of complex models and deeper neural networks. Each Tensor Core is optimized to deliver exceptional AI training and inference performance, allowing researchers and enterprises to accelerate productivity in data science and machine learning pipelines.

Hopper architecture integrates advanced matrix computation capabilities and dynamic sparsity support, boosting computational throughput while reducing power consumption. The architecture’s refined core design offers greater parallelism and data-handling efficiency, ideal for large-scale simulations, data analytics, and inference workloads.

Performance Optimization and Computational Power

The GPU’s performance is enhanced by its ability to perform multiple operations per clock cycle across thousands of cores. This enables it to handle simultaneous tasks with high efficiency, which is critical for deep learning models and AI-driven applications. With support for both FP8 and FP16 precision, the Dell MM3MP GPU can deliver remarkable performance improvements for complex matrix multiplications and transformer-based neural networks.

Moreover, this GPU includes Hopper’s Tensor Memory Accelerator (TMA), which moves large blocks of data between global memory and on-chip shared memory asynchronously so that compute units spend less time waiting on memory. This reduces bottlenecks and improves performance consistency across workloads.

HBM3 Memory Technology and Bandwidth Efficiency

The 94GB of HBM3 memory included in the Dell MM3MP GPU is a key component of its performance. HBM3 (High Bandwidth Memory) offers faster data access and better energy efficiency than traditional GDDR memory. With a 6,016-bit memory interface and 3,938 GB/s of peak memory bandwidth, it can feed large datasets to the compute units quickly, reducing the time spent waiting on memory across diverse workloads.

This level of memory bandwidth allows data scientists and engineers to train models with larger batch sizes and higher precision, making it possible to execute deep neural networks more efficiently. HBM3 technology also contributes to reduced power draw and improved cooling efficiency, enhancing the overall system reliability during sustained high-load operations.

PCIe 5.0 x16 Interface and System Compatibility

The PCIe 5.0 x16 interface of the Dell MM3MP H100 NVL Tensor Core GPU ensures a highly efficient connection with the host system. PCIe 5.0 doubles the bandwidth of PCIe 4.0, enabling faster communication between the GPU and the CPU. This allows for rapid data transfers and minimized latency, which is crucial for AI workloads that depend on large-scale data streaming and parallel computations.
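The doubling from PCIe 4.0 to 5.0 can be made concrete with a quick calculation. This sketch uses the per-lane signaling rates of 16 GT/s (Gen4) and 32 GT/s (Gen5) with 128b/130b line encoding, and it ignores packet and protocol overhead, so real-world throughput will be lower.

```python
# Per-lane raw signaling rate in GT/s for each PCIe generation.
GEN_RATE_GTS = {3: 8.0, 4: 16.0, 5: 32.0}

# 128b/130b line encoding used by Gen3 through Gen5.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, before protocol overhead."""
    return GEN_RATE_GTS[gen] * ENCODING_EFFICIENCY * lanes / 8


# The three link configurations listed under PCI Express Interface Support.
for gen, lanes in [(5, 16), (5, 8), (4, 16)]:
    print(f"Gen{gen} x{lanes}: {pcie_bandwidth_gbs(gen, lanes):.1f} GB/s per direction")
```

Gen5 x16 works out to roughly 63 GB/s per direction, while Gen5 x8 and Gen4 x16 both land near 31.5 GB/s, which is why the card treats those two configurations as comparable fallbacks.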

The GPU is engineered for compatibility with modern Dell PowerEdge servers and other enterprise-grade systems. The advanced PCIe 5.0 interface enables flexible integration into diverse environments, making it ideal for high-performance computing clusters, data analytics platforms, and deep learning workstations. This future-ready design ensures that organizations can leverage the GPU’s capabilities even as infrastructure standards continue to evolve.

Energy Efficiency and Thermal Design

Despite its immense power, the Dell MM3MP GPU is built with energy efficiency in mind. The advanced thermal architecture ensures that the GPU can operate continuously under demanding workloads without overheating or throttling. Intelligent power distribution systems dynamically allocate energy where it’s most needed, maintaining performance stability and reducing total power consumption.

The passive thermal solution has no onboard fan, so the card relies on the host server’s chassis airflow for cooling. This suits data center environments where both performance and reliability are non-negotiable, provided the chassis supplies enough airflow to stay within the listed operating temperature range.

AI and Deep Learning Applications

The Dell MM3MP H100 NVL Tensor Core GPU redefines what’s possible in AI training and inference. Its architecture is specifically tailored for large-scale machine learning models, such as generative AI, computer vision, natural language processing, and reinforcement learning. By offering massive compute performance, this GPU accelerates the time to insight and innovation for enterprises and research institutions.

Deep learning models benefit from the GPU’s massive parallel processing capabilities and optimized Tensor Cores. These cores are designed to handle multi-dimensional matrix computations required by AI algorithms, allowing for faster convergence and higher accuracy. The GPU also supports advanced frameworks such as TensorFlow, PyTorch, and MXNet, ensuring seamless integration into existing AI infrastructures.

Scientific Research and High-Performance Computing

Beyond AI, the Dell MM3MP H100 NVL GPU delivers tremendous value for scientific simulations, computational fluid dynamics, and molecular modeling. Researchers can run complex simulations faster and with greater precision, enabling breakthroughs in fields like climate modeling, genomics, and astrophysics. The GPU’s ability to process vast amounts of data with low latency makes it ideal for both academic research and commercial R&D applications.

With its combination of high memory capacity, fast interconnects, and optimized compute units, it significantly reduces the time required to complete data-intensive computations. The GPU’s efficient use of resources ensures that even the most complex workloads can be executed without sacrificing performance or accuracy.

Data Analytics and Cloud Acceleration

As organizations increasingly rely on data-driven decision-making, the Dell MM3MP 94GB GPU serves as a cornerstone for advanced analytics and real-time processing. Its capacity to handle massive data pipelines and execute large-scale computations in parallel allows businesses to extract actionable insights faster. In cloud environments, the GPU’s efficiency ensures minimal latency and maximum throughput, making it suitable for multi-tenant cloud infrastructures and GPU-as-a-Service platforms.

For financial modeling, cybersecurity analytics, and predictive maintenance applications, the GPU’s architecture delivers robust and consistent results, enabling data scientists and engineers to focus on algorithm development rather than computational constraints.

Scalability and Multi-GPU Configurations

The Dell MM3MP H100 NVL Tensor Core GPU is designed for scalability, allowing multiple GPUs to work together in large-scale computing infrastructures. In a multi-GPU server configuration, performance scales as cards are added, although the actual gain depends on the workload and on how much data the GPUs exchange. The GPU’s interconnect capabilities enable efficient communication between devices, reducing data transfer overhead.

This scalability makes it well suited to large AI training clusters, data centers, and hyperscale environments. Cards connect to each other over NVIDIA NVLink using NVLink bridges (the weight specification lists three), which enables efficient resource sharing and workload balancing between GPUs.

Security Features and Data Protection

In enterprise environments where data protection is paramount, the Dell MM3MP H100 NVL GPU incorporates advanced security features to ensure the integrity and confidentiality of workloads. It supports secure boot, encrypted firmware, and runtime protection mechanisms that prevent unauthorized access and code tampering. These security measures are designed to protect sensitive AI and analytics workloads from potential threats or vulnerabilities.

Additionally, the GPU supports hardware-based encryption for data stored in memory, ensuring that critical information remains protected throughout the processing pipeline. This level of protection is particularly valuable in sectors such as healthcare, finance, and defense, where data privacy is a fundamental requirement.

Software Ecosystem and Compatibility

The Dell MM3MP H100 NVL Tensor Core GPU is compatible with a wide range of software frameworks and development tools. It supports popular AI and HPC libraries, including CUDA, cuDNN, TensorRT, and NCCL, offering developers extensive flexibility for optimization and deployment. These software tools enable developers to take full advantage of the GPU’s Tensor Cores, memory bandwidth, and compute potential, reducing development time and improving application performance.

Moreover, the card is supported by R535 or later drivers on both Linux and Windows, and firmware updates maintain compatibility with modern operating systems. Whether deployed on Linux-based servers or in virtualized environments, this GPU is built for smooth integration and long-term reliability.

Developer Tools and Optimization Frameworks

For developers building AI applications or high-performance workloads, the Dell MM3MP GPU provides access to advanced optimization tools. NVIDIA Nsight Systems, Nsight Compute, and CUDA Profiler offer deep visibility into application performance, allowing developers to fine-tune kernels and improve efficiency. These tools are designed to identify performance bottlenecks, optimize memory usage, and streamline parallel processing to achieve the best possible results.

In addition, AI model training platforms benefit from mixed-precision capabilities that balance accuracy and speed, allowing faster model convergence while minimizing energy consumption. This makes the GPU a strong foundation for enterprise AI development and deployment pipelines.

Deployment Flexibility

Whether integrated into a data center rack, a workstation, or a cloud infrastructure, the Dell MM3MP H100 NVL Tensor Core GPU offers unmatched flexibility. Its modular design allows seamless deployment across various platforms without requiring major system modifications. This adaptability makes it suitable for organizations at any scale, from small research teams to global enterprise data centers.

The GPU’s PCIe 5.0 interface and scalable design make it a forward-looking investment, ensuring compatibility with next-generation hardware and software ecosystems. Its ability to integrate into diverse computing environments underscores its versatility and future-proof performance.

Key Advantages of Dell MM3MP H100 NVL Tensor Core GPU

The Dell MM3MP 94GB GPU excels across multiple dimensions, providing an unparalleled balance between compute power, memory performance, and energy efficiency. Its 94GB HBM3 memory, massive bandwidth, and Hopper-based Tensor Core architecture make it a powerhouse for AI, deep learning, and data analytics workloads. Organizations can accelerate innovation while maintaining efficient power utilization and thermal stability.

Its robust design, enterprise-grade reliability, and seamless compatibility with Dell’s server lineup position it as a preferred choice for high-performance computing infrastructures. The GPU’s integration of advanced interconnects, virtualization support, and security features ensures that it meets the highest standards of modern data center environments.

Future-Proof Investment for AI and HPC

As AI and high-performance computing continue to evolve, the Dell MM3MP H100 NVL Tensor Core GPU offers the scalability and performance necessary to stay ahead of the curve. Its cutting-edge technology and forward-compatible interface ensure it remains relevant for future workloads and software ecosystems. This makes it not only a high-performing GPU for today’s needs but also a strategic investment for tomorrow’s computing challenges.