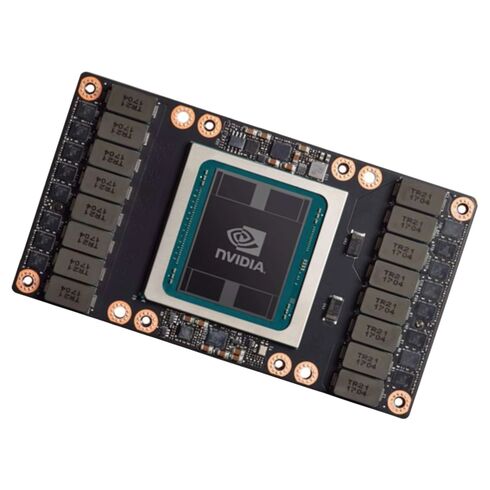

P05472-001 HPE Tesla V100 SXM2 Volta GPU Accelerator Nvidia 32GB


HPE Nvidia Tesla V100 SXM2 Volta GPU Accelerator

Key Product Features

- 32GB of high-performance HBM2 memory for intensive computational tasks

- 640 Tensor Cores optimized for deep learning and AI workloads

- 5120 CUDA Cores for massively parallel processing

- Impressive memory bandwidth of 900 GB/s for fast data transfers

- NVLink support for superior scalability and connectivity

Manufacturer Overview

- Manufacturer: HPE

- Part Number: P05472-001

Engine Architecture

Advanced Volta Architecture

- Built on the cutting-edge Volta architecture, offering extraordinary computing power

- Supports modern deep learning frameworks and AI processes

Performance Details

- GPU Clock: 1290 MHz

- Boost Clock: 1530 MHz

- Double Precision Performance: 7.8 Teraflops (TFLOPS)

- Single Precision Performance: 15.7 Teraflops (TFLOPS)

- Deep Learning Performance: 125 Teraflops (TFLOPS)
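
The throughput figures above follow from core counts and clock speed. The sketch below reproduces them, assuming a 1530 MHz boost clock, one fused multiply-add (2 FLOPs) per CUDA core per cycle, FP64 at half the FP32 rate, and 64 fused multiply-adds per Tensor Core per cycle. These per-cycle rates are assumptions about the Volta design rather than values stated in this listing, and the results are theoretical peaks, not measured performance.

```python
# Peak-throughput arithmetic for the V100 SXM2 (theoretical, not a benchmark).
CUDA_CORES = 5120
TENSOR_CORES = 640
BOOST_CLOCK_HZ = 1530e6  # assumed SXM2 boost clock


def peak_tflops(units, flops_per_unit_per_cycle, clock_hz):
    """Units x FLOPs per unit per cycle x cycles per second, in TFLOPS."""
    return units * flops_per_unit_per_cycle * clock_hz / 1e12


fp32_tflops = peak_tflops(CUDA_CORES, 2, BOOST_CLOCK_HZ)          # 1 FMA = 2 FLOPs
fp64_tflops = fp32_tflops / 2                                     # assumed 1:2 FP64 rate
tensor_tflops = peak_tflops(TENSOR_CORES, 64 * 2, BOOST_CLOCK_HZ)  # 64 FMAs per cycle

print(f"FP32:   {fp32_tflops:.1f} TFLOPS")
print(f"FP64:   {fp64_tflops:.1f} TFLOPS")
print(f"Tensor: {tensor_tflops:.1f} TFLOPS")
```

Under these assumptions the results land close to the 15.7, 7.8, and 125 TFLOPS quoted above.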

Memory Specifications

- Memory Size: 32GB HBM2

- Memory Interface: 4096-bit width for high-speed data access

- Error Correction Code (ECC): Yes, ensuring data integrity for critical workloads

- Memory Clock Speed: 876 MHz

- Memory Bandwidth: 900 GB/s, enabling rapid data movement
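
The bandwidth figure can be cross-checked against the memory clock. The sketch below assumes a 4096-bit HBM2 interface (four 1024-bit stacks) and two transfers per clock cycle; both are assumptions about the V100's memory subsystem, and the result is a theoretical peak.

```python
# Theoretical HBM2 bandwidth from clock, data rate, and bus width.
MEMORY_CLOCK_HZ = 876e6   # memory clock from the specification list
TRANSFERS_PER_CLOCK = 2   # assumed double data rate
BUS_WIDTH_BITS = 4096     # assumed: four HBM2 stacks x 1024 bits each

bytes_per_transfer = BUS_WIDTH_BITS / 8
bandwidth_gb_s = MEMORY_CLOCK_HZ * TRANSFERS_PER_CLOCK * bytes_per_transfer / 1e9

print(f"Peak memory bandwidth: {bandwidth_gb_s:.0f} GB/s")
```

The result is roughly 897 GB/s, which is normally rounded to the 900 GB/s quoted for this card.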

Connectivity & Interconnects

NVLink & High-Speed Bus Interface

- Bus Interface: NVLink, providing high-bandwidth interconnects for multi-GPU setups

- Interconnect Bandwidth: 300 GB/s, enhancing data transfer rates

Supported Technologies

- CUDA technology for accelerated GPU computing

- DirectCompute and OpenCL support for versatile programming

- OpenACC for high-performance computing on NVIDIA GPUs

- Volta Architecture, specifically designed for AI and machine learning applications

- Tensor Cores, enabling rapid matrix operations for deep learning

Power & Thermal Specifications

- Maximum Power Consumption: 300W, delivering high performance with efficient power usage

- Cooling Solution: Passive cooling system (heatsink or thermal solution not included)

Form Factor & Compatibility

- Form Factor: SXM2, designed for high-density systems

HPE Nvidia Tesla V100 SXM2

- Ideal for AI research, machine learning, and deep learning applications

- Designed to handle the most demanding computational tasks with ease

- Ensures scalability and efficiency with NVLink technology

HPE P05472-001 GPU Accelerator Overview

The HPE P05472-001 NVIDIA TESLA V100 SXM2 Volta GPU Accelerator is a high-performance computing solution designed for data-intensive applications. Equipped with 32GB of HBM2 memory, 640 Tensor Cores, and 5120 CUDA Cores, this GPU delivers up to 125 TFLOPS of deep learning performance. Its memory bandwidth of 900 GB/s keeps those cores supplied with data, making it well suited to large models and datasets.

Key Features

High-Performance Tensor Cores

The 640 Tensor Cores integrated into the TESLA V100 are optimized for deep learning tasks. These cores provide the computational power required to accelerate AI models, enabling faster training and inference times. Tensor Cores support mixed-precision computing, balancing performance and accuracy for AI workloads.
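
Mixed precision typically means low-precision (FP16) inputs combined with a wider (FP32) accumulator. The sketch below is a CPU-only illustration of why the wider accumulator matters; it uses Python's `struct` module to emulate FP16 and FP32 rounding and does not run on the GPU. The helper names and the choice of adding 0.001 ten thousand times are illustrative assumptions.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def accumulate(values, round_fn):
    """Sum values, rounding the running total after every addition."""
    total = 0.0
    for v in values:
        total = round_fn(total + v)
    return total


# FP16 inputs in both cases; only the accumulator precision differs.
values = [to_fp16(0.001)] * 10_000

fp16_sum = accumulate(values, to_fp16)  # FP16 accumulator
fp32_sum = accumulate(values, to_fp32)  # FP32 accumulator

print("FP16 accumulator:", fp16_sum)
print("FP32 accumulator:", fp32_sum)
```

With the FP16 accumulator the running total stops growing once each increment falls below half the spacing between representable values, so it ends well short of 10. The FP32 accumulator stays close to the expected sum.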

Powerful CUDA Cores for General Purpose Processing

With 5120 CUDA Cores, the TESLA V100 excels in parallel processing. CUDA Cores are essential for running complex simulations, rendering high-quality graphics, and performing scientific calculations. This makes the TESLA V100 a versatile choice for a range of applications beyond AI, including computational fluid dynamics and molecular dynamics simulations.

Exceptional Memory Bandwidth

The 900 GB/s memory bandwidth provided by the HBM2 memory lets data move quickly between on-package memory and the GPU's compute units. This matters most for bandwidth-bound tasks such as real-time analytics and video processing, where the cores would otherwise sit idle waiting for data.

NVLink Technology

NVLink interconnect technology enhances data sharing between GPUs, providing faster communication than traditional PCIe connections. This allows multiple TESLA V100 GPUs to operate in tandem, significantly boosting overall system performance. NVLink is particularly beneficial for multi-GPU setups in machine learning and high-performance computing (HPC) environments.
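
A rough way to see the difference is to compare ideal transfer times at each link's peak rate. The sketch below uses the 300 GB/s NVLink figure from this listing and assumes about 32 GB/s for a PCIe 3.0 x16 slot (both directions combined). The PCIe figure and the 1 GB payload are assumptions for illustration, and the calculation ignores latency and protocol overhead.

```python
# Ideal transfer time at peak link bandwidth (no latency or protocol overhead).
NVLINK_GB_S = 300.0     # aggregate NVLink bandwidth from the listing
PCIE3_X16_GB_S = 32.0   # assumed: PCIe 3.0 x16, both directions combined


def transfer_ms(size_gb, bandwidth_gb_s):
    """Milliseconds needed to move size_gb at a sustained bandwidth_gb_s."""
    return size_gb / bandwidth_gb_s * 1000.0


payload_gb = 1.0  # hypothetical payload, e.g. one round of gradient exchange
nvlink_ms = transfer_ms(payload_gb, NVLINK_GB_S)
pcie_ms = transfer_ms(payload_gb, PCIE3_X16_GB_S)
ratio = pcie_ms / nvlink_ms

print(f"NVLink: {nvlink_ms:.2f} ms, PCIe 3.0 x16: {pcie_ms:.2f} ms, ratio: {ratio:.1f}x")
```

Real transfers achieve less than peak on both links, so the ratio is best read as an upper bound on the benefit.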

Applications

Deep Learning and Artificial Intelligence

The TESLA V100 is engineered for deep learning applications, from natural language processing to image recognition. Its Tensor Cores optimize neural network training, reducing the time required to achieve convergence. Companies in AI research and development leverage this GPU to stay competitive in rapidly evolving industries.

High-Performance Computing (HPC)

Scientific research institutions and enterprises rely on the TESLA V100 for HPC workloads. These include weather modeling, seismic analysis, and genomic sequencing. The GPU's capability to handle parallel computations makes it indispensable for complex simulations that demand significant computational resources.

Data Analytics

With its exceptional processing power, the TESLA V100 accelerates data analytics tasks, such as real-time data streaming and predictive modeling. This allows organizations to derive actionable insights faster, aiding in decision-making and strategic planning.

Graphics Rendering

Beyond numerical computation, the TESLA V100 can accelerate GPU-based rendering. Because the SXM2 module has no display outputs, this means offline or remote rendering of complex scenes and animations for industries such as media, entertainment, and architecture.

Technical Specifications

Memory Configuration

The TESLA V100 features 32GB of HBM2 memory, designed to provide high memory bandwidth. This allows for efficient handling of large datasets and complex models, essential for deep learning and HPC applications.
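
To get a feel for what 32GB holds, the sketch below computes how many values fit at each precision. It assumes 32GB means 32 GiB and that all of it is available for data. In practice activations, gradients, optimizer state, and the CUDA context take a share, so this is an upper bound.

```python
# Upper bound on how many values fit in GPU memory at a given precision.
MEMORY_BYTES = 32 * 1024**3          # assumption: 32GB read as 32 GiB
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2}


def max_values(dtype):
    """Number of values of the given dtype that fit in MEMORY_BYTES."""
    return MEMORY_BYTES // BYTES_PER_VALUE[dtype]


for dtype in BYTES_PER_VALUE:
    print(f"{dtype}: {max_values(dtype) / 1e9:.1f} billion values")
```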

Compute Capabilities

- Tensor Cores: 640

- CUDA Cores: 5120

- Base Clock Speed: 1290 MHz

- Boost Clock Speed: 1530 MHz

Performance Metrics

In terms of raw performance, the TESLA V100 offers up to 125 TFLOPS of deep learning performance. This is a peak figure for mixed-precision matrix operations on the Tensor Cores, equivalent to 125 trillion floating-point operations per second; sustained throughput depends on the workload.

Energy Efficiency

The TESLA V100 is designed with energy efficiency in mind, delivering high performance per watt. This is particularly important in data centers where operational costs and power consumption are critical factors.
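
Performance per watt can be estimated from figures already given in this listing: the peak throughput numbers and the 300W maximum power draw. The sketch below divides one by the other. It gives a theoretical ceiling, since real workloads rarely hit peak throughput or maximum power at the same time.

```python
# Peak performance per watt from the listing's own figures.
MAX_POWER_W = 300.0
PEAK_TFLOPS = {
    "deep learning (Tensor Cores)": 125.0,
    "single precision": 15.7,
    "double precision": 7.8,
}


def gflops_per_watt(tflops, watts):
    """Convert TFLOPS to GFLOPS and divide by power draw."""
    return tflops * 1000.0 / watts


efficiency = {name: gflops_per_watt(t, MAX_POWER_W) for name, t in PEAK_TFLOPS.items()}

for name, value in efficiency.items():
    print(f"{name}: {value:.1f} GFLOPS/W")
```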

Compatibility and Integration

Server Compatibility

The HPE P05472-001 NVIDIA TESLA V100 is compatible with a range of HPE servers, including platforms in the HPE Apollo and ProLiant families. As an SXM2 module, it requires a server with SXM2 sockets rather than a standard PCIe slot, so confirm that the target system lists this part number as supported before ordering.

Operating System Support

This GPU supports major operating systems, including Linux and Windows. Drivers and software tools are available to facilitate integration and maximize performance, ensuring smooth operation across different environments.

Advanced Features

Dynamic Load Balancing

The TESLA V100's hardware scheduler distributes work across its streaming multiprocessors as they become free, so no core group sits idle while others are overloaded. This improves resource utilization and helps keep performance consistent under varying workloads.

Error-Correcting Code (ECC) Memory

ECC memory in the TESLA V100 helps detect and correct memory errors in real-time. This enhances the reliability of computations, which is crucial for mission-critical applications in sectors such as healthcare and finance.

Scalability for Multi-GPU Configurations

NVLink technology supports scalability by allowing multiple GPUs to work in unison. This is essential for large-scale deployments in data centers, where performance can scale close to linearly with additional GPUs for workloads that parallelize well.
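
How closely multi-GPU performance tracks GPU count depends on how much of the workload runs in parallel. The sketch below uses Amdahl's law as a simple model. It is a general formula, not an NVIDIA sizing tool, and the parallel fractions shown are hypothetical.

```python
def amdahl_speedup(parallel_fraction, gpus):
    """Speedup over one GPU when only parallel_fraction of the work scales."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / gpus)


# Hypothetical workloads: fully parallel, 99% parallel, 95% parallel.
for fraction in (1.0, 0.99, 0.95):
    speedups = [round(amdahl_speedup(fraction, n), 2) for n in (1, 2, 4, 8)]
    print(f"parallel fraction {fraction}: {speedups}")
```

Only the fully parallel case scales in exact proportion to GPU count. Even a small serial or communication-bound portion caps the gain, which is why a fast interconnect matters.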

Optimizing Performance

Leveraging TensorFlow and PyTorch

The TESLA V100 is optimized for popular AI frameworks such as TensorFlow and PyTorch. Developers can harness its power to train complex models faster, thanks to its support for CUDA and cuDNN libraries.

Tuning for HPC Workloads

Performance tuning tools provided by NVIDIA allow users to optimize the TESLA V100 for specific HPC workloads. This includes adjusting parameters to maximize throughput and minimize latency.

Monitoring and Management Tools

NVIDIA's suite of management tools enables administrators to monitor GPU performance and health. This includes real-time tracking of utilization, power consumption, and temperature, ensuring that the GPU operates within optimal parameters.
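
One such tool is the `nvidia-smi` command-line utility that ships with the NVIDIA driver. The sketch below polls it for utilization, power draw, and temperature. The function names are illustrative, and it assumes `nvidia-smi` is on the PATH of a machine with the driver installed.

```python
import subprocess

# Field names accepted by `nvidia-smi --query-gpu`.
QUERY_FIELDS = ["utilization.gpu", "power.draw", "temperature.gpu"]


def to_number(text):
    """Return a float, or None for non-numeric values such as [N/A]."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_row(line):
    """Turn one CSV row of nvidia-smi output into a field -> value dict."""
    values = [to_number(v.strip()) for v in line.split(",")]
    return dict(zip(QUERY_FIELDS, values))


def query_gpus():
    """Run nvidia-smi once and return one dict per GPU in the system."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return [parse_row(line) for line in result.stdout.splitlines() if line.strip()]
```

Calling `query_gpus()` on a GPU host returns a list with utilization in percent, power in watts, and temperature in degrees Celsius for each GPU.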

Comparative Analysis

TESLA V100 vs. TESLA T4

Both GPUs target data center workloads, but the TESLA V100 offers substantially higher compute throughput and memory bandwidth. The TESLA T4 runs in a 70W power envelope compared with the V100's 300W, making it a better choice for applications such as inference where power consumption is a primary concern.

TESLA V100 vs. A100

The NVIDIA A100 is the successor to the TESLA V100, offering improved performance and efficiency. However, the TESLA V100 remains a strong contender for organizations seeking a cost-effective solution for their existing infrastructure.

Use Cases Across Industries

Healthcare

In healthcare, the TESLA V100 accelerates applications such as medical imaging and genomic analysis. These capabilities enable faster diagnosis and personalized medicine, improving patient outcomes.

Financial Services

Financial institutions use the TESLA V100 for risk modeling and real-time fraud detection. Its ability to process vast datasets quickly provides a competitive edge in a fast-paced industry.

Autonomous Vehicles

The automotive industry leverages the TESLA V100 to train models for autonomous vehicles. This includes tasks such as object detection, path planning, and sensor fusion, which are critical for safe and efficient operation.

Media and Entertainment

From rendering high-quality visual effects to live streaming, the TESLA V100 supports the demanding needs of the media and entertainment industry. Its high-performance capabilities ensure smooth and efficient workflows.

Future-Proofing Your Infrastructure

Longevity and Reliability

The TESLA V100 is built for long-term reliability, ensuring consistent performance over time. Its robust design and advanced features make it a dependable choice for mission-critical applications.

Support for Emerging Technologies

The TESLA V100 is supported by a broad range of NVIDIA driver, CUDA, and framework releases. Because support for older architectures is eventually retired, check the release notes of the CUDA toolkit and framework versions you plan to run to confirm that Volta is still supported.

Investment Protection

The TESLA V100 offers high performance and multi-GPU scalability, which helps protect infrastructure investments. Its versatility across AI, HPC, and analytics workloads keeps it useful in diverse computing environments.