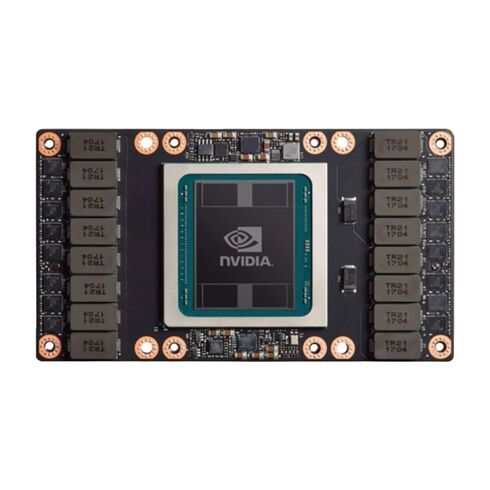

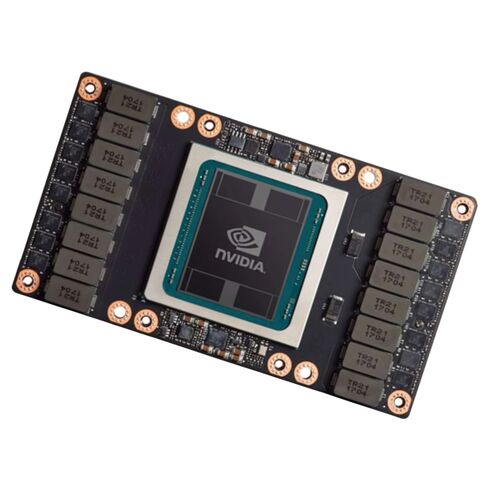

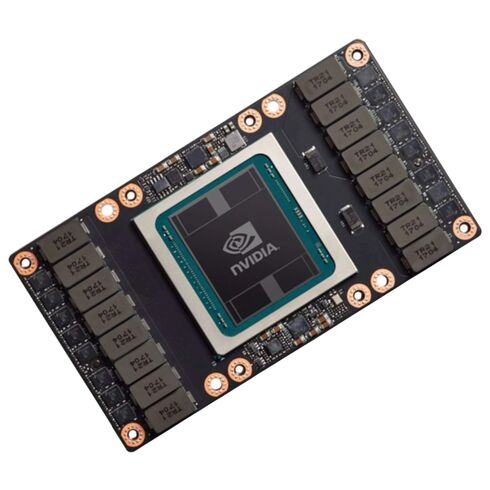

P05912-001 HPE Tesla V100 SXM2 Volta GPU Accelerator Nvidia 32GB HBM2

- Free Ground Shipping

- Min. 6-month Replacement Warranty

- Genuine/Authentic Products

- Easy Return and Exchange

- Different Payment Methods

- Best Price

- We Guarantee Price Matching

- Tax-Exempt Facilities

- 24/7 Live Chat, Phone Support

- Visa, MasterCard, Discover, and Amex

- JCB, Diners Club, UnionPay

- PayPal, ACH/Bank Transfer (11% Off)

- Apple Pay, Amazon Pay, Google Pay

- Buy Now, Pay Later - Affirm, Afterpay

- GOV/EDU/Institutions PO's Accepted

- Invoices

- Deliver Anywhere

- Express Delivery in the USA and Worldwide

- Ship to APO/FPO

- For USA - Free Ground Shipping

- Worldwide - from $30

HPE Nvidia TESLA GPU Accelerator

Overview

Enhance your computing power with the HPE Nvidia TESLA V100 SXM2 GPU accelerator. Equipped with 32GB of HBM2 memory and 900 GB/s of memory bandwidth, this GPU delivers top-tier performance for intensive computational tasks.

Key Specifications

- Brand Name: HPE

- Part Number: P05912-001

- Model: HPE Nvidia TESLA V100 SXM2

- Memory Capacity: 32GB HBM2

- Memory Bandwidth: 900 GB/s

- CUDA Cores: 5120

- Tensor Cores: 640

- Form Factor: SXM2

- Bus Interface: NVLink

Engine Performance

- Architecture: Volta

- GPU Clock: 1246 MHz

- Boost Clock: 1530 MHz

- Double Precision Performance: 7.8 TFLOPS

- Single Precision Performance: 15.7 TFLOPS

- Deep Learning Performance: 125 TFLOPS
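The peak figures in this list can be reproduced with simple arithmetic. The sketch below is illustrative only: it assumes NVIDIA's published 1530 MHz boost clock for the SXM2 module, counts one fused multiply-add as two floating-point operations, and assumes each Tensor Core completes 64 fused multiply-adds per clock. The function name `peak_tflops` is hypothetical. These are theoretical peaks, not measured results.

```python
# Theoretical peak throughput for a Tesla V100 SXM2 (a sketch, not a benchmark).
CUDA_CORES = 5120        # from the specification list
TENSOR_CORES = 640       # from the specification list
BOOST_CLOCK_HZ = 1530e6  # assumption: NVIDIA's published SXM2 boost clock


def peak_tflops(units: int, flops_per_unit_per_clock: int, clock_hz: float) -> float:
    """Return theoretical peak throughput in TFLOPS."""
    return units * flops_per_unit_per_clock * clock_hz / 1e12


# One fused multiply-add (FMA) per CUDA core per clock = 2 floating-point operations.
fp32 = peak_tflops(CUDA_CORES, 2, BOOST_CLOCK_HZ)

# Volta has half as many FP64 units as FP32 units, so FP64 peak is half of FP32.
fp64 = fp32 / 2

# Assumption: each Tensor Core performs 64 FMAs (a 4x4x4 multiply-accumulate) per clock.
tensor = peak_tflops(TENSOR_CORES, 64 * 2, BOOST_CLOCK_HZ)

print(f"FP32: {fp32:.1f}  FP64: {fp64:.1f}  Tensor: {tensor:.1f} TFLOPS")
# prints "FP32: 15.7  FP64: 7.8  Tensor: 125.3 TFLOPS"
```

Real workloads rarely reach these peaks, since they are limited by memory traffic, clock behavior under load, and how well the code maps to the hardware.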

Memory Details

- Memory Type: HBM2

- Interface Width: 4096-bit

- Memory Clock: 876 MHz

- Error Correction Code: Yes (ECC Support)
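The 900 GB/s bandwidth figure follows from the memory interface width and clock. The sketch below assumes a 4096-bit HBM2 interface (the width NVIDIA documents for the V100) and double-data-rate signaling; the function name `memory_bandwidth_gbs` is hypothetical.

```python
# Peak memory bandwidth estimate for the V100's HBM2 (a sketch under stated assumptions).
BUS_WIDTH_BITS = 4096    # assumption: V100 HBM2 interface width
MEMORY_CLOCK_HZ = 876e6  # from the specification list
TRANSFERS_PER_CLOCK = 2  # assumption: HBM2 transfers data on both clock edges


def memory_bandwidth_gbs(bus_bits: int, clock_hz: float, transfers_per_clock: int) -> float:
    """Return peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_bits / 8
    return bytes_per_transfer * clock_hz * transfers_per_clock / 1e9


bandwidth = memory_bandwidth_gbs(BUS_WIDTH_BITS, MEMORY_CLOCK_HZ, TRANSFERS_PER_CLOCK)
print(f"{bandwidth:.0f} GB/s")  # prints "897 GB/s", which rounds to the quoted 900 GB/s
```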

Connectivity and Compatibility

- Supported Technologies: CUDA, DirectCompute, OpenCL, OpenACC, Volta Architecture, Tensor Cores

- Interconnect Bandwidth: 300 GB/s

Power and Cooling

- Maximum Power Consumption: 300W

- Cooling System: Passive (Heatsink/Thermal Solution Not Included)

NVLink Support

The HPE Nvidia TESLA V100 supports NVLink for high-speed interconnect, ensuring seamless scalability in large multi-GPU configurations.

Advanced Performance

With powerful Tensor cores and an efficient Volta architecture, this GPU is designed to accelerate machine learning, deep learning, and scientific computing workloads.

HPE P05912-001 GPU Accelerator

The HPE P05912-001 Tesla V100 SXM2 Volta GPU Accelerator is a high-performance computing solution designed to accelerate workloads across a wide range of applications, from artificial intelligence (AI) and machine learning to scientific simulations and big data analytics. This GPU is powered by Nvidia’s Volta architecture, bringing with it unprecedented processing power and memory bandwidth to handle even the most demanding tasks. With 32GB of HBM2 memory, 640 Tensor Cores, and 5120 CUDA Cores, the Tesla V100 delivers the power needed to perform at the highest level in modern computing environments.

Key Features of HPE P05912-001 Tesla V100 SXM2

- 32GB HBM2 Memory

- 640 Tensor Cores

- 5120 CUDA Cores

- Memory Bandwidth of 900GB/s

- NVLink Support for Scalable Performance

- Advanced Volta Architecture

Advanced Volta Architecture

At the heart of the HPE P05912-001 Tesla V100 SXM2 is the Nvidia Volta architecture, which introduces several key advancements over previous GPU architectures. These include Tensor Cores, designed specifically to accelerate deep learning workloads, and second-generation NVLink, which raises the bandwidth available for communication between GPUs compared with the first generation used in Pascal. The Volta architecture offers significant improvements in performance and efficiency, making the Tesla V100 well suited to high-performance computing tasks such as AI model training, data analytics, and scientific research.

Performance and Computing Power

The HPE P05912-001 Tesla V100 SXM2 GPU draws its performance from 5120 CUDA Cores that together execute thousands of threads in parallel. This level of parallelism suits environments that demand high-throughput computing, such as large-scale simulations, deep learning, and scientific computation. With a memory bandwidth of 900GB/s, the Tesla V100 can keep those cores supplied with data, reducing memory bottlenecks when processing large datasets.

Tensor Core Technology

The 640 Tensor Cores in the HPE P05912-001 Tesla V100 SXM2 are specialized units designed to accelerate matrix operations, which are essential for deep learning algorithms. Each Tensor Core performs a small matrix multiply-accumulate in hardware, so the Tesla V100 can run the matrix multiplications and convolutions behind neural networks far more efficiently than GPUs that rely on general-purpose cores alone. This reduces the time required for training AI models and makes the Tesla V100 a valuable tool for AI researchers and developers.
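To make the matrix operation concrete, here is a minimal pure-Python sketch of the multiply-accumulate D = A x B + C on 4x4 matrices, the tile size a Volta Tensor Core works on. It is only a model of the arithmetic: the hardware takes FP16 inputs and can accumulate in FP32, while this sketch uses ordinary Python floats. The function name `mma_4x4` is hypothetical.

```python
# Model of a 4x4 matrix multiply-accumulate, D = A x B + C (illustrative only).
def mma_4x4(a, b, c):
    """Return (d, fma_count) where d = a x b + c for 4x4 lists of floats."""
    n = 4
    d = [row[:] for row in c]  # start from the accumulator matrix C
    fma_count = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                d[i][j] += a[i][k] * b[k][j]  # one fused multiply-add
                fma_count += 1
    return d, fma_count


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
a = [[float(4 * i + j) for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]

d, fmas = mma_4x4(a, identity, ones)
print(fmas)  # prints 64: the multiply-adds needed for one 4x4x4 tile
```

Multiplying by the identity and adding a matrix of ones simply returns each element of `a` plus one, which makes the result easy to check by hand.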

Memory and Bandwidth for High-Demand Workloads

Equipped with 32GB of high-bandwidth memory (HBM2), the Tesla V100 is capable of handling massive datasets with ease. HBM2 memory provides a high-capacity, high-speed memory solution, allowing the GPU to quickly access and process data. This large memory pool is especially beneficial for workloads such as large-scale neural network training, scientific simulations, and video rendering, where large datasets are the norm. The memory bandwidth of 900GB/s ensures that data is transferred efficiently between memory and processing cores, contributing to overall system performance.
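As a rough way to judge whether a training job fits in 32GB, the sketch below applies a common rule of thumb: FP32 weights, gradients, and two optimizer state buffers take about four copies of the parameters. The multiplier, the function names, and the treatment of 32GB as 32 GiB are all assumptions for illustration, and activation memory is deliberately ignored, so real requirements will be higher.

```python
# Rule-of-thumb check of training state against 32GB of GPU memory (a sketch).
GPU_MEMORY_BYTES = 32 * 1024**3  # assumption: 32GB treated as 32 GiB


def training_footprint_bytes(num_params: int, bytes_per_param: int = 4,
                             state_copies: int = 4) -> int:
    """Estimate bytes for weights + gradients + two optimizer buffers.

    Assumption: four FP32 copies of the parameters; activations are excluded.
    """
    return num_params * bytes_per_param * state_copies


def fits_on_gpu(num_params: int) -> bool:
    """Return True if the estimated training state fits in GPU memory."""
    return training_footprint_bytes(num_params) <= GPU_MEMORY_BYTES


print(fits_on_gpu(1_000_000_000))  # prints True  (about 16 GB of state)
print(fits_on_gpu(3_000_000_000))  # prints False (about 48 GB of state)
```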

NVLink for Scalable Performance

The Tesla V100 SXM2 supports Nvidia’s NVLink, a high-bandwidth, energy-efficient interconnect technology that allows multiple GPUs to communicate with one another more quickly and effectively. NVLink is designed to scale GPU performance across multiple units, enabling large-scale systems that can tackle the most demanding computational tasks. By using NVLink, users can combine multiple Tesla V100 GPUs in a single system to further increase processing power and memory capacity. This scalability is particularly beneficial in AI research, where training large models requires vast computational resources.

Enhanced System Interconnect

NVLink provides significantly higher bandwidth than traditional PCIe connections: up to 300GB/s of aggregate bi-directional bandwidth per GPU (six links at 50GB/s each), compared to roughly 32GB/s bi-directional for a PCIe Gen 3 x16 slot. This allows for faster data transfer rates and enables high-performance applications to scale effectively. Whether you are running complex simulations or training cutting-edge machine learning models, NVLink helps the GPUs in a multi-GPU system exchange data quickly enough to work together as a cohesive unit.
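To put the two bandwidth figures in perspective, the sketch below compares ideal transfer times for a hypothetical 16 GB payload. It uses the peak numbers quoted above and ignores latency, protocol overhead, and topology, so real transfers will be slower; the names `transfer_seconds` and `payload_gb` are illustrative.

```python
# Ideal transfer-time comparison at peak bandwidth (a sketch, not a measurement).
NVLINK_GBS = 300.0  # aggregate bi-directional NVLink bandwidth, from the text
PCIE3_GBS = 32.0    # bi-directional PCIe Gen 3 bandwidth, from the text


def transfer_seconds(size_gb: float, bandwidth_gbs: float) -> float:
    """Return the ideal time in seconds to move size_gb at bandwidth_gbs."""
    return size_gb / bandwidth_gbs


payload_gb = 16.0  # hypothetical payload, e.g. model state shared between GPUs

t_nvlink = transfer_seconds(payload_gb, NVLINK_GBS)
t_pcie = transfer_seconds(payload_gb, PCIE3_GBS)
speedup = t_pcie / t_nvlink

print(f"NVLink: {t_nvlink * 1000:.1f} ms, PCIe Gen 3: {t_pcie * 1000:.1f} ms")
print(f"Ideal speedup: {speedup:.2f}x")
```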

Applications of the HPE P05912-001

The HPE P05912-001 Tesla V100 SXM2 is ideal for a range of high-performance computing (HPC) and AI applications. Below are some key areas where the Tesla V100 is being used to deliver transformative results:

Artificial Intelligence and Machine Learning

AI and machine learning workloads, particularly deep learning, are some of the most demanding tasks for modern computing systems. The Tesla V100 excels in this area due to its specialized Tensor Cores, which accelerate training and inference for deep neural networks (DNNs). Researchers and engineers working on AI projects will benefit from the Tesla V100’s ability to dramatically reduce the time required to train models, enabling more rapid experimentation and development of AI applications.

Data Science and Analytics

In the field of data science, the Tesla V100’s powerful GPU cores and memory bandwidth make it an excellent tool for processing and analyzing large datasets. From financial modeling to predictive analytics, the Tesla V100 helps accelerate tasks such as data preprocessing, feature extraction, and statistical analysis, allowing data scientists to derive insights faster and more efficiently. With 32GB of memory, datasets up to that size can stay resident on the GPU, avoiding repeated transfers from host memory or disk-based storage.

Scientific Research and Simulations

The Tesla V100 is also widely used in scientific research, where complex simulations require massive computational resources. Whether simulating physical phenomena, modeling chemical reactions, or analyzing biological data, the Tesla V100’s combination of CUDA cores, Tensor Cores, and high-bandwidth memory makes it an ideal choice for researchers working in fields such as genomics, climate modeling, and quantum physics. The GPU’s performance enables researchers to run simulations faster, accelerating discovery and innovation in a range of scientific disciplines.

HPE P05912-001 Tesla V100 SXM2

The HPE P05912-001 Tesla V100 SXM2 stands out as one of the most powerful GPUs available for modern computing applications. Here are some reasons why you should consider this GPU for your system:

Unmatched Performance for AI and HPC

With its 5120 CUDA cores, 640 Tensor Cores, and 32GB of HBM2 memory, the Tesla V100 is designed to provide high performance for artificial intelligence, machine learning, and high-performance computing applications. Whether you are working on large-scale AI model training or running complex scientific simulations, the Tesla V100 helps complete your workloads faster and more efficiently than CPUs alone or GPUs that lack Tensor Cores.

Future-Proof Technology

The Tesla V100’s support for NVLink and its Volta architecture help keep your system relevant as new technologies and software optimizations emerge. The Tesla V100 is an investment in high-performance computing that will continue to provide value as the demands of AI, machine learning, and scientific computing evolve.

Scalability for Larger Systems

For businesses and research organizations that need even greater computational power, the Tesla V100’s support for NVLink allows for the creation of multi-GPU systems. These scalable systems provide a significant boost in processing power and memory, enabling organizations to tackle even the largest and most complex workloads with ease.

Cost-Effective Solution for High-End Computing

While high-performance computing systems can be expensive, the HPE P05912-001 Tesla V100 offers an effective balance of price and performance. With its powerful features and scalability, the Tesla V100 provides a cost-effective solution for businesses and research institutions that need top-tier computational power without the need to invest in more expensive alternatives.