Q2N66A HPE NVIDIA Tesla V100 SXM2 16GB PCI Express 3.0 x16 Computational Accelerator

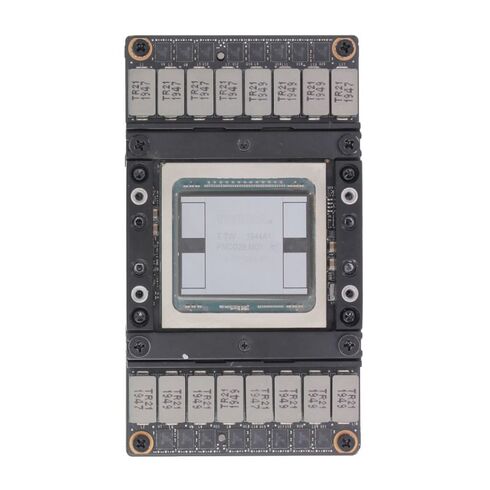

HPE Q2N66A NVIDIA Tesla V100 SXM2 16GB Accelerator

The HPE Q2N66A NVIDIA Tesla V100 SXM2 16GB accelerator is engineered to meet the demands of modern high-performance computing, data analytics, AI model training, and GPU-accelerated workloads. Designed in collaboration between HPE and NVIDIA, this advanced processing unit supports optimized parallel computing, pushing performance boundaries across enterprise environments, research clusters, and data-intensive infrastructures.

Product Details

- Brand: HPE

- Part Number: Q2N66A

- GPU: NVIDIA Tesla V100 SXM2

- Product Type: SXM2 computational accelerator (PCI Express 3.0 x16 host interface)

Technical Specifications

- GPU Architecture: NVIDIA Volta-based computational engine.

- Memory Capacity: 16GB HBM2 high-bandwidth memory with up to 900 GB/s of peak bandwidth.

- Interface: PCI Express 3.0 x16 to the host CPU, plus NVLink 2.0 (up to 300 GB/s aggregate) for direct GPU-to-GPU communication.

- Form Factor: SXM2 module designed for GPU-dense server configurations.

- Performance: High throughput for AI learning, neural networks, data simulations, and engineering workloads.
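As a rough sizing aid for the 16GB memory capacity, the Python sketch below estimates how many model parameters fit on one card. The per-parameter byte counts are common rules of thumb rather than HPE or NVIDIA figures, and activations, workspace buffers, and framework overhead are ignored, so practical limits are lower.

```python
# Rough sizing sketch: how many parameters fit in the accelerator's 16GB of HBM2?
# Assumptions (rules of thumb, not vendor figures):
#   - 16GB is treated as 16 GiB.
#   - FP16 inference stores 2 bytes per parameter.
#   - Mixed-precision training with Adam stores ~16 bytes per parameter:
#     FP16 weights (2) + FP16 gradients (2) + FP32 master weights (4)
#     + two FP32 Adam moments (4 + 4).
# Activations, workspace buffers, and framework overhead are ignored.

HBM2_BYTES = 16 * 1024**3

BYTES_PER_PARAM = {
    "fp16_inference": 2,
    "mixed_precision_adam_training": 2 + 2 + 4 + 4 + 4,
}


def max_params(bytes_per_param: int, memory_bytes: int = HBM2_BYTES) -> int:
    """Upper bound on the parameter count whose state fits in memory."""
    return memory_bytes // bytes_per_param


if __name__ == "__main__":
    for mode, nbytes in BYTES_PER_PARAM.items():
        print(f"{mode}: ~{max_params(nbytes) / 1e9:.2f} billion parameters")
```

Under these assumptions the card holds roughly 8.6 billion parameters for FP16 inference but only about 1.07 billion for mixed-precision training with Adam, which is why larger models are usually split across several GPUs.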

Performance-Enhancing Features

- Massively parallel processing for reduced training and inference times.

- Next-level performance for VDI environments, complex modeling, and scientific workloads.

- Consistent, stable, and predictable results under heavy computational pressure.

Supported Platforms

- HPE ProLiant server families with SXM2 support.

- HPE Apollo systems optimized for multi-GPU workloads.

- High-density GPU racks and AI-focused server platforms.

HBM2 Memory Benefits

- Substantial memory bandwidth for faster GPU-to-data access

- Reduced latency in multi-stage computational operations

- Higher efficiency compared to traditional GDDR-based memory systems

- Ideal for deep learning model training and inference pipelines
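The bandwidth figure follows from the width of the HBM2 interface and its data rate. The sketch below reproduces it, assuming the commonly published V100 values of a 4,096-bit bus (four stacks of 1,024 bits each) and a memory clock of about 877 MHz at double data rate.

```python
# Peak DRAM bandwidth = (bus width in bytes) x (transfers per second).
# Assumed V100 figures: four HBM2 stacks x 1,024 bits = 4,096-bit bus,
# memory clock ~877 MHz, two transfers per clock (double data rate).


def peak_bandwidth_gbs(bus_width_bits: int, mem_clock_mhz: float,
                       transfers_per_clock: int = 2) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = mem_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


if __name__ == "__main__":
    v100 = peak_bandwidth_gbs(bus_width_bits=4096, mem_clock_mhz=877)
    print(f"V100 HBM2 peak: {v100:.0f} GB/s")  # ~898 GB/s, marketed as 900 GB/s
```

HBM2 reaches this bandwidth with a very wide bus at a modest clock rather than a narrow bus at a high clock, which is the usual explanation for its efficiency advantage over GDDR memory.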

Use Cases Across Industries

- Deep learning and neural network training

- Big data analytics and high-speed processing

- Scientific computing and simulation

- Visualization, rendering, and parallel workloads

Key Strengths of the Tesla V100 SXM2

- Industry-leading performance for AI and HPC workloads

- Robust memory architecture for large-scale computations

- Optimized for GPU-accelerated enterprise environments

- Reliable performance in demanding operational conditions

Data Center-Focused Design Elements

- Supports multi-GPU frameworks for optimized performance scaling.

- Designed to run consistently under continuous processing loads.

- Enhanced thermal management improves GPU longevity and workload stability.

- High energy efficiency for reduced operational costs over time.
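Multi-GPU scaling in data-parallel training is often limited by the gradient all-reduce between GPUs. The sketch below is a bandwidth-only cost model of a ring all-reduce, in which each of N GPUs sends about 2(N-1)/N times the message size. The link speeds are theoretical per-direction figures (about 15.75 GB/s for PCI Express 3.0 x16 and 25 GB/s per NVLink 2.0 link); the two-links-per-hop topology and the 100M-parameter model are hypothetical examples.

```python
# Bandwidth-only cost model for a ring all-reduce, the collective that
# data-parallel training uses to average gradients across GPUs.
# In a ring of N GPUs, each GPU sends (and receives) 2 * (N - 1) / N * S bytes,
# where S is the message size. Per-step latency and compute overlap are ignored.

PCIE3_X16_GBS = 15.75    # PCIe 3.0 x16, theoretical, per direction
NVLINK2_LINK_GBS = 25.0  # one NVLink 2.0 link, per direction


def ring_allreduce_seconds(message_bytes: float, num_gpus: int,
                           link_gbs: float) -> float:
    """Estimated time for one ring all-reduce at the given per-hop bandwidth."""
    if num_gpus < 2:
        return 0.0
    bytes_sent_per_gpu = 2 * (num_gpus - 1) / num_gpus * message_bytes
    return bytes_sent_per_gpu / (link_gbs * 1e9)


if __name__ == "__main__":
    grads = 100e6 * 4  # hypothetical model: 100M FP32 gradients = 400 MB
    for label, bw in [("PCIe 3.0 x16", PCIE3_X16_GBS),
                      ("NVLink 2.0, 2 links per hop (assumed)", 2 * NVLINK2_LINK_GBS)]:
        ms = ring_allreduce_seconds(grads, num_gpus=4, link_gbs=bw) * 1e3
        print(f"{label}: {ms:.1f} ms per all-reduce")
```

Real collectives add latency and contention, but the model shows why faster GPU-to-GPU links shorten the communication phase of every training step.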

HPE Q2N66A NVIDIA Tesla V100 SXM2 Computational Accelerator

The HPE Q2N66A, built around the NVIDIA Tesla V100 SXM2 16GB accelerator, is a cornerstone technology for high-performance computing (HPC), artificial intelligence (AI), and data-intensive scientific research. This computational accelerator is not merely a component; it is an engine designed to tackle problems that were previously impractical to solve within realistic timeframes. The module mounts in an SXM2 socket and connects to the host CPU over PCI Express 3.0 x16, and it is engineered for maximum throughput in servers that demand the highest levels of parallel processing power. In these systems, faster computation translates directly into quicker scientific results, more capable AI models, and more efficient enterprise analytics.

The Volta GV100 GPU

At the heart of the HPE Q2N66A lies the Nvidia Volta GV100 graphics processing unit (GPU), a microarchitectural marvel that redefined the capabilities of computational accelerators upon its release. Moving beyond pure graphics rendering, Volta was purpose-built for computational workloads, introducing features that dramatically accelerated both traditional HPC and modern AI.

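CUDA Cores and Tensor Cores: A Dual Engine for Computation

The Tesla V100 SXM2 has 5,120 CUDA cores, the general-purpose parallel workhorses that handle tasks ranging from fluid dynamics simulations to financial modeling. Volta's redesigned streaming multiprocessors make these cores more power-efficient than those of the previous Pascal generation. Alongside them, 640 Tensor Cores perform mixed-precision matrix multiply-accumulate operations (FP16 inputs with FP16 or FP32 accumulation), accelerating the dense matrix math at the heart of deep learning training and inference.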
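The headline throughput numbers follow from unit counts multiplied by clock rate. The sketch below assumes NVIDIA's published V100 SXM2 figures (a 1,530 MHz boost clock, 2,560 FP64 units, and 640 Tensor Cores, alongside the 5,120 CUDA cores) and counts a fused multiply-add (FMA) as two floating-point operations.

```python
# Peak throughput = execution units x FLOPs per unit per clock x clock rate.
# Assumed V100 SXM2 figures (NVIDIA's published specs): 1,530 MHz boost clock,
# 5,120 FP32 CUDA cores, 2,560 FP64 units, 640 Tensor Cores.
# A fused multiply-add (FMA) counts as 2 FLOPs.

BOOST_CLOCK_HZ = 1.53e9


def peak_tflops(units: int, fma_per_unit_per_clock: int,
                clock_hz: float = BOOST_CLOCK_HZ) -> float:
    """Theoretical peak in TFLOPS, counting each FMA as two operations."""
    return units * fma_per_unit_per_clock * 2 * clock_hz / 1e12


if __name__ == "__main__":
    print(f"FP64:   {peak_tflops(2560, 1):.1f} TFLOPS")
    print(f"FP32:   {peak_tflops(5120, 1):.1f} TFLOPS")
    # Each Tensor Core performs a 4x4x4 matrix multiply-accumulate (64 FMAs) per clock.
    print(f"Tensor: {peak_tflops(640, 64):.1f} TFLOPS")
```

This yields about 7.8 TFLOPS FP64, 15.7 TFLOPS FP32, and 125 TFLOPS of Tensor Core throughput. These are theoretical ceilings that real kernels approach only when they are not limited by memory traffic.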

The SXM2 Form Factor and NVLink Interconnect

The "SXM2" in the product name is a critical differentiator from the PCIe card variants of the Tesla V100. The SXM2 form factor exposes NVIDIA's NVLink 2.0 interconnect: six links that give each GPU up to 300 GB/s of aggregate bandwidth for direct GPU-to-GPU traffic, roughly ten times what a PCI Express 3.0 x16 slot provides. Combined with 16GB of HBM2 on a 4,096-bit memory interface delivering 900 GB/s of memory bandwidth, data moves quickly both within each GPU and between GPUs. For data-centric workloads, where processing speed is often limited by the time it takes to move data into the compute units, this bandwidth is decisive. It allows faster processing of massive datasets, larger batch sizes in AI training, and more complex simulations that need vast amounts of data held in readily accessible memory.
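A roofline estimate makes the bandwidth argument concrete: a kernel whose arithmetic intensity (FLOPs per byte moved to or from memory) falls below the ridge point is limited by memory bandwidth rather than compute. The sketch assumes 900 GB/s of memory bandwidth and a 15.7 TFLOPS FP32 peak, and uses SAXPY as an example of a bandwidth-bound kernel.

```python
# Roofline sketch: a kernel is memory-bound when its arithmetic intensity
# (FLOPs per byte moved to/from HBM2) is below the "ridge point".
# Assumed figures: 15.7 TFLOPS peak FP32 (published V100 SXM2 spec)
# and 900 GB/s peak HBM2 bandwidth.

PEAK_FP32_FLOPS = 15.7e12
PEAK_BW_BYTES_PER_S = 900e9


def attainable_flops(arithmetic_intensity: float) -> float:
    """Roofline bound: min(compute roof, bandwidth x FLOPs-per-byte)."""
    return min(PEAK_FP32_FLOPS, PEAK_BW_BYTES_PER_S * arithmetic_intensity)


RIDGE_POINT = PEAK_FP32_FLOPS / PEAK_BW_BYTES_PER_S  # ~17.4 FLOPs per byte

if __name__ == "__main__":
    # SAXPY (y = a*x + y) in FP32: 2 FLOPs per element; reads x and y, writes y = 12 bytes.
    saxpy = 2 / 12
    print(f"ridge point: {RIDGE_POINT:.1f} FLOP/byte")
    print(f"SAXPY bound: {attainable_flops(saxpy) / 1e9:.0f} GFLOPS (memory-bound)")
```

At roughly 17 FLOPs per byte, the ridge point shows that many simulation and data-processing kernels sit on the bandwidth-limited side, which is where high memory bandwidth pays off.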

Thermal Management

SXM2 modules mount directly onto the server's GPU baseboard through high-density mezzanine connectors rather than plugging into a PCIe slot. This design allows a more robust power delivery system (up to 300W per GPU, compared with 250W for the PCIe version of the V100) and a direct, integrated path for liquid or advanced air cooling. As a result, the V100 can sustain its boost clocks for longer periods, delivering consistent peak performance in sustained workloads, a crucial factor for multi-day HPC simulations or AI training jobs.
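Sustained power also sets the energy cost of long jobs. The sketch below estimates GPU energy as power multiplied by time, assuming every module draws its full 300W for the whole run; the 72-hour, 8-GPU job is hypothetical, and CPU, fan, and facility cooling power are excluded.

```python
# Energy budget for a long-running job: energy = power x time.
# Assumptions: every GPU draws its full 300 W rating for the whole run;
# CPUs, memory, fans, and facility cooling are NOT included.

GPU_MAX_POWER_W = 300.0


def job_energy_kwh(hours: float, num_gpus: int = 1,
                   watts_per_gpu: float = GPU_MAX_POWER_W) -> float:
    """Upper-bound GPU energy in kilowatt-hours."""
    return watts_per_gpu * num_gpus * hours / 1000.0


if __name__ == "__main__":
    # Hypothetical job: a 3-day (72 h) training run on 8 SXM2 GPUs.
    print(f"{job_energy_kwh(hours=72, num_gpus=8):.1f} kWh")
```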

High-Throughput Inference

While often associated with training, the V100 is also a capable inference engine for deploying trained models in production. Its Tensor Cores accelerate FP16 inference, and its INT8 dot-product instructions speed up quantized models, which suits services where low latency and high throughput are critical, such as real-time translation, fraud detection, or autonomous vehicle perception stacks.
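For quantized inference, Volta executes INT8 dot products with the DP4A instruction (exposed in CUDA as the `__dp4a` intrinsic), which multiplies four pairs of 8-bit integers and adds the results to a 32-bit accumulator. The Python sketch below emulates that arithmetic to show how a float dot product is approximated; the symmetric per-tensor scales are assumptions, and production inference libraries typically choose scales through calibration.

```python
# Sketch of INT8 inference arithmetic as exposed on Volta by the DP4A
# instruction (CUDA intrinsic __dp4a): four int8 x int8 products summed
# into a 32-bit integer accumulator. Python integers stand in for the
# hardware registers; the symmetric per-tensor scales are assumptions.


def quantize_int8(values, scale):
    """Symmetric quantization: q = clamp(round(v / scale), -127, 127)."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dp4a(a4, b4, acc):
    """One DP4A step: dot product of two 4-element int8 vectors plus acc."""
    assert len(a4) == len(b4) == 4
    return acc + sum(x * y for x, y in zip(a4, b4))


def int8_dot(a, b, scale_a, scale_b):
    """Approximate float dot(a, b) using int8 operands and integer accumulation."""
    qa, qb = quantize_int8(a, scale_a), quantize_int8(b, scale_b)
    pad = (-len(qa)) % 4  # pad to a multiple of 4 with zeros
    qa, qb = qa + [0] * pad, qb + [0] * pad
    acc = 0
    for i in range(0, len(qa), 4):
        acc = dp4a(qa[i:i + 4], qb[i:i + 4], acc)
    return acc * scale_a * scale_b  # dequantize the integer result


if __name__ == "__main__":
    a = [0.5, -1.0, 0.25, 1.5]
    b = [1.0, 0.5, -1.5, 0.75]
    print(int8_dot(a, b, 1 / 64, 1 / 64))  # 0.75, same as the float dot product
```

With power-of-two scales and inputs that are exact multiples of the scale, as in this example, quantization is lossless; in general the INT8 result only approximates the floating-point one.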

Flexibility

The HPE Q2N66A Tesla V100 fits into enterprise-class infrastructures that require robust compute acceleration. Because it is an SXM2 module rather than a PCIe card, it needs a server with SXM2 sockets and matching power delivery and cooling; it cannot be installed in a standard PCI Express 3.0 x16 slot.

Reliability

This HPE accelerator meets stringent data center requirements, providing the stability, compatibility, and long-term operational efficiency required by business-critical infrastructures. Its optimized integration with HPE servers ensures seamless deployment and smooth workload orchestration.

Supported HPE Servers

This accelerator is designed for HPE servers that support the SXM2 form factor and the necessary high-wattage power delivery and cooling infrastructure. Primary targets include the HPE ProLiant DL560 Gen10 server for dense 4-GPU configurations and various HPE Apollo systems, which are purpose-built for HPC and AI workloads and can scale to multiple V100 SXM2 modules in a single chassis. HPE designs its server chassis and thermal solutions with specific components like the Q2N66A in mind. This ensures that the accelerator operates within its ideal thermal envelope, preventing throttling and maximizing reliability. HPE's integrated management tools, like iLO (Integrated Lights-Out), can provide telemetry on GPU health, temperature, and utilization.
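iLO reports health data from the server's management controller. From inside the host operating system, NVIDIA's `nvidia-smi` tool offers comparable per-GPU readings. The sketch below assumes the NVIDIA driver (which provides `nvidia-smi`) is installed; it queries temperature, utilization, and power draw through the `--query-gpu` option, parses the CSV output, and returns an empty list when the tool is unavailable.

```python
import shutil
import subprocess
from dataclasses import dataclass
from typing import List, Optional

# Field names accepted by nvidia-smi --query-gpu.
QUERY_FIELDS = "index,name,temperature.gpu,utilization.gpu,power.draw"


@dataclass
class GpuReading:
    index: int
    name: str
    temperature_c: Optional[float]
    utilization_pct: Optional[float]
    power_w: Optional[float]


def _to_float(field: str) -> Optional[float]:
    """Parse a numeric field; unsupported values are reported as e.g. '[N/A]'."""
    try:
        return float(field)
    except ValueError:
        return None


def parse_query_output(text: str) -> List[GpuReading]:
    """Parse --format=csv,noheader,nounits output (one GPU per line)."""
    readings = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        index, name, temp, util, power = (f.strip() for f in line.split(","))
        readings.append(GpuReading(int(index), name, _to_float(temp),
                                   _to_float(util), _to_float(power)))
    return readings


def query_gpus() -> List[GpuReading]:
    """Run nvidia-smi if it is installed and working; otherwise return []."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_query_output(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(gpu)
```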

Compatibility

This Q2N66A GPU accelerator integrates with SXM2-capable HPE server platforms, providing consistent performance, stable power delivery, and components designed to work together across multi-GPU configurations. It suits enterprises expanding their GPU clusters or deploying new AI-accelerated workloads.