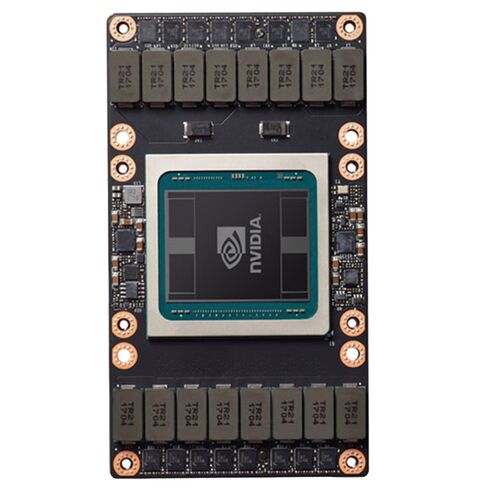

Q9U37A HPE Nvidia Tesla V100 SXM2 32GB Computational Accelerator

Key Specifications

Graphics Processing Unit

- Graphics Processor Manufacturer: Nvidia

- Model: Tesla V100 SXM2

- CUDA Support: Yes

- CUDA Cores: 5120

- Supported Parallel Processing Technology: NVLink

- Peak Double Precision Performance: 7800 GFLOPS

- Peak Single Precision Performance: 15700 GFLOPS

Memory & Bandwidth

- Dedicated Graphics Memory: 32 GB

- Memory Type: High Bandwidth Memory 2 (HBM2)

- Maximum Memory Bandwidth: 900 GB/s

Design and Cooling

Thermal Design

- Cooling System: Passive

Power Consumption

Energy Efficiency

- Maximum Power Consumption: 300 W

Why Choose the HPE Q9U37A Nvidia Tesla V100 SXM2?

- Suited to high-performance computing, with peak performance of 15.7 TFLOPS in single precision and 7.8 TFLOPS in double precision.

- 32 GB of HBM2 memory with up to 900 GB/s of bandwidth keeps data-intensive workloads supplied with data.

- Supports NVLink for multi-GPU setups and parallel processing.

- Passive cooling design has no onboard fan and relies on the airflow of the host server chassis.

- Draws up to 300 W per accelerator, a figure to plan power and cooling around in large-scale deployments.

Overview of HPE Q9U37A Nvidia Tesla V100 SXM2

The HPE Q9U37A Nvidia Tesla V100 SXM2 32GB HBM2 Computational Accelerator is a high-performance solution designed for advanced computing workloads. Engineered for industries requiring significant computational power, this accelerator empowers machine learning, deep learning, data analytics, and high-performance computing (HPC) applications. Its integration with HPE systems ensures seamless compatibility, optimized performance, and scalability.

Unmatched Performance with Nvidia Tesla V100

At the core of the HPE Q9U37A lies the Nvidia Tesla V100 GPU, built on the Volta architecture. The Tesla V100 combines 640 Tensor Cores and 5120 CUDA cores to deliver up to 125 teraflops of mixed-precision deep learning performance. This throughput makes it suitable for training large-scale AI models and running complex simulations.
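The headline figures can be roughly reproduced from the core counts. The sketch below is illustrative arithmetic only: the 1.53 GHz boost clock and the rate of 64 fused multiply-adds per Tensor Core per clock are assumptions taken from Nvidia's published V100 material, not from this listing, and `peak_tflops` is a hypothetical helper name.

```python
# Peak-throughput arithmetic for the Tesla V100 SXM2.
# Core counts come from the listing; the clock and per-core rates are assumptions.
CUDA_CORES = 5120
TENSOR_CORES = 640
BOOST_CLOCK_GHZ = 1.53              # assumption: Nvidia's published boost clock
FLOPS_PER_FMA = 2                   # a fused multiply-add counts as two operations
FMA_PER_CUDA_CORE_PER_CLOCK = 1
FMA_PER_TENSOR_CORE_PER_CLOCK = 64  # assumption: one 4x4x4 matrix FMA per clock


def peak_tflops(units, fma_per_unit_per_clock, clock_ghz=BOOST_CLOCK_GHZ):
    """Return the theoretical peak in TFLOPS for `units` execution units."""
    gflops = units * fma_per_unit_per_clock * FLOPS_PER_FMA * clock_ghz
    return gflops / 1000.0


fp32_tflops = peak_tflops(CUDA_CORES, FMA_PER_CUDA_CORE_PER_CLOCK)
tensor_tflops = peak_tflops(TENSOR_CORES, FMA_PER_TENSOR_CORE_PER_CLOCK)

print(f"FP32 peak:   {fp32_tflops:.1f} TFLOPS")
print(f"Tensor peak: {tensor_tflops:.1f} TFLOPS")
```

Under these assumptions the results land close to the listed 15700 GFLOPS single-precision figure and the 125 teraflops deep learning figure. Both are theoretical peaks; sustained application performance is typically lower.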

Volta Architecture: Revolutionizing AI and HPC

The Volta architecture introduces Tensor Cores, which are designed specifically to accelerate AI workloads. These cores let the V100 perform mixed-precision calculations efficiently: matrix inputs are multiplied in FP16 and the products are accumulated in FP32, which preserves accuracy while raising throughput. The redesigned Volta SM (Streaming Multiprocessor) also improves energy efficiency and workload scalability.
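To see why the accumulator precision matters in mixed-precision arithmetic, the following sketch emulates FP16 and FP32 rounding in plain Python using the standard `struct` module. It is a numerical illustration only, not how Tensor Cores are programmed; the helper names are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot_fp16_accumulate(a, b):
    """Dot product with FP16 inputs, FP16 products, and an FP16 running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + product)
    return acc


def dot_fp32_accumulate(a, b):
    """Dot product with FP16 inputs and an FP32 running sum (mixed precision)."""
    acc = 0.0
    for x, y in zip(a, b):
        # The product of two FP16 values is exact in a Python float.
        acc = to_fp32(acc + to_fp16(x) * to_fp16(y))
    return acc


ones = [1.0] * 4096
print("FP16 accumulator:", dot_fp16_accumulate(ones, ones))
print("FP32 accumulator:", dot_fp32_accumulate(ones, ones))
```

With an FP16 running sum the total stalls at 2048, because FP16 cannot represent 2049 and the addition rounds back down. The FP32 accumulator reaches the correct total of 4096 from the same FP16 inputs.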

Tensor Core Technology

Tensor Cores within the V100 accelerate matrix multiplications, a fundamental operation in deep learning algorithms. This feature boosts the processing of convolutional neural networks (CNNs), recurrent neural networks (RNNs), and other machine learning models, significantly reducing training times.

Memory Innovations with 32GB HBM2

The HPE Q9U37A Nvidia Tesla V100 is equipped with 32GB of HBM2 (High Bandwidth Memory 2), providing up to 900 GB/s of memory bandwidth. This memory configuration helps keep the GPU's compute units supplied with data in data-intensive applications, making the accelerator well suited to parallel workloads that demand rapid data access.
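One way to relate the 900 GB/s bandwidth figure to the compute peaks is a simple roofline estimate, in which a kernel's attainable throughput is the smaller of the compute peak and its arithmetic intensity multiplied by memory bandwidth. The sketch below uses only the numbers from the specification list; the roofline model is a simplification, and the function names are hypothetical.

```python
# Roofline-style estimate using the listed peak figures.
PEAK_FP32_GFLOPS = 15700.0
PEAK_FP64_GFLOPS = 7800.0
MEMORY_BANDWIDTH_GBS = 900.0


def ridge_point(peak_gflops, bandwidth_gbs=MEMORY_BANDWIDTH_GBS):
    """Arithmetic intensity (FLOP per byte) above which a kernel is compute-bound."""
    return peak_gflops / bandwidth_gbs


def attainable_gflops(flops_per_byte, peak_gflops, bandwidth_gbs=MEMORY_BANDWIDTH_GBS):
    """Upper bound on throughput for a kernel with the given arithmetic intensity."""
    return min(peak_gflops, flops_per_byte * bandwidth_gbs)


# FP32 vector add c[i] = a[i] + b[i]: 1 FLOP per 12 bytes moved (two loads, one store).
vector_add_intensity = 1.0 / 12.0
vector_add_bound = attainable_gflops(vector_add_intensity, PEAK_FP32_GFLOPS)

print(f"FP32 ridge point: {ridge_point(PEAK_FP32_GFLOPS):.1f} FLOP/byte")
print(f"FP64 ridge point: {ridge_point(PEAK_FP64_GFLOPS):.1f} FLOP/byte")
print(f"Vector add bound: {vector_add_bound:.0f} GFLOPS")
```

In this model a streaming kernel such as vector addition is limited by memory bandwidth to about 75 GFLOPS, far below the compute peak, which is why memory bandwidth matters as much as FLOPS for many data-intensive workloads.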

HBM2 and Large-Scale Data Handling

With its 900 GB/s of memory bandwidth, the V100 is well suited to the large datasets common in AI and HPC environments. Whether the workload is genomic data processing, weather modeling, or video rendering, the V100 provides fast data access and high computational throughput.

Integration with HPE Systems

The HPE Q9U37A is meticulously designed to integrate seamlessly with HPE servers and systems, offering enhanced reliability and compatibility. This integration is critical for enterprises seeking robust and scalable AI and HPC infrastructures.

Compatibility with HPE Apollo Systems

When paired with HPE Apollo systems, the Q9U37A provides optimized cooling, power efficiency, and workload management. These features are essential for managing intensive computational tasks in data centers.

HPE Apollo 6500 System

The HPE Apollo 6500 system, when combined with the Nvidia Tesla V100, delivers exceptional AI training and inference performance. Its high-density GPU configuration allows for scaling AI workloads efficiently.

Applications of the HPE Q9U37A Nvidia Tesla V100

This computational accelerator is suitable for various industries, including healthcare, finance, automotive, and scientific research. Its versatility ensures optimal performance across diverse applications.

Deep Learning and AI Training

From image recognition to natural language processing (NLP), the Tesla V100 accelerates the training of complex AI models, enabling faster time-to-market for AI-driven solutions.

AI-Powered Healthcare Solutions

In healthcare, the V100 aids in drug discovery, medical imaging analysis, and genomic sequencing. Its ability to process large datasets ensures accurate and timely results.

HPC in Scientific Research

The HPE Q9U37A is instrumental in simulations, modeling, and research activities. Scientists rely on its computational power for solving complex equations, simulating physical phenomena, and analyzing experimental data.

Astrophysics and Weather Prediction

Astrophysicists and meteorologists use the Tesla V100 to process astronomical data and model weather patterns. Its speed and efficiency support research into the universe and into weather and climate prediction.

Efficiency and Energy Optimization

The HPE Q9U37A Nvidia Tesla V100 is engineered with efficiency in mind. Its advanced design minimizes power consumption while maximizing output, making it a cost-effective solution for data centers and research facilities.

Energy-Efficient Design

By utilizing the Volta architecture’s energy-saving features, the V100 helps reduce operational costs. Its passive heatsink relies on the host server's airflow, and its performance per watt is an important factor in sustainable computing.

Cost Savings in Large-Scale Deployments

Enterprises deploying multiple units of the HPE Q9U37A can achieve significant cost savings through reduced energy consumption and maintenance requirements.
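For rough planning, performance per watt and yearly energy use can be estimated from the listed figures. The sketch below treats 300 W as the per-card draw under sustained load, which is an assumption; it covers the accelerators only and ignores the host server, cooling, and electricity pricing. The function names are hypothetical.

```python
# Performance-per-watt and energy arithmetic from the listed figures.
PEAK_FP32_GFLOPS = 15700.0
PEAK_FP64_GFLOPS = 7800.0
BOARD_POWER_W = 300.0       # assumption: card draws its full rated power under load
HOURS_PER_YEAR = 24 * 365


def gflops_per_watt(peak_gflops, watts=BOARD_POWER_W):
    """Peak throughput divided by board power."""
    return peak_gflops / watts


def annual_energy_kwh(n_cards, watts=BOARD_POWER_W, utilization=1.0):
    """Energy used by `n_cards` accelerators over one year, in kWh."""
    return n_cards * watts / 1000.0 * HOURS_PER_YEAR * utilization


print(f"FP32: {gflops_per_watt(PEAK_FP32_GFLOPS):.1f} GFLOPS/W")
print(f"FP64: {gflops_per_watt(PEAK_FP64_GFLOPS):.1f} GFLOPS/W")
print(f"8 cards, full load: {annual_energy_kwh(8):.0f} kWh/year")
print(f"8 cards, 50% load:  {annual_energy_kwh(8, utilization=0.5):.0f} kWh/year")
```

Multiplying the kWh result by a local electricity rate gives an energy cost estimate for the accelerators alone.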

Scalability for Future-Ready Infrastructure

The Q9U37A Nvidia Tesla V100 supports scaling, allowing organizations to expand their AI and HPC capabilities as their needs evolve. Its SXM2 module form factor and NVLink support allow additional accelerators to be added to compatible servers.

Multi-GPU Configurations

The Tesla V100 supports multi-GPU setups, enabling the construction of powerful computing clusters. This scalability is crucial for workloads that require extensive parallel processing.

NVLink for Enhanced Connectivity

With Nvidia’s NVLink technology, multiple GPUs can communicate directly at high speed. Nvidia rates the V100's NVLink interface at up to 300 GB/s of aggregate bandwidth, compared with roughly 32 GB/s for a PCIe 3.0 x16 connection. NVLink improves multi-GPU efficiency, especially in AI model training and HPC simulations.
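To give a sense of what interconnect bandwidth means for multi-GPU training, the sketch below estimates the time for a ring all-reduce of gradient data, a common pattern for synchronizing GPUs. It is an idealized, bandwidth-only model that ignores latency, topology, and protocol overhead. The per-direction figures of 150 GB/s for NVLink and 16 GB/s for PCIe 3.0 x16 are assumptions based on published peak rates, and the function name is hypothetical.

```python
# Idealized ring all-reduce time: each GPU sends 2 * (N - 1) / N of the payload.
NVLINK_GBS_PER_DIRECTION = 150.0   # assumption: half of the 300 GB/s aggregate figure
PCIE3_X16_GBS_PER_DIRECTION = 16.0  # assumption: approximate PCIe 3.0 x16 peak


def ring_allreduce_seconds(payload_gb, n_gpus, link_gbs):
    """Bandwidth-only estimate of ring all-reduce time for `payload_gb` per GPU."""
    if n_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic_gb = 2.0 * (n_gpus - 1) / n_gpus * payload_gb
    return traffic_gb / link_gbs


payload_gb = 1.0  # hypothetical example: 1 GB of gradients per training step
nvlink_s = ring_allreduce_seconds(payload_gb, 8, NVLINK_GBS_PER_DIRECTION)
pcie_s = ring_allreduce_seconds(payload_gb, 8, PCIE3_X16_GBS_PER_DIRECTION)

print(f"NVLink: {nvlink_s * 1000:.1f} ms per all-reduce")
print(f"PCIe:   {pcie_s * 1000:.1f} ms per all-reduce")
print(f"Ratio:  {pcie_s / nvlink_s:.1f}x")
```

Real systems rarely reach these peak rates, and the achievable NVLink bandwidth between any two GPUs depends on how the server wires its links, so the output is best read as an upper bound on the benefit.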