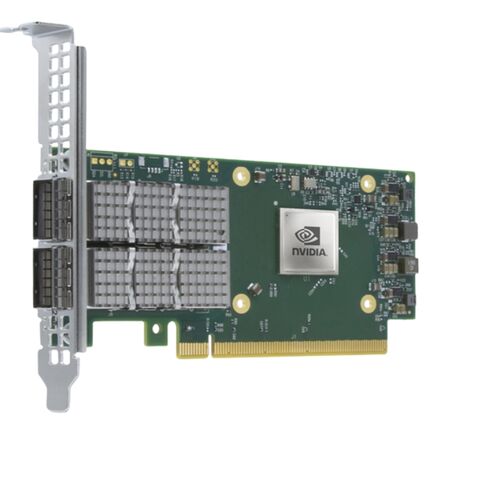

MCX623436MS-CDAB NVIDIA ConnectX-6 Dx 100GbE Dual-Port Adapter Card

Brief Overview of MCX623436MS-CDAB

NVIDIA MCX623436MS-CDAB ConnectX-6 Dx EN adapter card: 100GbE, OCP 3.0, with host management, dual-port QSFP56, Multi-Host or Socket Direct, PCIe 4.0 x16, Secure Boot, No Crypto, thumbscrew (pull tab) bracket. New, sealed in box (NIB), with a 3-year warranty.

- Free Ground Shipping

- Min. 6-month Replacement Warranty

- Genuine/Authentic Products

- Easy Return and Exchange

- Different Payment Methods

- Best Price

- We Guarantee Price Matching

- Tax-Exempt Facilities

- 24/7 Live Chat, Phone Support

- Visa, MasterCard, Discover, and Amex

- JCB, Diners Club, UnionPay

- PayPal, ACH/Bank Transfer (11% Off)

- Apple Pay, Amazon Pay, Google Pay

- Buy Now, Pay Later - Affirm, Afterpay

- GOV/EDU/Institutions PO's Accepted

- Invoices

- Deliver Anywhere

- Express Delivery in the USA and Worldwide

- Ship to APO/FPO

- For USA - Free Ground Shipping

- Worldwide - from $30

NVIDIA MCX623436MS-CDAB Network Adapter – Enterprise-Grade Performance Redefined

Brand Identity and Product Classification

- Manufacturer: NVIDIA

- Model Identifier: MCX623436MS-CDAB

- Category: High-Speed Server Network Interface Card (NIC)

Detailed Hardware Specifications

Compact Structural Design

Designed in a compact Small Form Factor (SFF) layout adhering to OCP 3.0 standards, the MCX623436MS-CDAB fits seamlessly into high-density server environments. The dimensions are precisely 4.52 inches by 2.99 inches (115mm x 76mm), offering space efficiency for constrained enclosures.

Connector Interface and Retention

This advanced network adapter is equipped with dual QSFP56 Ethernet ports, supporting both copper and optical cabling. A secure thumbscrew-based retention system with a pull tab bracket ensures quick installation and reliable physical stability under intensive operations.

Multi-Host and Socket Direct Support

Featuring innovative connectivity options such as multi-host and socket direct configurations, the device enables flexible deployment in complex topologies, optimizing inter-node communication and throughput.

Security Enhancements

For enterprises concerned with infrastructure security, the card ships with Secure Boot enabled. This is the No Crypto variant, so its hardware cryptographic offloads are disabled, which suits deployments subject to encryption export or compliance restrictions.

Protocol Versatility and Ethernet Compatibility

Robust Range of Supported Ethernet Standards

The MCX623436MS-CDAB network card is compatible with an extensive selection of Ethernet technologies. This includes both legacy and modern protocols across a broad spectrum of bandwidth tiers.

- High-speed: 100GBASE-CR4/KR4/SR4, 100GBASE-CR2/KR2/SR2

- Mid-tier: 50GBASE-R2/R4, 40GBASE-CR4/KR4/SR4/LR4/ER4/R2

- Standard: 25GBASE-R, 20GBASE-KR2, 10GBASE-LR/ER/CR/KR/CX4, 10GBASE-SR

- Legacy: 1000BASE-KX/CX, SGMII

Adaptable Data Transmission Rates

The adapter delivers flexible speed support ranging from 1Gbps to 100Gbps, making it a versatile solution for both legacy infrastructure and next-gen data centers.

PCIe 4.0 Bus Compatibility

Built for the high-bandwidth demands of modern networks, the card connects via a PCI Express Gen 4.0 interface utilizing 16 lanes, with SerDes operating at 16.0GT/s. Backward compatibility with Gen 3.0, 2.0, and 1.1 offers deployment agility across various server generations.
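As a rough check that a Gen 4.0 x16 link can carry both 100GbE ports at line rate, the arithmetic can be sketched as follows. This is a back-of-the-envelope estimate only: it accounts for the 128b/130b line encoding but ignores packet-level protocol overhead, and the function names are illustrative.

```python
# Rough PCIe vs. Ethernet bandwidth comparison (one direction).
# Ignores TLP/DLLP protocol overhead, so real-world figures are lower.

GEN4_GT_PER_S = 16.0      # per-lane signalling rate for PCIe Gen 4.0
LANES = 16                # x16 link
ENCODING = 128 / 130      # 128b/130b line encoding used by PCIe Gen 3.0 and later


def pcie_gbytes_per_s(gt_per_s: float, lanes: int, encoding: float = ENCODING) -> float:
    """Usable link bandwidth in GB/s for one direction."""
    return gt_per_s * lanes * encoding / 8  # 8 bits per byte


def ethernet_gbytes_per_s(ports: int, gbps_per_port: float) -> float:
    """Aggregate Ethernet line rate in GB/s for one direction."""
    return ports * gbps_per_port / 8


pcie = pcie_gbytes_per_s(GEN4_GT_PER_S, LANES)
eth = ethernet_gbytes_per_s(ports=2, gbps_per_port=100)

print(f"PCIe Gen4 x16: {pcie:.1f} GB/s per direction")
print(f"2 x 100GbE:    {eth:.1f} GB/s per direction")
print(f"Headroom:      {pcie - eth:.1f} GB/s")
```

Under these assumptions the link offers about 31.5 GB/s per direction against 25 GB/s for two saturated 100GbE ports. In a Gen 3.0 x16 slot the same calculation with 8.0 GT/s gives roughly half that, which would not cover both ports at full rate.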

Electrical Characteristics and Efficiency

Voltage Requirements

- Power Rails: 3.3V AUX and 12V

Typical Energy Consumption

- Passive Cable - Active Use: ~19W

- Passive Cable - Standby Mode: ~5.7W

Maximum Power Draw

- Maximum Active Load: 26.6W

- Idle State: 10W

- QSFP56 Port Capacity: Supports up to 5W of power output

Energy Efficiency Consideration

Engineered for minimal power waste, the device aligns with green IT initiatives, minimizing consumption during inactive states without impacting performance during peak load.

Operational Environment Tolerance

Temperature Resilience

- Active Use: 0°C to 55°C

- Storage Range: -40°C to 70°C

Humidity Compatibility

- Operational Humidity: 10% – 85% RH (non-condensing)

- Storage Humidity: 10% – 90% RH (non-condensing)

Altitude Support

The network interface card is rated for operation at altitudes up to 3,050 meters, making it suitable for high-altitude data center locations.
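The operating limits listed above can be captured in a small site-survey check. The sketch below simply encodes the published operating ranges; the class and function names are hypothetical and not part of any NVIDIA tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingLimits:
    """Operating ranges taken from the specification list above."""
    temp_c: tuple = (0.0, 55.0)
    humidity_pct: tuple = (10.0, 85.0)   # non-condensing
    max_altitude_m: float = 3050.0


def violations(temp_c: float, humidity_pct: float, altitude_m: float,
               limits: OperatingLimits = OperatingLimits()) -> list:
    """Return human-readable limit violations; an empty list means all clear."""
    problems = []
    t_lo, t_hi = limits.temp_c
    h_lo, h_hi = limits.humidity_pct
    if not t_lo <= temp_c <= t_hi:
        problems.append(f"temperature {temp_c} C outside {t_lo} to {t_hi} C")
    if not h_lo <= humidity_pct <= h_hi:
        problems.append(f"humidity {humidity_pct}% outside {h_lo} to {h_hi}%")
    if altitude_m > limits.max_altitude_m:
        problems.append(f"altitude {altitude_m} m above {limits.max_altitude_m} m")
    return problems


print(violations(temp_c=25, humidity_pct=40, altitude_m=500))
print(violations(temp_c=60, humidity_pct=90, altitude_m=4000))
```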

Compliance and Environmental Standards

RoHS Compliance

As part of NVIDIA’s commitment to sustainability and safety, the MCX623436MS-CDAB is fully RoHS compliant, ensuring that hazardous substances are restricted in its manufacturing.

Global Certification Standards

This product adheres to stringent international regulatory requirements, providing peace of mind for global enterprises and ensuring eligibility for deployment in various regions.

Advanced Feature Set for Next-Gen Infrastructure

Multi-Host and Socket-Direct Capabilities

This card supports configurations that allow direct memory and processor access across multiple hosts, enabling efficient interconnectivity in hyper-converged infrastructure and HPC clusters.

Enhanced Boot Protection

With secure boot enabled at the firmware level, system integrity is preserved from the first initialization, making it suitable for compliance-sensitive environments and regulated industries.

Crypto Disabled for Security Protocol Alignment

Certain cryptographic capabilities are disabled by design, catering to specific regulatory or certification needs such as those required by government and defense entities.

Ideal Use Case Scenarios

Cloud Infrastructure

Designed for high-density data centers and cloud service providers, this adapter supports dynamic workloads and elastically scalable environments by offering ultra-low latency and high throughput.

AI and HPC Workloads

With bandwidth capabilities reaching 100Gbps and low jitter, the MCX623436MS-CDAB is ideal for AI inference clusters, deep learning models, and scientific computing.

Virtualization and SDN Support

The adapter is optimized for software-defined networking environments, supporting network overlays and hardware-accelerated packet processing for virtualized workloads over its PCIe Gen4 interface.

Installation and Deployment Flexibility

Form Factor Benefits

The compact SFF OCP 3.0 profile ensures compatibility with modern rack-mounted and blade server architectures, conserving space while maintaining peak operational output.

Quick Deployment Features

A tool-less installation with a thumbscrew retention bracket minimizes downtime during hardware refresh cycles or node scaling operations.

Passive and Active Cabling Options

Supports both active optical cables (AOC) and direct attach copper cables (DAC), providing deployment versatility depending on distance and energy efficiency needs.

High-Speed Networking with NVIDIA ConnectX-6 Dx EN Adapter Cards

The evolution of enterprise networking is characterized by unprecedented demand for faster throughput, lower latency, and secure data exchange. The MCX623436MS-CDAB NVIDIA ConnectX-6 Dx EN Adapter Card exemplifies this transition, delivering 100GbE Ethernet performance over PCIe 4.0 with advanced security and virtualization features. Part of the high-performance ConnectX-6 Dx series and optimized for next-gen data centers, this adapter combines dual-port QSFP56 connectivity with Multi-Host and Socket Direct capabilities for storage, compute, and cloud infrastructure.

Overview of ConnectX-6 Dx Adapter Architecture

The ConnectX-6 Dx EN Adapter series is engineered to meet the rigorous demands of modern enterprise networks. It supports up to 100GbE per port over a PCIe Gen4 x16 interface, which doubles the per-lane host bandwidth of PCIe Gen3. The MCX623436MS-CDAB stands out with its dual-port QSFP56 design, which enables high-density, energy-efficient interconnects for scale-out server and storage applications.

Dual QSFP56 Ports for Redundant 100GbE Connectivity

The inclusion of dual QSFP56 ports allows for redundant 100GbE links, ideal for mission-critical workloads that demand high availability and failover support. QSFP56 provides flexibility to deploy breakout cables, allowing one port to split into 2x50GbE or 4x25GbE, based on network design needs. This enhances adaptability in hybrid networking environments where scaling throughput dynamically is essential.
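To make the breakout options concrete, the sketch below enumerates the logical links each mode would yield on a dual-port card. The mode table and the `portN/M` naming are illustrative assumptions; actual breakout support depends on the cables, switch, and firmware in use.

```python
# Hypothetical breakout table for one 100GbE QSFP56 port:
# mode name -> (logical links per port, Gb/s per link)
BREAKOUT_MODES = {
    "1x100G": (1, 100),
    "2x50G": (2, 50),
    "4x25G": (4, 25),
}


def logical_links(ports: int, mode: str) -> list[tuple[str, int]]:
    """Expand physical ports into (name, speed_gbps) logical links."""
    count, speed = BREAKOUT_MODES[mode]
    return [(f"port{p}/{i}", speed) for p in range(ports) for i in range(count)]


for mode in BREAKOUT_MODES:
    links = logical_links(ports=2, mode=mode)
    total = sum(speed for _, speed in links)
    print(f"{mode}: {len(links)} links, {total} Gb/s aggregate")
```

Every mode keeps the same 200 Gb/s aggregate across the two ports; breakout trades per-link speed for a higher link count.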

PCIe Gen4 x16 Interface: Accelerated Data Flow

The PCI Express 4.0 x16 bus interface runs at 16 GT/s per lane, which gives roughly 32 GB/s in each direction (about 64 GB/s bidirectional) across its 16 lanes. This bandwidth is critical for large-scale data processing applications, particularly in artificial intelligence (AI), machine learning (ML), and real-time analytics. With PCIe Gen4, bottlenecks associated with high-volume network traffic are substantially reduced.

OCP 3.0 Form Factor for Next-Gen Server Integration

The Open Compute Project (OCP) 3.0 form factor adopted by the MCX623436MS-CDAB ensures tight integration with next-generation servers and cloud platforms. It offers enhancements such as improved thermal efficiency, tool-less installation, and better front-panel accessibility. The adapter is equipped with a thumbscrew (pull tab) bracket that ensures easy serviceability without tools, minimizing downtime during maintenance.

Thermal Design Optimization

The OCP 3.0 design incorporates advanced airflow management techniques, allowing the ConnectX-6 Dx adapter to maintain stable operation even under intensive workloads. This thermal efficiency is paramount in dense server environments where component reliability directly impacts operational performance.

Ease of Maintenance with Pull Tab Bracket

The adapter’s thumbscrew mechanism allows for seamless insertion and removal without the need for external tools. This feature significantly improves data center maintainability, reducing technician effort and service time, especially during component swaps or diagnostics.

Advanced Network Virtualization Capabilities

The MCX623436MS-CDAB supports state-of-the-art virtualization technologies, including Single Root I/O Virtualization (SR-IOV), VirtIO, and NVGRE/VXLAN offloads. These features reduce overhead on host CPUs by enabling efficient packet processing within virtualized environments, thus maximizing VM density and application responsiveness.

SR-IOV for Optimal Virtual Machine Performance

SR-IOV allows multiple virtual functions (VFs) to share a single physical adapter, enabling VMs to bypass the hypervisor for direct access to hardware-level resources. This dramatically reduces latency and improves I/O performance, critical for cloud hosting, virtual desktop infrastructure (VDI), and container-based deployments.
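On Linux, virtual functions are typically created through the kernel's standard PCI sysfs attributes `sriov_totalvfs` and `sriov_numvfs`. The following is a minimal sketch of that flow, assuming SR-IOV is already enabled in the adapter firmware and the platform BIOS; the interface name used in the usage comment is a placeholder, and the `sysfs_root` parameter exists only so the logic can be exercised without hardware.

```python
from pathlib import Path


def _device_dir(iface: str, sysfs_root: str = "/sys") -> Path:
    """PCI device directory behind a network interface."""
    return Path(sysfs_root) / "class" / "net" / iface / "device"


def max_vfs(iface: str, sysfs_root: str = "/sys") -> int:
    """Maximum number of VFs the device reports it can expose."""
    return int((_device_dir(iface, sysfs_root) / "sriov_totalvfs").read_text())


def set_vfs(iface: str, count: int, sysfs_root: str = "/sys") -> None:
    """Request `count` virtual functions (requires root on a real system)."""
    limit = max_vfs(iface, sysfs_root)
    if not 0 <= count <= limit:
        raise ValueError(f"{count} VFs requested, device supports 0..{limit}")
    numvfs = _device_dir(iface, sysfs_root) / "sriov_numvfs"
    current = int(numvfs.read_text())
    if current == count:
        return
    # The kernel rejects changing one non-zero VF count to another,
    # so reset to 0 first when VFs already exist.
    if current != 0:
        numvfs.write_text("0")
    numvfs.write_text(str(count))


# Example (placeholder interface name, run as root):
#   set_vfs("enp1s0f0", 8)
```

Each created VF then appears as its own PCI function that can be passed through to a VM or container.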

Offloads for NVGRE, VXLAN, and GENEVE Tunneling

Hardware offloads for encapsulated traffic such as NVGRE, VXLAN, and GENEVE reduce CPU utilization by handling packet segmentation, checksum, and reassembly at the NIC level. This enhances throughput for multi-tenant and cloud-native environments that rely on overlay networking to segment traffic securely and efficiently.
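For a sense of what the NIC handles when it offloads an overlay, the sketch below builds the 8-byte VXLAN header defined in RFC 7348 and works out how much room the encapsulation takes from the underlay MTU. It assumes an IPv4 underlay without VLAN tags or IP options; the function names are illustrative.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned destination port for VXLAN

# Header sizes in bytes (IPv4 underlay, no VLAN tag, no IP options).
ETH_HDR, IPV4_HDR, UDP_HDR, VXLAN_HDR = 14, 20, 8, 8


def vxlan_header(vni: int) -> bytes:
    """Build the VXLAN header: flags(8) reserved(24) | VNI(24) reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # I flag set: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def inner_ip_mtu(underlay_ip_mtu: int) -> int:
    """Largest inner IP packet that fits in one underlay IP packet."""
    # Outer IP + UDP + VXLAN headers plus the inner Ethernet header
    # all travel inside the underlay IP MTU.
    return underlay_ip_mtu - (IPV4_HDR + UDP_HDR + VXLAN_HDR + ETH_HDR)


print(vxlan_header(42).hex())
print(inner_ip_mtu(1500))
print(inner_ip_mtu(9000))
```

With a standard 1500-byte underlay the tenant network is left with a 1450-byte IP MTU, which is one reason overlay deployments often raise the underlay MTU.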

Secure Boot and Host Management Functions

Security is deeply integrated into the MCX623436MS-CDAB design. The adapter supports Secure Boot and hardware root of trust mechanisms, ensuring that only authenticated firmware is executed during initialization. The card also includes comprehensive host management features for monitoring, telemetry, and remote configuration.

Secure Boot without Crypto Module

The "No Crypto" configuration of this card suits export-controlled environments or regions with encryption restrictions, because the adapter's hardware encryption offloads are disabled. Secure Boot remains available: it verifies firmware signatures before execution, helping to prevent malicious code from running at boot time.

Telemetry and Diagnostics

Advanced telemetry enables real-time insight into adapter status, including temperature, link integrity, packet statistics, and utilization metrics. These insights help IT administrators identify bottlenecks, predict failures, and ensure optimal performance of networking workloads.
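One simple way to turn packet statistics into a utilization figure on Linux is to sample the kernel's per-interface byte counters under `/sys/class/net/<iface>/statistics/`. The sketch below shows the calculation; the 100 Gb/s default and the interface name in the usage comment are assumptions, and adapter-specific counters (for example temperature) are not covered here.

```python
from pathlib import Path


def read_counter(iface: str, name: str, sysfs_root: str = "/sys") -> int:
    """Read one kernel interface counter, e.g. 'rx_bytes' or 'tx_bytes'."""
    path = Path(sysfs_root) / "class" / "net" / iface / "statistics" / name
    return int(path.read_text())


def utilization_pct(bytes_before: int, bytes_after: int,
                    interval_s: float, link_gbps: float = 100.0) -> float:
    """Average link utilization (percent) between two counter samples."""
    bits = (bytes_after - bytes_before) * 8
    return 100.0 * bits / (interval_s * link_gbps * 1e9)


# Example (placeholder interface name):
#   import time
#   a = read_counter("enp1s0f0", "rx_bytes")
#   time.sleep(1.0)
#   b = read_counter("enp1s0f0", "rx_bytes")
#   print(f"{utilization_pct(a, b, 1.0):.1f}% of 100GbE")
```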

Socket Direct and Multi-host Support for HPC and Scale-out Applications

The MCX623436MS-CDAB supports both Socket Direct and Multi-Host modes. Socket Direct splits the adapter's PCIe interface so that each CPU socket in a dual-socket server has its own direct path to the network, which balances bandwidth and reduces cross-socket traffic. Multi-Host mode allows multiple hosts to share the same adapter, which is essential in hyper-converged and disaggregated systems.

Socket Direct for NUMA-aware Bandwidth Distribution

By giving each CPU socket its own PCIe connection to the adapter, Socket Direct minimizes the latency caused by NUMA (Non-Uniform Memory Access) imbalance and inter-socket traffic. This feature is valuable in performance-sensitive environments such as high-performance computing (HPC), in-memory databases, and real-time financial applications.
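To benefit from NUMA-aware placement, software needs to know which NUMA node the operating system associates with each network interface. On Linux this is exposed through standard sysfs attributes; the sketch below reads them. The interface name in the usage comment is a placeholder, and `sysfs_root` is only there so the helpers can be tried without hardware.

```python
from pathlib import Path


def numa_node(iface: str, sysfs_root: str = "/sys") -> int:
    """NUMA node of the PCI device behind `iface` (-1 if none is reported)."""
    path = Path(sysfs_root) / "class" / "net" / iface / "device" / "numa_node"
    return int(path.read_text())


def cpus_of_node(node: int, sysfs_root: str = "/sys") -> str:
    """CPU list string for a NUMA node, e.g. '0-15,32-47'."""
    path = Path(sysfs_root) / "devices" / "system" / "node" / f"node{node}" / "cpulist"
    return path.read_text().strip()


# Example (placeholder interface name):
#   node = numa_node("enp1s0f0")
#   if node >= 0:
#       print(f"pin network threads to CPUs {cpus_of_node(node)}")
```

Pinning receive queues and application threads to the CPUs of the reported node keeps packet buffers in local memory.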

Multi-host for Resource-Consolidated Infrastructure

Multi-host capability allows one adapter to serve multiple compute nodes, reducing hardware footprint and improving infrastructure efficiency. This is particularly advantageous in blade systems, cloud-scale deployments, and composable disaggregated architectures where shared I/O is a requirement.

RDMA Support: RoCE v2 for Low-latency Communication

Remote Direct Memory Access (RDMA) over Converged Ethernet version 2 (RoCE v2) enables zero-copy networking and direct data transfers between servers and storage nodes, eliminating the overhead of CPU and OS intervention. This improves performance in latency-sensitive use cases such as NVMe over Fabrics (NVMe-oF), real-time analytics, and distributed AI training.

Performance Optimization for NVMe-oF

RDMA acceleration is a foundational technology for NVMe-oF, ensuring rapid and deterministic access to remote storage without the latency penalties of traditional TCP/IP stacks. By offloading transport to the NIC hardware, RoCE v2 enhances performance, scalability, and power efficiency for storage-class memory systems.

Efficiency in AI and HPC Workflows

RoCE v2 support significantly reduces data transfer latency in distributed AI model training and HPC simulations. These workloads typically require high throughput and tightly synchronized memory access, which RDMA facilitates by minimizing latency and jitter.

Compatibility with Major Server Platforms

The MCX623436MS-CDAB adapter is fully compatible with leading enterprise server vendors, including HPE, Dell EMC, Lenovo, Cisco UCS, and Supermicro. It adheres to industry standards for form factor, PCIe compliance, and interface protocols, ensuring smooth integration into new or existing data center environments.

Support for Linux and Windows Server Environments

Extensive driver support across major enterprise operating systems—including Red Hat Enterprise Linux (RHEL), Ubuntu, SUSE Linux Enterprise Server (SLES), and Windows Server—ensures flexibility for hybrid and multicloud deployments.

Management with DMTF Redfish and OpenBMC

Advanced manageability is enabled via standards such as Redfish APIs and OpenBMC interfaces. These enable secure, scalable management of hardware inventory, power states, firmware versions, and telemetry feeds across distributed infrastructure.
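As an illustration of Redfish-based inventory, the sketch below builds the standard `NetworkAdapters` collection path for a chassis and extracts member links from a collection response. The sample payload, chassis ID, and adapter ID are made up for the example, and whether a given server's BMC exposes this card under that collection is an assumption to verify on the target platform.

```python
import json


def adapter_collection_path(chassis_id: str) -> str:
    """Redfish path of the NetworkAdapters collection for one chassis."""
    return f"/redfish/v1/Chassis/{chassis_id}/NetworkAdapters"


def member_uris(collection: dict) -> list:
    """Extract the '@odata.id' links from a Redfish collection response."""
    return [m["@odata.id"] for m in collection.get("Members", [])]


# Made-up response body of the kind a BMC might return for the collection.
SAMPLE = json.loads("""
{
  "@odata.id": "/redfish/v1/Chassis/1/NetworkAdapters",
  "Members@odata.count": 1,
  "Members": [
    {"@odata.id": "/redfish/v1/Chassis/1/NetworkAdapters/ocp0"}
  ]
}
""")

print(adapter_collection_path("1"))
print(member_uris(SAMPLE))
```

Each returned link can then be fetched over HTTPS from the BMC to read the adapter's model, firmware version, and port details.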

Use Cases and Deployment Scenarios

The MCX623436MS-CDAB card is ideal for a wide variety of enterprise and service provider workloads, ranging from hyperscale cloud infrastructure to edge computing and 5G networks. Its versatility, bandwidth, and rich feature set make it a preferred choice for:

Hyperscale Cloud Networking

Cloud service providers require adaptable, secure, and high-throughput networking to handle multi-tenant workloads, container orchestration, and microservices. The ConnectX-6 Dx card provides the virtualization and bandwidth headroom to support Kubernetes-based platforms and software-defined networking (SDN).

Edge and Telco 5G Infrastructure

With its small form factor and high-speed capability, this adapter is also suitable for edge servers and telco deployments, including virtualized RAN (vRAN) and mobile core applications. Its low power envelope and robust packet processing ensure optimal operation in remote, bandwidth-sensitive environments.

Enterprise Storage and Hyper-Converged Infrastructure (HCI)

Storage backbones leveraging NVMe-oF or iSCSI can benefit from this card’s high throughput and RDMA capabilities. It accelerates I/O paths for all-flash arrays (AFAs) and software-defined storage solutions, increasing IOPS and reducing response times in data-intensive workloads.

AI/ML and HPC Cluster Interconnects

In AI and HPC cluster environments, where computational nodes exchange large datasets at high speed, the ConnectX-6 Dx card serves as a high-bandwidth, low-latency link. Its compatibility with GPUDirect and deep learning frameworks improves training efficiency and reduces inference latency.