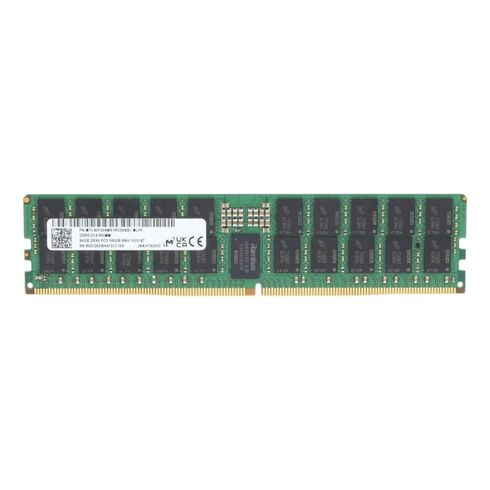

MTC40F204WS1RC64BC1 Micron 96GB 6400MHz PC5-51200 Memory Module

- Free Ground Shipping

- Min. 6-month Replacement Warranty

- Genuine/Authentic Products

- Easy Return and Exchange

- Different Payment Methods

- Best Price

- We Guarantee Price Matching

- Tax-Exempt Facilities

- 24/7 Live Chat, Phone Support

- Visa, MasterCard, Discover, and Amex

- JCB, Diners Club, UnionPay

- PayPal, ACH/Bank Transfer (11% Off)

- Apple Pay, Amazon Pay, Google Pay

- Buy Now, Pay Later - Affirm, Afterpay

- GOV/EDU/Institutions PO's Accepted

- Invoices

- Deliver Anywhere

- Express Delivery in the USA and Worldwide

- Ship to APO/FPO

- For USA - Free Ground Shipping

- Worldwide - from $30

Highlights of Micron MTC40F204WS1RC64BC1 96GB DDR5 RAM

The Micron MTC40F204WS1RC64BC1 96GB DDR5 6400MHz PC5-51200 ECC Registered Dual Rank RDIMM is engineered for enterprise servers and high-performance workstation systems. Leveraging advanced DDR5 SDRAM technology, this memory module delivers exceptional speed, data integrity, and reliability for mission-critical workloads.

General Information

- Manufacturer: Micron

- Part Number: MTC40F204WS1RC64BC1

- Product Type: Memory Module

- Sub Type: DDR5 6400MHz PC5-51200

Technical Specifications

- Memory Technology: DDR5 SDRAM

- Module Configuration: Dual Rank x4

- Signal Processing: Registered (RDIMM)

- Bus Speed: 6400 MT/s (DDR5-6400, listed as 6400MHz) / PC5-51200

- CAS Latency: CL52

Physical Characteristics

- Form Factor: 288-Pin RDIMM

- Shipping Dimensions: 1.00" (H) x 6.75" (D)

- Shipping Weight: 0.20 lbs

ECC and Registered Features for Data Integrity

- ECC for automatic detection and correction of memory errors

- Registered design for stable signal processing

- Optimized for continuous enterprise operation

Compatibility

Supported System Types

- Enterprise-Class Servers

- Professional Workstations

- Data Center Infrastructures

- Virtualization and Cloud Computing Platforms

Memory and Motherboard Requirements

- DDR5-compatible server or workstation motherboard

- 288-Pin RDIMM memory slots

- Support for ECC Registered (RDIMM) modules

- Platform support for 6400 MT/s (PC5-51200) memory speed

Overview of Micron MTC40F204WS1RC64BC1 PC5-51200 ECC

The Micron MTC40F204WS1RC64BC1 96GB DDR5 6400MHz PC5-51200 ECC Registered Dual Rank 288-Pin Memory Module is an enterprise-grade memory solution designed for modern server architectures, high-performance computing (HPC), virtualization, and cloud environments. It belongs to the DDR5 registered memory category and combines high capacity, high bandwidth, and error-correcting capabilities. With 96GB of memory per DIMM and a data rate of 6400 MT/s, it is built to support data-intensive workloads, large-scale virtualization, in-memory computing, and mission-critical applications that require consistent reliability and high throughput.

DDR5 Memory Architecture and Technological Advancements

DDR5 is a significant step beyond DDR4, offering higher bandwidth, improved power efficiency, and greater scalability. The Micron 96GB DDR5 6400MHz RDIMM builds on these advances to deliver higher data throughput and better system responsiveness under load. Each DDR5 DIMM provides two independent 32-bit data subchannels, on-die error correction (ECC), and more bank groups than DDR4, all of which increase parallelism and efficiency in server workloads.

Dual Independent Subchannels for Enhanced Performance

Each DDR5 DIMM features two independent subchannels, each with a 32-bit data path (plus ECC bits on ECC modules), which allow memory controllers to manage data more efficiently. Because the two subchannels accept commands separately, the memory system can service two requests concurrently, which improves throughput and helps hold down access latency when many requests are queued. This is particularly important in multi-threaded enterprise workloads, high-density virtualization environments, and high-performance computing clusters where multiple data streams must be processed simultaneously.
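The arithmetic behind the subchannel design can be sketched as follows. This is a minimal illustration, assuming the standard burst lengths of 8 for DDR4 and 16 for DDR5; the function name `bytes_per_burst` is made up for this example. It shows that one DDR5 subchannel burst delivers the same 64 bytes as a full-width DDR4 burst, so each subchannel can fill a typical 64-byte CPU cache line on its own.

```python
def bytes_per_burst(data_width_bits: int, burst_length: int) -> int:
    """Bytes delivered by one burst on a data path of the given width."""
    return data_width_bits // 8 * burst_length


# DDR4: one 64-bit channel per DIMM, burst length 8 (assumed standard value).
ddr4_channel = bytes_per_burst(64, 8)

# DDR5: two 32-bit subchannels per DIMM, burst length 16 (assumed standard value).
ddr5_subchannel = bytes_per_burst(32, 16)

print(ddr4_channel, ddr5_subchannel)  # 64 64
```

Since each subchannel completes a cache-line transfer independently, a DDR5 DIMM can have two such transfers in progress at once.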

On-Die ECC and Data Integrity

DDR5 memory includes on-die error correction at the DRAM chip level, which detects and corrects single-bit errors inside the chip before the data leaves it. Module-level ECC on the RDIMM adds a second layer: extra ECC bits travel with the data so the memory controller can detect and correct errors that occur in transit or that on-die ECC does not cover. Together these layers protect data integrity in mission-critical environments such as financial systems, healthcare computing, and scientific research clusters, where memory errors could lead to data corruption or downtime.
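To make the idea of single-bit correction concrete, here is a toy sketch using a classic Hamming(7,4) code. This is an assumption chosen for illustration only: real DDR5 on-die and module-level ECC use much wider codes, and the function names below are hypothetical. The principle is the same, though: parity bits stored alongside the data let the decoder locate and flip back a single corrupted bit.

```python
def hamming74_encode(data):
    """Encode 4 data bits into a 7-bit codeword (bit positions 1..7)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_correct(codeword):
    """Return (data_bits, error_position); position 0 means no error found."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s4  # syndrome equals the 1-based error position
    if position:
        c[position - 1] ^= 1  # flip the corrupted bit back
    return [c[2], c[4], c[5], c[6]], position


word = [1, 0, 1, 1]
stored = hamming74_encode(word)

corrupted = list(stored)
corrupted[4] ^= 1  # simulate a single-bit flip at position 5

recovered, where = hamming74_correct(corrupted)
print(recovered == word, where)  # True 5
```

A code like this corrects any single flipped bit per codeword; detecting or correcting multi-bit errors requires stronger codes, which is one reason server memory layers several protection mechanisms.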

6400MHz High-Speed Memory and PC5-51200 Bandwidth

The Micron MTC40F204WS1RC64BC1 runs at a data rate of 6400 MT/s (commonly listed as 6400MHz), which corresponds to its PC5-51200 rating: a peak theoretical bandwidth of 51.2GB/s per DIMM across the 64-bit data path. This bandwidth matters for workloads that involve large datasets, real-time analytics, AI training, and database operations. Higher memory throughput reduces system bottlenecks and helps keep CPU cores supplied with data during memory-intensive tasks.
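The 51.2GB/s figure follows directly from the data rate and the width of the data path. A minimal sketch of that calculation, assuming a 64-bit data path (ECC bits excluded) and decimal gigabytes; the function name `peak_bandwidth_gbs` is illustrative:

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, data_width_bits: int = 64) -> float:
    """Peak theoretical bandwidth in GB/s (decimal) for one DIMM."""
    bytes_per_transfer = data_width_bits // 8
    # Mega-transfers/s times bytes per transfer gives MB/s; divide by 1000 for GB/s.
    return transfer_rate_mts * bytes_per_transfer / 1000


print(peak_bandwidth_gbs(6400))  # 51.2
```

This is a theoretical ceiling. Sustained bandwidth in practice is lower because of refresh cycles, bank conflicts, and read/write turnarounds.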

Impact of High-Frequency Memory on Latency and Throughput

DDR5 modules carry higher CAS latency values (counted in clock cycles) than DDR4, but each cycle is shorter at the higher clock rate, so absolute latency stays in a similar range while peak bandwidth rises substantially. The dual-subchannel structure, additional bank groups, and longer burst length let memory controllers keep more requests in flight, which improves throughput and can lower effective latency under load in real-world applications.
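Converting CAS latency from clock cycles to nanoseconds makes the trade-off easier to see. The sketch below uses this module's CL52 at 6400 MT/s; the DDR4-3200 CL22 comparison point is an assumption picked as a common server configuration, not a figure from this listing, and `cas_latency_ns` is an illustrative name.

```python
def cas_latency_ns(cas_cycles: int, transfer_rate_mts: int) -> float:
    """CAS latency in nanoseconds for a double-data-rate module."""
    clock_mhz = transfer_rate_mts / 2  # DDR performs two transfers per clock cycle
    return cas_cycles * 1000 / clock_mhz


ddr5 = cas_latency_ns(52, 6400)  # this module: CL52 at 6400 MT/s
ddr4 = cas_latency_ns(22, 3200)  # assumed comparison: DDR4-3200 CL22

print(ddr5, ddr4)  # 16.25 13.75
```

Under these assumptions the DDR5 module's CAS latency is a few nanoseconds longer in absolute terms while its peak bandwidth is twice as high, which is why throughput-bound server workloads tend to benefit.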

Performance Benefits in Multi-Threaded Workloads

High-frequency memory is particularly advantageous for multi-threaded server workloads, where multiple processes access memory concurrently. The Micron 96GB DDR5 RDIMM provides rapid access to data, enabling faster execution of virtualization platforms, multi-tenant cloud environments, AI inference, and HPC computations. Its high bandwidth ensures smooth performance even when multiple processes compete for memory resources, supporting higher VM densities and better service levels.

Dual Rank Configuration for Enhanced Capacity

In a dual-rank RDIMM, the DRAM chips are organized into two ranks that share the module's data bus. Only one rank transfers data at a time, but the memory controller can interleave accesses so that one rank prepares its next operation (for example, opening a row) while the other is transferring data. This rank interleaving raises effective memory bandwidth, so dual-rank modules often outperform single-rank modules in server workloads with intensive memory access, such as large-scale virtualization, database processing, and HPC applications.

Comparing Dual Rank and Single Rank RDIMMs

Single-rank modules present all DRAM chips as one rank, limiting the potential for interleaving and reducing the effective throughput in some multi-threaded workloads. Dual-rank modules, like the Micron 96GB DDR5 RDIMM, provide improved interleaving, increased bandwidth utilization, and better performance under random memory access patterns. Enterprise servers with dual-rank RDIMMs can achieve higher total memory capacity while maintaining optimal system performance.

Registered ECC for Enterprise Reliability

Registered memory modules incorporate a register that buffers address and command signals between the memory controller and DRAM chips. This buffering reduces electrical load on the memory bus, allowing for stable operation with high-capacity modules. When combined with ECC, registered memory provides robust protection against memory errors, making it essential for mission-critical systems that demand high availability, data integrity, and long-term reliability.

96GB Memory Capacity for Scalable Enterprise Solutions

High-density 96GB modules enable organizations to maximize total system memory while minimizing DIMM slot utilization. This capacity is ideal for virtualization hosts, in-memory databases, AI and ML workloads, real-time analytics, and other memory-intensive enterprise applications. Deploying high-capacity RDIMMs reduces the number of DIMMs required to achieve target memory, simplifying server design, improving airflow, and lowering power consumption.

Optimizing Memory for Virtualization

In virtualized environments such as VMware vSphere, Microsoft Hyper-V, and KVM, memory capacity directly impacts the number of virtual machines that can be hosted per physical server. High-density 96GB RDIMMs allow administrators to allocate sufficient memory per VM while maintaining headroom for additional workloads. This improves VM performance, reduces the risk of memory overcommitment, and increases consolidation ratios in multi-tenant cloud infrastructures.
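A rough capacity-planning sketch shows how module size translates into VM count. All numbers other than the 96GB module size are hypothetical placeholders (DIMM count, memory reserved for the hypervisor, per-VM allocation), and the estimate assumes no memory overcommitment.

```python
def max_vms(dimm_count: int, dimm_gb: int = 96,
            reserved_gb: int = 64, vm_gb: int = 16) -> int:
    """Estimate how many VMs fit in host memory without overcommitment.

    reserved_gb and vm_gb are hypothetical planning inputs, not vendor figures.
    """
    total_gb = dimm_count * dimm_gb
    usable_gb = max(total_gb - reserved_gb, 0)  # leave headroom for the hypervisor
    return usable_gb // vm_gb


# Example: 16 DIMM slots populated with 96GB modules (1536GB total).
print(max_vms(16))  # 92
```

Adjusting `reserved_gb` and `vm_gb` to match a real environment gives a quick first estimate of consolidation ratio before detailed sizing.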

Database Performance and In-Memory Computing

Enterprise database systems, especially in-memory databases like SAP HANA, Oracle TimesTen, and SQL Server In-Memory OLTP, benefit from large, high-speed memory modules. High-capacity RDIMMs allow more data to be cached in memory, reducing disk I/O and improving query response times. The Micron 96GB DDR5 RDIMM supports real-time analytics, transactional workloads, and high-performance reporting with minimal latency, enabling organizations to process critical business data efficiently.

Lower Voltage and Improved Power Efficiency

DDR5 modules operate at a lower nominal voltage than DDR4 (1.1V versus 1.2V), improving energy efficiency while maintaining high performance. Voltage regulation also moves onto the module itself through a power management IC (PMIC), which helps minimize thermal stress and power waste. This efficiency is particularly beneficial in large-scale data centers with hundreds or thousands of DIMMs, helping organizations reduce operational costs and improve environmental sustainability.

Maintaining Thermal Stability in High-Density Configurations

High-density server configurations require memory modules capable of stable operation under constrained thermal conditions. The Micron 96GB RDIMM is designed to maintain performance across a wide temperature range, supporting high-density blade servers and rack-mounted clusters. Proper cooling, airflow management, and chassis design complement the module’s efficient thermal characteristics to prevent throttling and maintain reliability under continuous operation.

Enterprise Server Platforms

The Micron MTC40F204WS1RC64BC1 is intended for modern server platforms that support DDR5 registered memory, such as recent Intel Xeon and AMD EPYC processor generations. Check the server or motherboard vendor's memory support list to confirm that the platform accepts 96GB RDIMMs and runs them at 6400 MT/s. These platforms use DDR5's increased bandwidth, dual-subchannel architecture, and power efficiency to improve overall system performance. In a compatible server, firmware and BIOS read the module's configuration data and automatically set voltage, frequency, and timing.

Firmware and BIOS

Server BIOS and firmware play a critical role in achieving rated performance for high-speed DDR5 RDIMMs. BIOS updates can improve memory training, enhance voltage regulation, and optimize channel interleaving for dual-rank modules. Keeping servers on current firmware helps the Micron 96GB RDIMM operate reliably at its full 6400MT/s speed with full ECC support on platforms that support that speed.

Balanced Channel Population for Maximum Throughput

Memory channel balancing is essential to maximize the performance of multi-channel server architectures. Installing identical DDR5 RDIMMs across all channels ensures even data distribution, reduced latency, and optimal bandwidth utilization. High-capacity 96GB modules simplify channel balancing while enabling organizations to achieve large total memory capacities efficiently without compromising performance.
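The effect of channel population on capacity and peak bandwidth can be sketched with a small helper. This is an illustrative model only: the channel counts are hypothetical, `population_summary` is a made-up name, and it assumes every populated channel runs at the full 51.2GB/s (real platforms may lower memory speed when more than one DIMM is installed per channel).

```python
def population_summary(dimms_per_channel, dimm_gb=96, channel_gbs=51.2):
    """Summarize a DIMM layout given the number of DIMMs in each channel."""
    populated = [n for n in dimms_per_channel if n > 0]
    # Balanced here means every channel is populated with the same DIMM count.
    balanced = (len(populated) == len(dimms_per_channel)
                and len(set(dimms_per_channel)) == 1)
    capacity_gb = sum(dimms_per_channel) * dimm_gb
    # Peak bandwidth scales with the number of populated channels, not DIMMs.
    peak_gbs = round(len(populated) * channel_gbs, 1)
    return balanced, capacity_gb, peak_gbs


# Hypothetical 8-channel CPU, one 96GB RDIMM per channel.
print(population_summary([1] * 8))  # (True, 768, 409.6)

# Same CPU with only six channels populated.
print(population_summary([1, 1, 1, 1, 1, 1, 0, 0]))  # (False, 576, 307.2)
```

Leaving channels empty reduces peak bandwidth in proportion to the unpopulated channels, which is why filling every channel with identical modules is the usual recommendation.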

Enterprise and Industry Applications

The Micron MTC40F204WS1RC64BC1 96GB DDR5 RDIMM is suitable for a broad range of enterprise and industry workloads. It supports high-density virtualization, cloud computing, in-memory databases, AI/ML workloads, real-time analytics, and HPC clusters. Industries such as finance, healthcare, telecommunications, research, and government rely on high-capacity, high-speed, and error-correcting memory to ensure data integrity, low latency, and high availability.

Machine Learning Workloads

AI and ML workflows involve extensive dataset preprocessing, feature extraction, and model training, often requiring substantial memory resources. System memory serves as a staging area, supporting GPU or accelerator operations. The Micron 96GB DDR5 RDIMM provides sufficient capacity and bandwidth to ensure smooth AI workflows, reduce memory bottlenecks, and improve training and inference performance for complex machine learning models.

High-Performance Computing and Research Applications

High-performance computing environments perform simulations, modeling, and large-scale calculations that rely on reliable, high-speed memory. Dual-rank ECC registered RDIMMs provide the capacity, bandwidth, and error correction necessary for accurate, uninterrupted computations. The Micron 96GB DDR5 module enables researchers, engineers, and scientists to execute complex workloads efficiently, accelerating time-to-insight while maintaining high system reliability.

Micron Quality Assurance and Reliability

Micron is globally recognized for its stringent quality control, rigorous testing, and enterprise-grade reliability. The MTC40F204WS1RC64BC1 undergoes extensive validation, including signal integrity testing, environmental stress assessments, and compatibility verification with leading server platforms. This ensures consistent performance, long-term reliability, and predictable operation under demanding enterprise workloads.

Long-Term Availability

Enterprise-grade memory modules must provide long-term availability and compatibility across multiple server generations. Micron designs RDIMMs with lifecycle support in mind, ensuring consistent configurations and performance over extended deployment periods. This reduces the risk of obsolescence and simplifies server refresh cycles, allowing IT infrastructure to maintain uniform memory architecture over time.

Technical Documentation and Deployment

Micron provides detailed technical documentation, deployment guidelines, and compatibility matrices to assist administrators and system integrators in deploying memory modules. This guidance facilitates proper installation, configuration, and optimization, ensuring high-capacity DDR5 RDIMMs deliver maximum performance and reliability across enterprise platforms.

Strategic Role of DDR5 Memory

The Micron MTC40F204WS1RC64BC1 96GB DDR5 6400MHz PC5-51200 ECC Registered Dual Rank RDIMM plays a strategic role in modern enterprise computing. Its combination of high frequency, large capacity, dual-rank architecture, and multi-layer ECC reliability enables organizations to support demanding workloads, achieve high availability, and scale efficiently. By deploying these modules, enterprises ensure that server infrastructure remains future-ready, capable of supporting advanced virtualization, in-memory computing, AI/ML workloads, and high-performance computing applications.