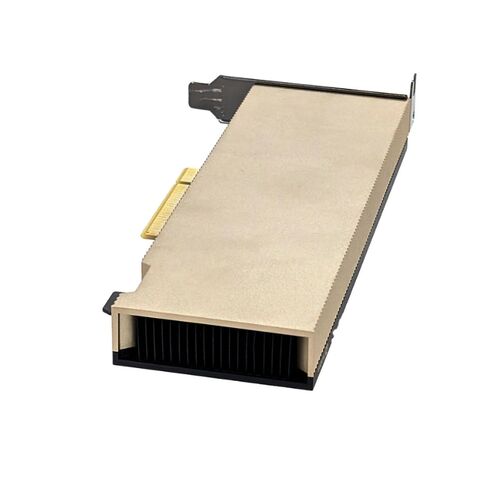

699-2G179-0220-100 NVIDIA A2 16GB GDDR6 PCIe Gen4 ECC Passive GPU

Brief Overview of 699-2G179-0220-100

NVIDIA 699-2G179-0220-100 A2 16GB GDDR6 computing processor: PCIe Gen4 x8, Tensor Core, low-profile, ECC, passive cooling. Excellent refurbished condition with a 1-year replacement warranty. ETA 2-3 weeks. No cancellations or returns.

- Free Ground Shipping

- Min. 6-month Replacement Warranty

- Genuine/Authentic Products

- Easy Return and Exchange

- Different Payment Methods

- Best Price

- We Guarantee Price Matching

- Tax-Exempt Facilities

- 24/7 Live Chat, Phone Support

- Visa, MasterCard, Discover, and Amex

- JCB, Diners Club, UnionPay

- PayPal, ACH/Bank Transfer (11% Off)

- Apple Pay, Amazon Pay, Google Pay

- Buy Now, Pay Later: Affirm, Afterpay

- GOV/EDU/Institution POs Accepted

- Invoices

- Deliver Anywhere

- Express Delivery in the USA and Worldwide

- Ship to APO/FPO

- For USA: Free Ground Shipping

- Worldwide: from $30

NVIDIA 699-2G179-0220-100 A2 GPU Computing Processor

Advanced GPU Architecture

The NVIDIA 699-2G179-0220-100 A2 is built on the NVIDIA Ampere architecture and targets AI inference, deep learning, and other GPU-accelerated compute workloads within a 40–60W power envelope.

Key Specifications

- GPU Architecture: NVIDIA Ampere

- CUDA Cores: 1280

- Tensor Cores: 40 (3rd Generation)

- RT Cores: 10 (2nd Generation)

- Peak FP32 Performance: 4.5 TFLOPS

- Peak TF32 Tensor Core Performance: 9 TFLOPS (18 TFLOPS with sparsity)

- Peak FP16 Tensor Core Performance: 18 TFLOPS (36 TFLOPS with sparsity)

- Peak INT8 Performance: 36 TOPS (72 TOPS with sparsity)

- Peak INT4 Performance: 72 TOPS (144 TOPS with sparsity)

- GPU Memory: 16GB GDDR6 ECC

- Memory Bandwidth: 200 GB/s

- System Interface: PCIe Gen4 x8

- Form Factor: Low-profile, single-slot

- Thermal Solution: Passive cooling

- Maximum Power Consumption: 40–60W (configurable)

CUDA, Tensor, and RT Cores

- CUDA Cores: 1280 parallel processing cores for efficient computation

- Tensor Cores: 40 third-generation cores optimized for AI operations

- RT Cores: 10 second-generation cores enabling accelerated ray tracing

Performance Metrics

FP and Tensor Precision

- Peak FP32: 4.5 TFLOPS for single-precision computing

- Peak TF32 Tensor Core: 9 TFLOPS (18 TFLOPS with sparsity)

- Peak FP16 Tensor Core: 18 TFLOPS (36 TFLOPS with sparsity)

INT Performance

- INT8: 36 TOPS (72 TOPS with sparsity)

- INT4: 72 TOPS (144 TOPS with sparsity)

Memory and Bandwidth

- GPU Memory: 16GB GDDR6 with ECC for reliable data integrity

- Memory Bandwidth: 200 GB/s for high-speed data transfer
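The memory figures above can be combined with the listed FP32 peak into a rough roofline-style estimate. The sketch below is illustrative only: it treats 1 GB as 10^9 bytes, uses the peak numbers from this listing rather than measured values, and its function names are hypothetical.

```python
# Figures quoted in this listing (peak values, not measurements).
MEMORY_GB = 16
BANDWIDTH_GB_S = 200
PEAK_FP32_TFLOPS = 4.5


def full_sweep_seconds(memory_gb=MEMORY_GB, bandwidth_gb_s=BANDWIDTH_GB_S):
    """Time to read the entire memory once at peak bandwidth."""
    return memory_gb / bandwidth_gb_s


def ridge_point_flop_per_byte(peak_tflops=PEAK_FP32_TFLOPS,
                              bandwidth_gb_s=BANDWIDTH_GB_S):
    """Arithmetic intensity above which a kernel stops being bandwidth-bound."""
    return (peak_tflops * 1e12) / (bandwidth_gb_s * 1e9)


def attainable_tflops(intensity_flop_per_byte,
                      peak_tflops=PEAK_FP32_TFLOPS,
                      bandwidth_gb_s=BANDWIDTH_GB_S):
    """Roofline bound: the lower of the compute peak and bandwidth * intensity."""
    bandwidth_bound = bandwidth_gb_s * 1e9 * intensity_flop_per_byte / 1e12
    return min(peak_tflops, bandwidth_bound)


print(f"Full memory sweep: {full_sweep_seconds():.2f} s")
print(f"FP32 ridge point:  {ridge_point_flop_per_byte():.1f} FLOP/byte")
```

Kernels that perform fewer floating-point operations per byte than the ridge point are limited by the 200 GB/s memory bandwidth rather than by the compute peak.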

Thermal Design and Power Efficiency

- Thermal Solution: Passive cooling with no onboard fan; relies on chassis airflow

- Maximum Power Consumption: 40–60 Watts configurable to system requirements

Connectivity and System Interface

- System Interface: PCIe Gen 4.0 x8 for high-speed communication with the host system

- Form Factor: Low-profile design ideal for compact systems

Key Advantages

- Optimized for AI inference workloads

- Energy-efficient operation with configurable power settings

- Supports a wide range of machine learning frameworks

- Compact design suitable for small form-factor servers

The NVIDIA A2 GPU

The NVIDIA 699-2G179-0220-100 A2 GPU is designed to deliver exceptional AI performance while maintaining low power consumption. Its Ampere architecture ensures robust processing for AI workloads, machine learning, and deep learning applications. The low-profile form factor and passive thermal design make it ideal for deployment in compact workstations, edge servers, and enterprise-grade computing solutions.

The NVIDIA A2 Tensor Core GPU

The NVIDIA A2 Tensor Core GPU (Part Number: 699-2G179-0220-100) is a compact, low-power accelerator designed to deliver high-performance computing capabilities for a variety of applications, including AI inference, machine learning, and data analytics. With its 16GB GDDR6 memory and support for PCIe Gen4 x8, the A2 offers a balance between performance and efficiency, making it suitable for deployment in environments where space and power constraints are critical considerations.

Performance and Capabilities

Compute Performance

The A2 GPU's architecture is optimized for a wide range of compute tasks. Its 1280 CUDA cores provide substantial parallel processing power, enabling efficient execution of complex algorithms and workloads. The inclusion of 40 third-generation Tensor Cores enhances performance for AI and machine learning applications, particularly those involving deep learning models that benefit from matrix operations and high-throughput computations.
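As a sanity check on the listed figures, the FP32 peak can be related to the core count. The sketch below assumes each CUDA core retires one fused multiply-add (two floating-point operations) per clock; that assumption and the function name are illustrative and not taken from this listing.

```python
# Figures quoted in this listing.
CUDA_CORES = 1280
PEAK_FP32_TFLOPS = 4.5

# Assumption: one fused multiply-add (2 FLOPs) per CUDA core per clock.
FLOPS_PER_CORE_PER_CLOCK = 2


def implied_clock_ghz(cores, peak_tflops,
                      flops_per_clock=FLOPS_PER_CORE_PER_CLOCK):
    """Clock (GHz) at which `cores` would reach `peak_tflops`."""
    flops_per_cycle = cores * flops_per_clock
    return peak_tflops * 1e12 / flops_per_cycle / 1e9


clock = implied_clock_ghz(CUDA_CORES, PEAK_FP32_TFLOPS)
print(f"Implied clock for the listed FP32 peak: {clock:.2f} GHz")
```

Under that assumption, the listed 4.5 TFLOPS corresponds to a clock of roughly 1.76 GHz.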

AI and Machine Learning Acceleration

With support for Tensor Cores, the A2 GPU excels in accelerating AI inference tasks. The peak performance figures, such as 9 TFLOPS for TF32 and 36 TFLOPS for FP16 with sparsity, indicate its capability to handle demanding AI workloads efficiently. These performance metrics make the A2 suitable for real-time AI applications, including image recognition, natural language processing, and recommendation systems.
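The Tensor Core figures in this listing follow a regular pattern, which the short sketch below makes explicit. It uses only the peak numbers quoted on this page; the helper names are hypothetical.

```python
# Peak Tensor Core figures quoted in this listing: (dense, with sparsity).
PEAK = {
    "TF32": (9, 18),    # TFLOPS
    "FP16": (18, 36),   # TFLOPS
    "INT8": (36, 72),   # TOPS
    "INT4": (72, 144),  # TOPS
}


def sparsity_gain(fmt):
    """Ratio of the sparse peak to the dense peak for one format."""
    dense, sparse = PEAK[fmt]
    return sparse / dense


def relative_to_tf32(fmt):
    """Dense peak of `fmt` as a multiple of the dense TF32 peak."""
    return PEAK[fmt][0] / PEAK["TF32"][0]


for fmt in PEAK:
    print(f"{fmt}: {relative_to_tf32(fmt):.0f}x TF32 dense, "
          f"{sparsity_gain(fmt):.0f}x with sparsity")
```

Each step down in precision doubles the listed peak, and sparsity doubles it again. These are peak figures, so the throughput a given model actually achieves will likely be lower.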

Graphics Rendering and Visualization

While the A2 GPU is primarily designed for compute-intensive tasks, its 10 second-generation RT Cores enable hardware-accelerated ray tracing, which can be beneficial for applications requiring advanced graphics rendering. This feature allows for realistic lighting and shadow effects, enhancing the visual quality of simulations and visualizations.

Form Factor

Compact and Efficient Design

The A2 GPU's low-profile, single-slot form factor makes it a good fit for systems with limited space, such as compact servers and workstations. Its passive cooling solution has no onboard fan and relies on system airflow, which removes one moving part as a potential point of failure.

Power Efficiency

Operating within a configurable power envelope of 40–60W, the A2 GPU offers a balance between performance and energy consumption. This power efficiency is particularly advantageous in data centers and edge computing environments where power availability and thermal constraints are significant considerations.
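To put the 40–60W envelope in concrete terms, the sketch below estimates yearly energy use at each end of the range. It assumes the card draws its full power cap continuously for a whole year, which is an upper bound rather than an expected figure; the function name is hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years


def annual_kwh(watts, hours=HOURS_PER_YEAR):
    """Energy in kWh if the card draws `watts` continuously for `hours`."""
    return watts * hours / 1000


for cap_w in (40, 60):
    print(f"{cap_w} W cap: up to {annual_kwh(cap_w):.1f} kWh per year")
```

The 20 W difference between the two ends of the range works out to about 175 kWh per card per year under this always-at-cap assumption.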

Machine Learning Model Inference

In machine learning workflows, the A2 GPU accelerates the inference phase, where trained models are applied to new data. Its support for Tensor Cores and high throughput ensures that models can be deployed effectively, delivering predictions with minimal delay.
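Whether a model can be served from the card depends first on whether its weights fit in the 16GB of memory. The following is a rough sizing sketch: it counts weights only, treats 1 GB as 10^9 bytes, and ignores activations, framework overhead, and any memory reserved by the driver. The function names and the 7-billion-parameter example are hypothetical.

```python
GPU_MEMORY_GB = 16  # from this listing

# Storage per parameter for each numeric format.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "INT8": 1, "INT4": 0.5}


def weights_gb(n_params, fmt):
    """Memory needed for the weights alone, in GB (10^9 bytes)."""
    return n_params * BYTES_PER_PARAM[fmt] / 1e9


def fits(n_params, fmt, memory_gb=GPU_MEMORY_GB):
    """True if the weights alone fit; real deployments need extra headroom."""
    return weights_gb(n_params, fmt) <= memory_gb


# Hypothetical example: a model with 7 billion parameters.
for fmt in BYTES_PER_PARAM:
    size = weights_gb(7e9, fmt)
    print(f"{fmt}: {size:.1f} GB, fits in 16 GB: {fits(7e9, fmt)}")
```

Because activations and runtime buffers also need memory, a model whose weights only just fit is unlikely to run in practice.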

Compatibility and Integration

System Requirements

For full host bandwidth, install the A2 GPU in a PCIe Gen4 slot that provides at least eight lanes. PCIe is backward compatible, so the card can also run in an earlier-generation slot at reduced link bandwidth. Its low-profile design allows it to fit into compact chassis, making it compatible with a wide range of server and workstation configurations. The passive cooling solution requires adequate system airflow to maintain thermal performance.
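The effect of slot generation on host bandwidth can be estimated from the PCIe signalling rates. The sketch below uses the standard per-lane rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b encoding; it gives the raw link rate per direction before protocol overhead, so achievable transfer rates will be somewhat lower. The function name is hypothetical.

```python
# Per-lane signalling rate in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0}

# Gen3 and Gen4 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_gb_per_s(generation, lanes):
    """Raw one-direction link bandwidth in GB/s, before protocol overhead."""
    bits_per_s = GT_PER_S[generation] * 1e9 * lanes * ENCODING_EFFICIENCY
    return bits_per_s / 8 / 1e9


for gen in (4, 3):
    print(f"PCIe Gen{gen} x8: {link_gb_per_s(gen, 8):.2f} GB/s per direction")
```

A Gen3 x8 slot therefore offers about half the host link bandwidth of the Gen4 x8 interface the card is designed for.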

Environmental Considerations

Thermal Management

Given its passive cooling design, the A2 GPU relies on the host system's airflow to dissipate heat. It is essential to ensure that the system provides adequate ventilation to maintain optimal operating temperatures and prevent thermal throttling.
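One way to confirm that chassis airflow is adequate is to watch the card's temperature and power draw under load. The sketch below reads both through `nvidia-smi`, assuming the NVIDIA driver is installed and that both fields are reported as numbers. The 80 °C alert threshold is an arbitrary placeholder, not an NVIDIA specification, and the function names are hypothetical.

```python
import subprocess

# Query temperature (deg C) and power draw (W) as bare CSV, one line per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=temperature.gpu,power.draw",
    "--format=csv,noheader,nounits",
]


def parse_reading(line):
    """Parse one CSV line such as '45, 38.21' into (temp_c, power_w)."""
    temp, power = (field.strip() for field in line.split(","))
    return int(temp), float(power)


def read_gpus():
    """Return a list of (temp_c, power_w) tuples, one per GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return [parse_reading(l) for l in result.stdout.splitlines() if l.strip()]


def too_hot(temp_c, limit_c=80):
    """Placeholder check; choose the limit from your system's documentation."""
    return temp_c >= limit_c
```

Calling `read_gpus()` periodically during a sustained workload shows whether the temperature levels off or keeps climbing, which would suggest the chassis airflow is insufficient.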

Operating Conditions

The A2 GPU is designed to operate in a range of environmental conditions. However, for sustained performance and longevity, it is recommended to deploy the GPU in environments with controlled temperature and humidity levels, adhering to the specifications provided by NVIDIA.