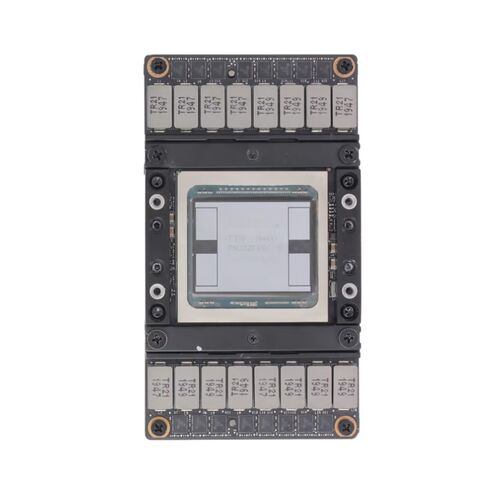

699-2G503-0201-200 Nvidia Tesla V100 SXM2 16GB GPU

- Free Ground Shipping

- Min. 6-month Replacement Warranty

- Genuine/Authentic Products

- Easy Return and Exchange

- Different Payment Methods

- Best Price

- We Guarantee Price Matching

- Tax-Exempt Facilities

- 24/7 Live Chat, Phone Support

- Visa, MasterCard, Discover, and Amex

- JCB, Diners Club, UnionPay

- PayPal, ACH/Bank Transfer (11% Off)

- Apple Pay, Amazon Pay, Google Pay

- Buy Now, Pay Later: Affirm, Afterpay

- GOV/EDU/Institutions POs Accepted

- Invoices

- Deliver Anywhere

- Express Delivery in the USA and Worldwide

- Ship to APO/FPO

- For USA: Free Ground Shipping

- Worldwide: from $30

Nvidia Tesla V100 SXM2 16GB GPU

The Nvidia 699-2G503-0201-200 Tesla V100 SXM2 16GB Computational Accelerator is a high-performance graphics processor designed for advanced computing tasks, artificial intelligence workloads, and scientific simulations.

Manufacturer Details

- Brand: Nvidia

- Part Number: 699-2G503-0201-200

- Category: Graphics Processing Unit

Technical Specifications

Core Features

- Chipset Model: Nvidia Tesla V100

- GPU Architecture: Volta

- Memory Capacity: 16 GB HBM2

- Memory Bandwidth: 900 GB/s (source: ITCreations.com)

Processing Units

CUDA Cores

- 5120 parallel CUDA cores for accelerated performance

Tensor Cores

- 640 tensor cores optimized for deep learning tasks
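The core counts above translate into a theoretical peak throughput once a clock speed is fixed. The sketch below is a rough calculation, not a benchmark. It assumes a 1.53 GHz boost clock (a figure that does not appear in this listing and should be checked against Nvidia's datasheet), one fused multiply-add per CUDA core per clock, and 64 fused multiply-adds per Tensor Core per clock.

```python
# Theoretical peak throughput derived from the listed core counts.
CUDA_CORES = 5120          # from the listing
TENSOR_CORES = 640         # from the listing
BOOST_CLOCK_HZ = 1.53e9    # ASSUMPTION: boost clock is not stated in this listing
FLOPS_PER_FMA = 2          # one fused multiply-add counts as two FLOPs
FMA_PER_TENSOR_CORE = 64   # ASSUMPTION: one 4x4x4 matrix FMA per clock


def peak_fp32_tflops(cores=CUDA_CORES, clock_hz=BOOST_CLOCK_HZ):
    """Peak single-precision rate if every CUDA core issues an FMA each clock."""
    return cores * FLOPS_PER_FMA * clock_hz / 1e12


def peak_tensor_tflops(cores=TENSOR_CORES, clock_hz=BOOST_CLOCK_HZ):
    """Peak mixed-precision matrix rate across all Tensor Cores."""
    return cores * FMA_PER_TENSOR_CORE * FLOPS_PER_FMA * clock_hz / 1e12


print(f"Peak FP32:   {peak_fp32_tflops():.1f} TFLOPS")
print(f"Peak tensor: {peak_tensor_tflops():.1f} TFLOPS")
```

Under these assumptions the figures come out near 15.7 TFLOPS for FP32 and about 125 TFLOPS for Tensor Core math. Real workloads reach only a fraction of either number.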

Connectivity and Interfaces

- Interconnect: SXM2 module with NVLink, passively cooled

- Host Bus: PCIe 3.0 (available in certain configurations)

Power and Thermal Design

- Thermal Design Power (TDP): 300 W

Physical Dimensions

Size Information

- Approximate dimensions: 10 x 6 x 4 inches

Key Highlights

Performance Advantages

- Exceptional memory bandwidth for data-intensive workloads

- High CUDA core count for parallel computing

- Tensor cores designed for AI and machine learning acceleration

Nvidia 699-2G503-0201-200 Tesla V100 16GB GPU Overview

The Nvidia 699-2G503-0201-200 Tesla V100 SXM2 16GB Computational Accelerator is a data center GPU module built for demanding high-performance computing environments. It targets parallel workloads: artificial intelligence, deep learning, numerical simulation and accelerated data analytics. Built on Nvidia’s Volta architecture, the SXM2 variant combines 5120 CUDA cores, 640 Tensor Cores and 16 GB of HBM2 memory within a 300 W power envelope. The SXM2 form factor mounts the module directly on the system board, which improves heat transfer and exposes the NVLink interconnect, so it suits GPU-dense servers and HPC clusters that must hold stable performance under continuous heavy workloads.

Advanced Architecture and Processing Efficiency

The Tesla V100 SXM2 accelerator uses a massively parallel architecture suited to complex scientific calculations and enterprise machine learning tasks. Its Volta GPU core design raises computational density and keeps performance consistent under load. The module sustains intensive floating-point work while keeping multi-GPU communication latency low: the SXM2 socket provides a direct GPU-to-GPU interconnect, and its cooling path supports extended runs without thermal throttling in a properly cooled chassis. As a result, research institutions, data center operators and AI development teams use these accelerators for simulation, neural network training and other computationally demanding workloads.

Optimized Tensor Performance

One of the primary advantages of the Tesla V100 SXM2 design is its Tensor Core implementation. These specialized cores accelerate the matrix operations at the heart of AI model training and deep neural network development. Volta Tensor Cores perform mixed-precision math: they multiply FP16 inputs and accumulate the results in FP16 or FP32. They do not accelerate INT8 operations, which Tensor Cores gained only in later architectures. Mixed precision lets data scientists and computational engineers cut training times for large models substantially while keeping accuracy close to that of full FP32 training. Workloads that would otherwise tie up substantial CPU resources and stretch project timelines become practical on a single accelerator.
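Volta Tensor Cores multiply FP16 inputs and can accumulate the products in FP32. The sketch below illustrates why the wider accumulator matters. It only emulates rounding on the CPU with Python's `struct` module and does not model the hardware; the helper names and the test vector are illustrative assumptions.

```python
import struct


def to_fp16(x: float) -> float:
    """Round to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x: float) -> float:
    """Round to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def dot(a, b, round_accumulator):
    """Dot product with FP16 inputs and a rounded running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(x) * to_fp16(y)  # inputs are stored as FP16
        acc = round_accumulator(acc + product)
    return acc


n = 10_000
a = [0.001] * n   # many small contributions
b = [1.0] * n

fp16_sum = dot(a, b, to_fp16)  # FP16 accumulator
fp32_sum = dot(a, b, to_fp32)  # FP32 accumulator (mixed precision)

print("FP16 accumulate:", fp16_sum)
print("FP32 accumulate:", fp32_sum)
```

The exact sum is close to 10. The FP16 accumulator stalls well short of that once each new term falls below half the spacing between representable values, while the FP32 accumulator stays close to the true result.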

High-Bandwidth HBM2 Memory Subsystem

The Nvidia 699-2G503-0201-200 Tesla V100 SXM2 features 16GB of high-bandwidth HBM2 memory that is engineered for data-intensive compute environments. This subsystem supports significantly higher memory bandwidth compared to traditional GDDR-based GPU platforms. The category emphasizes accelerators capable of sustaining uninterrupted data flow between GPU cores and memory modules, preventing bottlenecks during scientific simulations and AI training tasks. High-bandwidth memory enables faster loading of large datasets, improved responsiveness to dynamic computational demands and overall greater efficiency in memory-heavy workloads. Researchers and engineers working with vast training datasets or real-time analysis pipelines benefit greatly from this memory architecture.
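To put the listed 900 GB/s figure in perspective, the sketch below estimates the best-case time for two memory-bound operations. It treats 900 GB/s as a peak rate and takes 1 GB as 10^9 bytes; measured bandwidth is lower, and the vector-add example is an illustrative assumption rather than a benchmark.

```python
MEMORY_GB = 16             # from the listing
BANDWIDTH_GB_PER_S = 900   # from the listing (peak figure)


def full_sweep_ms(memory_gb=MEMORY_GB, bandwidth=BANDWIDTH_GB_PER_S):
    """Best-case time to read the whole memory once, in milliseconds."""
    return memory_gb / bandwidth * 1000


def memory_bound_seconds(bytes_moved, bandwidth=BANDWIDTH_GB_PER_S):
    """Lower bound on run time for a kernel limited by memory traffic."""
    return bytes_moved / (bandwidth * 1e9)


# Hypothetical kernel: c[i] = a[i] + b[i] on one billion float32 values.
# Each element reads two 4-byte values and writes one: 12 bytes of traffic.
elements = 1_000_000_000
vector_add_bytes = elements * 12

print(f"Full memory sweep: {full_sweep_ms():.1f} ms")
print(f"Vector add bound:  {memory_bound_seconds(vector_add_bytes) * 1000:.1f} ms")
```

Both results are lower bounds. They are useful mainly for judging whether a kernel is limited by memory traffic or by arithmetic.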

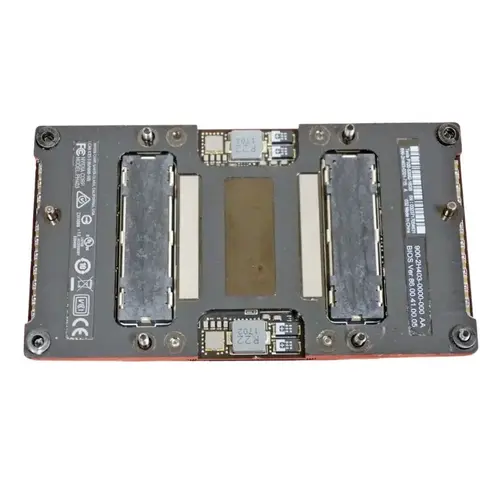

Thermal Design and SXM2 Form Factor Advantages

The SXM2 configuration of the Tesla V100 provides superior thermal conductivity and advanced physical integration compared to PCIe-based GPU accelerators. This category includes GPU modules optimized for blade servers, high-density GPU chassis and systems utilizing Nvidia NVLink interconnect frameworks. The optimized thermal surface area allows the accelerator to maintain stable operating temperatures even under multi-hour workloads that push GPU cores to peak capacity. This ensures reliability for mission-critical applications requiring consistent output without thermal degradation. The SXM2 design further supports advanced cooling frameworks implemented in enterprise-class servers, making it a preferred choice for HPC setups leveraging multi-GPU nodes.

NVLink High-Speed Interconnect Capability

The Tesla V100 SXM2 also supports Nvidia NVLink, a high-bandwidth interface for direct peer-to-peer communication between GPUs. NVLink offers considerably more bandwidth than a PCIe 3.0 path, which removes a common bottleneck in multi-GPU work. In an NVLink-equipped server, the GPUs in a node exchange data directly and can be programmed as a tightly coupled pool of compute. This benefits engineering simulations, large-scale AI workloads and scientific modeling scenarios where rapid inter-GPU data exchange is essential. NVLink-enabled systems scale better and distribute workloads more efficiently across multiple accelerators.
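A rough comparison of GPU-to-GPU transfer times shows the difference between the two paths. The bandwidth figures below are assumptions that do not appear in this listing: 25 GB/s per direction for each NVLink link on the V100, and about 15.75 GB/s per direction for PCIe 3.0 x16. The number of links between any two GPUs depends on the server topology, so it is left as a parameter.

```python
NVLINK_GB_PER_S_PER_LINK = 25.0   # ASSUMPTION: per link, per direction
PCIE3_X16_GB_PER_S = 15.75        # ASSUMPTION: theoretical, per direction


def nvlink_gb_per_s(links: int) -> float:
    """Peak one-way bandwidth between two GPUs joined by `links` NVLink links."""
    return links * NVLINK_GB_PER_S_PER_LINK


def transfer_ms(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Best-case transfer time in milliseconds, ignoring latency and overhead."""
    return size_gb / bandwidth_gb_per_s * 1000


payload_gb = 8.0  # hypothetical buffer, e.g. a large set of gradients

for links in (1, 2):
    ms = transfer_ms(payload_gb, nvlink_gb_per_s(links))
    print(f"NVLink x{links}: {ms:.0f} ms")
print(f"PCIe 3.0 x16: {transfer_ms(payload_gb, PCIE3_X16_GB_PER_S):.0f} ms")
```

These are peak-rate estimates only. Achieved throughput depends on transfer size, topology and driver overhead.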

Enterprise and HPC Application Focus

The Tesla V100 SXM2 accelerator category is widely adopted across fields requiring complex computational modeling. Scientific institutions employ these accelerators for weather prediction models, molecular dynamics simulations and energy research calculations. Enterprise AI teams rely on the category for training foundational models, natural language processing frameworks, generative AI systems and large-scale recommendation algorithms. Financial organizations integrate these accelerators into risk assessment frameworks, algorithmic modeling and real-time analytics environments. Healthcare research facilities utilize this category for genomic sequencing analysis and imaging workloads. The broad applicability of the Tesla V100 SXM2 demonstrates the versatility and reliability of this accelerator family.

Data Center Deployment Advantages

Within large-scale data centers, accelerators in this category enable advanced virtualization, parallelized operations and GPU-accelerated containerized workloads. Administrators benefit from high-reliability architecture that supports multi-tenant environments, distributed computational pipelines and cloud-based GPU provisioning. The strong compute density offered by the SXM2 form factor helps optimize rack space utilization, allowing enterprises to scale compute capacity without large expansions in physical infrastructure. Enhanced energy efficiency further assists operators in maintaining lower operational expenses while delivering top-tier acceleration across numerous workloads.

Reliability, Longevity and Manufacturing Standards

The Nvidia 699-2G503-0201-200 Tesla V100 SXM2 is produced with enterprise manufacturing standards that prioritize durability, component integrity and long-term reliability. Products within this category undergo rigorous validation tests to ensure stable performance under continuous processing loads. The robust design of the Tesla V100 makes it suitable for always-on HPC environments, AI compute farms and enterprise clusters requiring uninterrupted operation. Hardware-level error correction, optimized voltage regulation and stable interaction between GPU cores and memory systems contribute to long-term reliability and predictable output performance.

System Integration and Compatibility

The SXM2 Tesla V100 is built for systems engineered as multi-GPU compute nodes, including Nvidia DGX systems, specialized HPC servers and modular data center platforms. The module requires a board with SXM2 sockets and cannot be installed in a standard PCIe slot, so host compatibility should be confirmed before purchase. These accelerators are intended for professional system integration, where consistent performance, predictable thermal characteristics and efficient interconnect capability are essential. On the software side, the card runs AI frameworks such as TensorFlow and PyTorch, as well as parallel computing libraries built on CUDA. In a compatible server, organizations can deploy these accelerators without hardware revisions or system-level redesigns.
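After installation, the Nvidia driver's `nvidia-smi` tool can confirm that the host sees the module. The sketch below is one possible check, assuming the driver is installed and `nvidia-smi` is on the PATH. The function names are hypothetical, and the sample line is illustrative rather than captured from a real system.

```python
import subprocess

# Query only the fields needed, as plain CSV without a header row or units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_rows(csv_text: str):
    """Turn 'name, memory_mib' lines into (name, memory_mib) tuples."""
    rows = []
    for line in csv_text.strip().splitlines():
        name, memory_mib = line.rsplit(",", 1)
        rows.append((name.strip(), int(memory_mib.strip())))
    return rows


def list_gpus():
    """Run nvidia-smi and return the parsed rows (needs a driver and a GPU)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_rows(result.stdout)


# Illustrative sample of the output shape, not taken from a real system.
sample = "Tesla V100-SXM2-16GB, 16160\n"
print(parse_gpu_rows(sample))
```

On a host with several modules, `list_gpus()` returns one tuple per GPU, which makes it easy to confirm the count and memory size before scheduling work.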

Scalability Advantages for AI and Scientific Research

Scalability is a central strength of the Tesla V100 SXM2, letting organizations expand computational capacity as workloads grow in complexity. Multi-GPU clusters allow researchers and developers to process significantly larger datasets, train more complex models and run higher-fidelity simulations. Within a node, NVLink-optimized server platforms let well-parallelized workloads scale close to linearly with GPU count; scaling across nodes also depends on the cluster's network fabric. This scalable performance helps enterprises reduce total project time and accelerate innovation across fields such as robotics, natural sciences, cloud AI services and predictive analytics.
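How far a workload scales across GPUs depends on how much of it can run in parallel. The sketch below applies Amdahl's law as a simple upper bound. The parallel fractions are illustrative assumptions, and the model ignores communication cost, so real results are lower.

```python
def amdahl_speedup(gpus: int, parallel_fraction: float) -> float:
    """Upper bound on speedup when only part of the work is parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / gpus)


# Hypothetical workloads with different parallel fractions.
for fraction in (0.90, 0.95, 0.99):
    row = ", ".join(
        f"{gpus} GPUs: {amdahl_speedup(gpus, fraction):.2f}x"
        for gpus in (2, 4, 8)
    )
    print(f"parallel fraction {fraction:.2f} -> {row}")
```

Even at 95% parallel work, eight GPUs give less than a 6x speedup under this model. This is why reducing serial work and communication matters as much as adding accelerators.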

Enhanced Software Ecosystem Support

Nvidia’s software ecosystem provides extensive support for accelerators in this category through CUDA, cuDNN, TensorRT and other GPU-optimized libraries. The Tesla V100 SXM2 benefits from continuous updates, performance tuning enhancements and compatibility improvements within the Nvidia software environment. This deep software integration ensures that researchers and AI developers can fully utilize the computational power of the accelerator with reduced configuration complexity. Long-term software support further enhances the product’s viability in enterprise deployment plans, maximizing lifecycle value and computational return on investment.