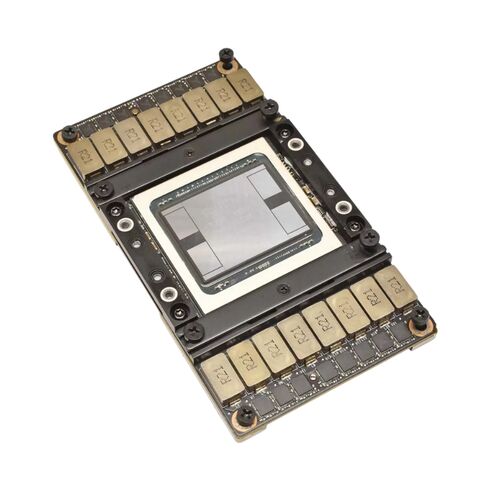

699-2G503-0203-220 Nvidia Tesla V100-SXM2-32GB Passive GPU Accelerator Card

- Free Ground Shipping

- Min. 6-month Replacement Warranty

- Genuine/Authentic Products

- Easy Return and Exchange

- Different Payment Methods

- Best Price

- We Guarantee Price Matching

- Tax-Exempt Facilities

- 24/7 Live Chat, Phone Support

- Visa, MasterCard, Discover, and Amex

- JCB, Diners Club, UnionPay

- PayPal, ACH/Bank Transfer (11% Off)

- Apple Pay, Amazon Pay, Google Pay

- Buy Now, Pay Later: Affirm, Afterpay

- GOV/EDU/Institutions POs Accepted

- Invoices

- Deliver Anywhere

- Express Delivery in the USA and Worldwide

- Ship to APO/FPO

- USA: Free Ground Shipping

- Worldwide: from $30

Details of the Nvidia Tesla V100 32GB Passive GPU

General Product Information

- Brand Name: Nvidia Corporation

- Model Number: 699-2G503-0203-220

- Hardware Category: High-Performance Graphics Adapter

- Product Type: GPU Accelerator Card

Technical Highlights

Graphics Architecture and Processing Power

- GPU Architecture: NVIDIA Volta

- CUDA Core Count: 5120 Parallel Computing Units

- Graphics Processor: NVIDIA GV100-896A-A1

Memory Configuration

- Installed Memory: 32GB High Bandwidth Memory (HBM2)

- Memory Type: HBM2 Technology

Interface and Form Factor

- Bus Interface: NVIDIA NVLink

- Card Format: SXM2 Module

- Cooling Design: Passive (Fanless)

Software and Compute API Compatibility

Supported Programming Interfaces

- CUDA

- DirectCompute

- OpenCL

- OpenACC

The Tesla V100-SXM2 GPU

- Engineered for artificial intelligence, deep learning, and scientific simulations

- Massive parallel processing with 5120 CUDA cores for accelerated workloads

- NVLink interface ensures ultra-fast data transfer between GPUs

- Passive cooling design ideal for data center environments

- Supports multiple compute APIs for versatile development

Nvidia 699-2G503-0203-220 32GB GPU Accelerator Overview

Advanced Architecture and Performance

The Tesla V100-SXM2-32GB uses Nvidia's Volta GPU architecture, which introduced Tensor Cores optimized for deep learning. The Tensor Cores accelerate mixed-precision matrix operations and together deliver up to 125 teraflops of deep learning performance. The 32GB of on-package high-bandwidth memory (HBM2) keeps large models and datasets resident on the GPU and feeds the compute units quickly, which reduces memory-bandwidth bottlenecks in memory-intensive applications. This makes the card well suited to AI training, scientific research, and high-performance computing (HPC) workloads.
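The 125-teraflop figure can be sanity-checked with simple arithmetic. The sketch below relies on values from Nvidia's published V100 specifications that are not stated in this listing, so treat them as assumptions: 640 Tensor Cores, 64 fused multiply-adds per Tensor Core per clock, and a boost clock of roughly 1530 MHz. The function name is illustrative only.

```python
def peak_tensor_tflops(tensor_cores: int, fma_per_clock: int, boost_clock_hz: float) -> float:
    """Theoretical peak Tensor Core throughput in teraflops."""
    flops_per_fma = 2  # one multiply plus one add
    return tensor_cores * fma_per_clock * flops_per_fma * boost_clock_hz / 1e12


# Assumed V100-SXM2 values (not taken from this listing).
v100_peak = peak_tensor_tflops(tensor_cores=640, fma_per_clock=64, boost_clock_hz=1.53e9)
print(f"{v100_peak:.1f} TFLOPS")
```

This is a theoretical ceiling. Real workloads typically reach only a fraction of it, depending on how well the matrix sizes and data layout fit the Tensor Cores.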

Passive Cooling Design for Data Centers

The Nvidia 699-2G503-0203-220 model is passively cooled: the module carries a heatsink but no onboard fan, and relies on the airflow provided by the server chassis to dissipate heat. With no fan on the module itself, there is one less moving part to fail, and the compact design suits high-density server racks. Given adequate chassis airflow, this design supports scalable deployments in large data centers and sustained operation under heavy computational loads.

High-Bandwidth Memory and Connectivity

The Tesla V100-SXM2 is equipped with 32GB of HBM2 memory, providing a memory bandwidth of up to 900 GB/s. This bandwidth gives fast access to large datasets, which is crucial for AI, machine learning, and scientific simulations. The SXM2 form factor is a module that mounts in a compatible server board rather than a PCIe slot, which provides efficient power delivery and direct access to the NVLink interconnect. NVLink lets multiple GPUs exchange data directly, improving computational throughput and multi-GPU scaling.
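To put the 900 GB/s figure in context, the following sketch computes two back-of-the-envelope numbers from the values quoted in this listing (32 GB, 900 GB/s, 125 teraflops): the minimum time to read the entire memory once, and the arithmetic intensity at which a kernel stops being limited by memory bandwidth. It assumes decimal gigabytes and peak rates, and the function names are illustrative.

```python
def sweep_time_ms(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Lower bound on the time to read the whole memory once, in milliseconds."""
    return capacity_gb / bandwidth_gb_s * 1000.0


def ridge_point_flop_per_byte(peak_tflops: float, bandwidth_gb_s: float) -> float:
    """Arithmetic intensity (FLOP per byte) above which a kernel is compute-bound."""
    return (peak_tflops * 1e12) / (bandwidth_gb_s * 1e9)


sweep = sweep_time_ms(capacity_gb=32, bandwidth_gb_s=900)
ridge = ridge_point_flop_per_byte(peak_tflops=125, bandwidth_gb_s=900)
print(f"full memory sweep: {sweep:.1f} ms, ridge point: {ridge:.0f} FLOP/byte")
```

Kernels that perform fewer operations per byte than the ridge point are limited by memory bandwidth rather than by Tensor Core throughput, which is why the HBM2 bandwidth matters as much as the teraflop rating.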

Deep Learning and AI Optimization

The Tesla V100-SXM2-32GB is designed with AI workloads in mind. Tensor Cores accelerate the training of deep neural networks, reducing time to insight for machine learning projects. Researchers can use mixed-precision computing, which performs most arithmetic in half precision while keeping sensitive values in higher precision, to raise performance with little or no loss of accuracy. The GPU is supported by Nvidia's CUDA platform and the cuDNN and TensorRT libraries, giving developers a mature software stack for optimizing model training and inference.
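Why mixed precision keeps a wider accumulator can be shown without a GPU. The sketch below is a CPU-only illustration, not Nvidia's implementation: it uses Python's standard `struct` module to round values to IEEE 754 half precision, then sums the same FP16 inputs once with an FP16 running total and once with a wider one. Python's 64-bit float stands in for the FP32 accumulator here, and the helper names are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def accumulate(values, round_accumulator: bool) -> float:
    """Sum FP16 inputs, optionally rounding the running total to FP16 as well."""
    total = 0.0
    for v in values:
        total += to_fp16(v)           # inputs are always stored in half precision
        if round_accumulator:
            total = to_fp16(total)    # pure-FP16 accumulation
    return total


ones = [1.0] * 4096
fp16_sum = accumulate(ones, round_accumulator=True)
wide_sum = accumulate(ones, round_accumulator=False)
print(fp16_sum, wide_sum)
```

The FP16 running total stalls at 2048 because half precision cannot represent 2049, so every further addition rounds back down. The wider accumulator reaches the correct 4096. This is the reason mixed-precision training multiplies in FP16 but accumulates in a higher precision.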

Scientific Research and High-Performance Computing

In addition to AI, the Tesla V100-SXM2 excels in high-performance computing environments. Its immense computational power accelerates simulations, molecular dynamics, weather modeling, and other scientific computations. The passive GPU design allows for dense server deployments, while HBM2 memory ensures large-scale simulations run smoothly. Researchers benefit from reduced compute times, enabling faster experimentation and innovation across multiple scientific disciplines.

Energy Efficiency and Data Center Integration

The Tesla V100-SXM2-32GB is engineered to optimize energy efficiency while maintaining peak performance. Passive cooling and advanced power management help data centers reduce operational costs and limit thermal stress on server components. The SXM2 form factor is compatible with Nvidia-certified servers that provide SXM2 sockets, allowing integration into existing data center infrastructure. IT administrators can deploy multiple Tesla GPUs to scale AI and HPC workloads while staying within the power and cooling capacity of the chassis.

Software Ecosystem and Developer Tools

Nvidia provides a comprehensive software ecosystem for the Tesla V100-SXM2 GPU. CUDA, Nvidia's parallel computing platform, allows developers to use GPU acceleration for a wide range of applications. TensorRT provides optimized inference for deep learning models, while cuDNN accelerates neural network computations. This ecosystem helps developers and researchers deploy and manage AI, ML, and HPC workloads efficiently, making the Tesla V100-SXM2 a well-supported platform for high-performance computing.

Scalable Multi-GPU Deployments

The Tesla V100-SXM2 supports NVLink and multi-GPU scaling, enabling the combination of several GPUs to achieve massive parallel processing power. This capability is essential for large-scale AI model training and simulations that require extremely high compute throughput. Researchers can scale workloads horizontally, combining multiple Tesla GPUs to tackle projects that would otherwise be impossible with a single GPU, ensuring flexibility, efficiency, and accelerated results in data-intensive environments.
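How much interconnect speed matters for multi-GPU training can be estimated with a simple model. The sketch below assumes a ring all-reduce, a common way to combine gradients across GPUs, and ignores latency and protocol overhead. It is not a statement of how any particular library schedules traffic on this hardware. The payload size and link bandwidth in the example are hypothetical placeholders, not specifications from this listing.

```python
def ring_allreduce_seconds(payload_bytes: float, num_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce across num_gpus devices."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    if num_gpus == 1:
        return 0.0  # nothing to exchange with a single GPU
    # Each GPU sends and receives 2 * (N - 1) / N of the payload in total.
    traffic_per_gpu = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic_per_gpu / link_bytes_per_s


# Hypothetical example: 1 GB of gradients, 8 GPUs, 100 GB/s usable per GPU.
estimate = ring_allreduce_seconds(payload_bytes=1e9, num_gpus=8, link_bytes_per_s=100e9)
print(f"{estimate * 1000:.1f} ms per all-reduce")
```

Because per-GPU traffic approaches twice the payload as the GPU count grows, the synchronization time is governed mainly by link bandwidth, which is where a fast GPU-to-GPU interconnect helps.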