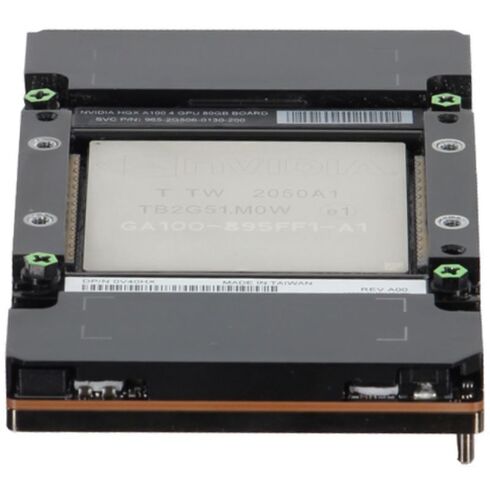

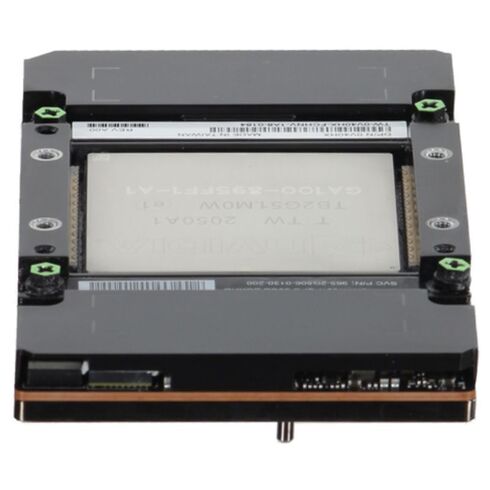

699-2G506-0212-320 Nvidia A100 Tensor Core 80GB 500W GPU

- Free Ground Shipping

- Min. 6-month Replacement Warranty

- Genuine/Authentic Products

- Easy Return and Exchange

- Different Payment Methods

- Best Price

- We Guarantee Price Matching

- Tax-Exempt Facilities

- 24/7 Live Chat, Phone Support

- Visa, MasterCard, Discover, and Amex

- JCB, Diners Club, UnionPay

- PayPal, ACH/Bank Transfer (11% Off)

- Apple Pay, Amazon Pay, Google Pay

- Buy Now, Pay Later: Affirm, Afterpay

- GOV/EDU/Institutions PO's Accepted

- Invoices

- Deliver Anywhere

- Express Delivery in the USA and Worldwide

- Ship to APO/FPO

- For USA: Free Ground Shipping

- Worldwide: from $30

Main Details of Nvidia A100 Tensor Core GPU

The Nvidia 699-2G506-0212-320 A100 Tensor Core 80GB 500W SXM4 GPU is a high-performance graphics processor designed for advanced computing workloads, artificial intelligence, and machine learning acceleration.

General Specifications

- Brand Name: Nvidia

- Model Number: 699-2G506-0212-320

- Product Type: Graphics Processing Unit

Technical Highlights

- Engine Architecture: Ampere

- CUDA Cores: 6912

Memory Features

- Memory Capacity: 80GB HBM2E (High Bandwidth Memory)

- Memory Bandwidth: 2039 GB/s

- Memory Interface Width: 5120-bit
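The bandwidth and interface width listed above are linked by simple arithmetic: peak bandwidth equals the bus width in bytes multiplied by the per-pin data rate. The sketch below backs out the per-pin rate implied by the two listed figures. The function names are illustrative only, and the result is a derived estimate rather than a published specification.

```python
# Figures taken from the listing above.
BUS_WIDTH_BITS = 5120
BANDWIDTH_GB_S = 2039


def per_pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by a peak bandwidth and bus width."""
    bytes_per_transfer = bus_width_bits / 8  # 5120 bits -> 640 bytes
    return bandwidth_gb_s / bytes_per_transfer


def peak_bandwidth_gb_s(rate_gbps: float, bus_width_bits: int) -> float:
    """Inverse relation: peak bandwidth (GB/s) from per-pin rate and bus width."""
    return rate_gbps * bus_width_bits / 8


rate = per_pin_rate_gbps(BANDWIDTH_GB_S, BUS_WIDTH_BITS)
print(f"Implied per-pin data rate: {rate:.2f} Gbit/s")
```

Under these figures the implied rate is roughly 3.2 Gbit/s per pin.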

Connectivity

- Interface: PCI Express 4.0 (PCI-E 4.0 x16 bus support)

- NVLink Bandwidth: 600 GB/s

Performance Details

Computational Power

- Peak Single Precision (FP32): 19.5 TFLOPS

- FP64 (Double Precision): 9.7 TFLOPS

- FP64 Tensor Core: 19.5 TFLOPS
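The peak figures above can be approximated from the CUDA core count. The sketch below assumes a boost clock of 1.41 GHz, which does not appear in this listing, and counts one fused multiply-add (two floating-point operations) per core per clock. It also assumes the standard FP64 rate is half the FP32 rate, which matches the listed 9.7 and 19.5 figures. Treat it as a sanity check on the listed numbers, not a benchmark.

```python
CUDA_CORES = 6912          # from the listing
BOOST_CLOCK_GHZ = 1.41     # assumption: not stated in the listing
FLOPS_PER_CORE_CLOCK = 2   # one fused multiply-add counts as two operations


def peak_tflops(cores: int, clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Theoretical peak in TFLOPS: cores x clock (GHz) x ops per clock / 1000."""
    return cores * clock_ghz * flops_per_clock / 1000.0


fp32 = peak_tflops(CUDA_CORES, BOOST_CLOCK_GHZ, FLOPS_PER_CORE_CLOCK)
fp64 = fp32 / 2  # assumption: non-Tensor FP64 runs at half the FP32 rate

print(f"FP32 peak: {fp32:.1f} TFLOPS")
print(f"FP64 peak: {fp64:.1f} TFLOPS")
```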

Energy Consumption

- Maximum Graphics Power: 500W as listed for this part (standard A100 80GB SXM4 modules are rated at 400W)

Cooling System

- Cooling Type: Passive (requires optimized server airflow)

Key Highlights

Advanced Memory Technology

- HBM2E delivers 2039 GB/s of memory bandwidth

- Wide 5120-bit memory interface

High Computational Throughput

- Optimized for AI, ML, and HPC workloads

- Peak throughput of 19.5 TFLOPS (FP32) and 9.7 TFLOPS (FP64)

Connectivity and Scalability

- PCI-E 4.0 support for modern systems

- NVLink bandwidth of 600 GB/s for multi-GPU setups

Energy Efficiency and Cooling

- Passive cooling design for data center environments

- Requires efficient airflow management

Nvidia 699-2G506-0212-320 A100 Tensor Core GPU Overview

The Nvidia 699-2G506-0212-320 A100 Tensor Core 80GB 500W SXM4 GPU is a flagship data center accelerator for artificial intelligence, high performance computing, large-scale data analytics, and enterprise cloud infrastructure. Built on the Ampere architecture, it combines Multi-Instance GPU (MIG) partitioning, 80GB of high-bandwidth memory, and Tensor Core throughput so that a single data center can serve diverse and demanding applications. The SXM4 form factor supports dense, scalable, and efficient deployments in mission-critical servers.

Architecture Capabilities

Engineered to serve as the heart of accelerated computing platforms, the A100 80GB GPU uses third-generation Tensor Cores that accelerate mixed-precision operations. Machine learning engineers and scientific researchers can choose among FP32, TF32, BFLOAT16, FP16, INT8, and other numeric formats, trading precision for speed as each workload allows. This multi-precision flexibility lets a single accelerator handle both training and inference, so full AI pipelines can run on the same hardware.

The SXM4 module allows for significantly higher power headroom, which helps the A100 80GB variant sustain heavy workloads with less risk of thermal throttling when the host server provides adequate cooling. This supports steady throughput in multi-node clusters, GPU-optimized servers, and hybrid computing environments. The SXM4 form factor also provides NVLink and NVSwitch connectivity, with 600 GB/s of NVLink bandwidth for processing large datasets in parallel.

Memory Bandwidth and High-Capacity HBM2E Integration

One of the signature features of the Nvidia 699-2G506-0212-320 A100 Tensor Core GPU is its 80GB HBM2E memory configuration. This high-bandwidth memory is built for applications that rely on vast datasets, complex model structures, or memory-intensive simulations. With 2039 GB/s of bandwidth over a 5120-bit interface, HBM2E keeps the processing cores supplied with data, reducing memory stalls and accelerating modeling, graph analytics, advanced simulations, and transformer training.

The substantial 80GB capacity ensures that machine learning frameworks, high-resolution scientific models, and enterprise inference engines operate with minimal need for memory partitioning or fragmentation. For AI workloads involving large embedding tables or multi-billion-parameter deep learning networks, the GPU's memory footprint supports fluid execution, minimizing overhead typically associated with memory swapping or external data transfers. This advantage becomes especially valuable within HPC clusters where resource efficiency directly impacts overall computational performance.
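A rough way to judge whether a model fits in 80GB is to multiply its parameter count by the bytes per parameter for the chosen numeric format and add headroom for activations and framework overhead. The sketch below does exactly that. The 20% overhead fraction is a hypothetical placeholder, capacity is treated as decimal gigabytes, and training needs considerably more memory than weights alone because of gradients and optimizer state.

```python
# Storage size per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "tf32": 4, "bf16": 2, "fp16": 2, "int8": 1}
GPU_MEMORY_GB = 80  # from the listing, treated as decimal gigabytes


def weights_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the model weights alone, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(num_params: float, dtype: str, overhead_fraction: float = 0.2) -> bool:
    """True if weights plus a fractional overhead fit in GPU memory.

    overhead_fraction is a hypothetical allowance for activations and
    framework buffers, not a measured value.
    """
    return weights_gb(num_params, dtype) * (1 + overhead_fraction) <= GPU_MEMORY_GB


for dtype in ("fp32", "fp16", "int8"):
    size = weights_gb(30e9, dtype)
    print(f"30B parameters in {dtype}: {size:.0f} GB, fits: {fits_on_gpu(30e9, dtype)}")
```

In this estimate a 30-billion-parameter model fits in FP16 or INT8 but not in FP32.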

Tensor Core Performance in Machine Learning and AI Development

At the center of the A100's capabilities is its Tensor Core design, which delivers large speedups for the matrix operations that dominate deep-learning computation. For neural network training, Tensor Cores accelerate the matrix multiply-accumulate steps that otherwise place heavy demand on the standard floating-point units. This helps researchers shorten lengthy training cycles, supporting faster experimentation and model refinement.

During inference, the GPU sustains high throughput, allowing complex AI models to respond with low latency even at large scale. Workloads built on convolutional networks, attention mechanisms, language models, and collaborative filtering all map well to Tensor Core operations. Organizations in fields ranging from natural language processing to autonomous systems use the A100’s Tensor processing pipeline to accelerate these workloads.

Integration in Scalable Compute Environments and GPU Clusters

The Nvidia A100 SXM4 80GB module is designed for high-density compute nodes where multiple accelerators operate together within tightly integrated servers. Through NVLink and NVSwitch interconnect technologies, many GPUs can communicate simultaneously with near-uniform bandwidth between any pair of GPUs. This reduces bottlenecks in distributed environments and improves scaling in enterprise-grade systems.

When integrated into GPU clusters, the A100 supports massive distributed training loads, enabling organizations to scale their AI research without sacrificing speed or efficiency. Its exceptional I/O and bandwidth performance are crucial for data centers running mixed compute workloads, particularly when training must be executed across multiple GPUs or servers. The SXM4 configuration also contributes to reducing communication overhead between GPUs, enabling multi-GPU architectures to function nearly as a single cohesive processing unit.

Role in High Performance Computing Workloads

Within the HPC domain, the Nvidia 699-2G506-0212-320 A100 Tensor Core GPU acts as a central driver for demanding scientific simulations, climate modeling, advanced physics calculations, genomic processing, and mathematical modeling applications. Its massive computational density supports researchers working on grand-challenge problems that require high-speed matrix operations and accelerated numerical processing.

The A100 architecture provides the flexibility to execute traditional double-precision scientific workflows alongside AI-enhanced models that incorporate neural networks or pattern recognition. This dual-purpose capability allows HPC infrastructures to merge classical simulation techniques with machine learning-driven prediction methods, making the A100 a powerful asset for next-generation research workloads.

Benefits in Cloud AI and Virtualized Infrastructure

Cloud platforms increasingly adopt A100 Tensor Core GPUs to meet the growing demand for virtualized AI acceleration. Multi-Instance GPU (MIG) technology allows the A100 80GB SXM4 GPU to be partitioned into as many as seven independent instances, each with dedicated compute and memory resources and each able to run a different workload. This raises utilization in cloud compute environments by letting service providers host multiple tenants or simultaneous AI processes on a single accelerator.

MIG support ensures predictable performance and strong isolation between workloads, making it beneficial for enterprises seeking reliable acceleration for diverse AI models or containerized applications. This flexibility is essential in modern DevOps and MLOps workflows where different teams require dedicated GPU resources without interference from other processes.
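The partitioning described above can be thought of as a budget of compute slices and memory. The sketch below checks whether a requested mix of MIG instances stays within seven compute slices and 80GB of memory, using profile names in the `Ng.Mgb` style (for example `1g.10gb` or `3g.40gb`). It is a simplified capacity check under those assumptions: real MIG placement has additional rules, so a mix that passes here is not guaranteed to be a valid layout.

```python
MAX_COMPUTE_SLICES = 7   # an A100 exposes up to seven MIG compute slices
MAX_MEMORY_GB = 80       # memory capacity from the listing


def parse_profile(name: str) -> tuple[int, int]:
    """Split a profile name such as '3g.40gb' into (compute_slices, memory_gb)."""
    compute, memory = name.split("g.")
    return int(compute), int(memory[:-2])  # drop the trailing 'gb'


def within_budget(profiles: list[str]) -> bool:
    """True if the requested instances fit the compute and memory totals.

    Simplified check only: it ignores MIG placement constraints.
    """
    parsed = [parse_profile(p) for p in profiles]
    total_compute = sum(c for c, _ in parsed)
    total_memory = sum(m for _, m in parsed)
    return total_compute <= MAX_COMPUTE_SLICES and total_memory <= MAX_MEMORY_GB


print(within_budget(["1g.10gb"] * 7))          # seven small instances
print(within_budget(["3g.40gb", "4g.40gb"]))   # two large instances
print(within_budget(["4g.40gb", "4g.40gb"]))   # needs eight compute slices
```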

Thermal Management, Power Delivery, and Data Center Efficiency

The 500W power rating of the Nvidia 699-2G506-0212-320 A100 80GB GPU marks it as a high-power accelerator that needs matching power delivery and cooling. The SXM4 module design improves heat dissipation, which supports sustained performance under intensive, long-running workloads. In systems engineered with adequate cooling infrastructure, this reduces the risk of throttling and supports continuous acceleration.

Data centers deploying A100 GPUs often observe enhanced power efficiency due to the accelerator’s advanced design, which maximizes output per watt across both AI and HPC tasks. By consolidating workloads onto fewer accelerators without decreasing performance, organizations are able to reduce energy consumption while supporting larger-than-ever computational challenges.

Enterprise Deployment Scenarios

The Nvidia A100 GPU integrates seamlessly into enterprise data centers, research laboratories, cloud platforms, and advanced analytics facilities. Its incredible computational density makes it ideal for large-scale AI frameworks such as deep learning recommendation engines, generative AI development, data-mining processes, and medical imaging analysis. In environments where reliability, repeatability, and consistent throughput are essential, the A100 becomes the accelerator of choice.

Manufacturing, finance, pharmaceuticals, defense, and energy sectors rely heavily on large GPU-accelerated clusters to support simulations, predictive maintenance models, and neural networks that require massive input processing. The A100’s architecture ensures that teams can push the boundaries of innovation while maintaining dependable performance across production workloads.

NVLink and NVSwitch Connectivity Advantages

For multi-GPU configurations, NVLink and NVSwitch provide a direct performance boost by enabling GPUs to exchange data with minimal latency and significantly higher bandwidth compared to PCIe-only systems. This interconnect architecture ensures that large models spanning multiple accelerators operate efficiently without fragmentation or unnecessary overhead.

With the A100 80GB GPU, NVLink connectivity allows applications involving multi-billion parameter models or large-scale inference platforms to maintain smooth data synchronization. NVSwitch extends these capabilities further within high-density servers, giving compute nodes a wide bandwidth network for GPU-to-GPU communication and enabling near-linear scaling in many complex applications.

Performance Optimization in AI Training

Stable interconnect bandwidth enables training frameworks such as TensorFlow, PyTorch, and JAX to operate at maximum efficiency in multi-GPU environments. The A100 architecture benefits distributed data parallelism and model parallelism, reducing communication time between nodes and accelerating overall training throughput.

This advantage is particularly noticeable in transformer architectures, recommendation systems, and advanced reinforcement learning scenarios, where synchronized updates and constant parameter exchanges are essential.
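The effect of interconnect bandwidth on synchronized updates can be estimated with the standard ring all-reduce cost model, in which each of N GPUs sends and receives about 2(N-1)/N times the gradient size per step. The sketch below compares two link speeds. The per-direction bandwidths are assumptions: 300 GB/s treats the listed 600 GB/s NVLink figure as a bidirectional total, and 32 GB/s is an approximate figure for PCIe 4.0 x16. Latency and protocol overhead are ignored, and the 10 GB payload is a hypothetical example.

```python
def ring_allreduce_seconds(payload_gb: float, num_gpus: int, link_gb_s: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce.

    Each GPU transfers 2 * (N - 1) / N times the payload over its link.
    Ignores latency, protocol overhead, and compute/communication overlap.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize with a single GPU
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / link_gb_s


GRADIENT_GB = 10.0    # hypothetical gradient payload per step
NVLINK_GB_S = 300.0   # assumption: half of the listed 600 GB/s, per direction
PCIE4_GB_S = 32.0     # assumption: approximate PCIe 4.0 x16, per direction

nvlink_time = ring_allreduce_seconds(GRADIENT_GB, 8, NVLINK_GB_S)
pcie_time = ring_allreduce_seconds(GRADIENT_GB, 8, PCIE4_GB_S)

print(f"NVLink: {nvlink_time * 1000:.1f} ms per all-reduce")
print(f"PCIe:   {pcie_time * 1000:.1f} ms per all-reduce")
```

Under these assumptions the per-step synchronization cost differs by roughly the ratio of the two link bandwidths, which is why interconnect speed matters most for models with frequent, large parameter exchanges.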

Operational Reliability and Compute Precision Enhancements

As enterprise computing evolves, workload reliability plays an increasingly important role. The A100 80GB GPU supports this with ECC-protected memory and consistent compute behavior across both low-level numeric operations and high-level modeling tasks. These reliability features support mission-critical workloads across data center environments.

Compute precision also benefits from the TF32 format introduced with the A100 generation. TF32 keeps the 8-bit exponent of FP32, and therefore its numeric range, while using the 10-bit mantissa of FP16, which lets Tensor Cores process it much faster than full FP32. This hybrid format improves training speed with little effect on result quality for most deep learning workloads, making it well suited to research environments seeking both fast iteration cycles and dependable model convergence.
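The effect of the TF32 format on a value can be illustrated in plain Python by taking an FP32 bit pattern and clearing the low 13 of its 23 mantissa bits, leaving the 10 mantissa bits that TF32 keeps. This is a minimal sketch that truncates toward zero for simplicity. Actual hardware rounding behavior may differ, and the function name is illustrative only.

```python
import struct


def to_tf32_truncate(x: float) -> float:
    """Approximate TF32 by truncating an FP32 value to 10 mantissa bits.

    Keeps the sign bit and the 8-bit exponent, clears the low 13 mantissa bits.
    Truncation is a simplification of the hardware's rounding.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # FP32 bit pattern
    bits &= 0xFFFFE000                                   # drop low 13 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# The smallest step above 1.0 that survives is 2**-10; finer detail is lost.
print(to_tf32_truncate(1 + 2**-10) == 1 + 2**-10)
print(to_tf32_truncate(1 + 2**-11) == 1.0)

# The FP32 exponent range is preserved, so large values remain representable
# (FP16, by contrast, cannot represent values above 65504).
print(to_tf32_truncate(1e30))
```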

Versatility in Software Stacks and Developer Ecosystems

Developers working with the Nvidia A100 gain access to a comprehensive ecosystem of tools through the CUDA platform, cuDNN libraries, Nvidia AI Enterprise software, and other frameworks optimized for Tensor Core operations. This extensive support ensures rapid deployment of AI pipelines and maximizes the accelerator’s capability across industries.

Software optimizations extend into numerous domains including data science workflows, distributed training platforms, GPU-accelerated databases, and simulation engines. This broad ecosystem empowers organizations to align the A100 hardware with specialized applications tailored to unique research or business objectives.

Server Compatibility and Infrastructure Planning

The Nvidia 699-2G506-0212-320 A100 Tensor Core 80GB 500W SXM4 GPU is compatible with a wide range of enterprise-grade servers specifically designed to support SXM4 modules. These infrastructures provide enhanced thermal dissipation, robust power delivery subsystems, and optimized internal layout structures to support high-density GPU installations.

When deploying A100 GPUs, organizations often integrate nodes with multiple accelerators operating in parallel to handle concurrent workflows. Infrastructure planners benefit from the GPU’s predictable performance characteristics, helping maintain balanced compute environments where workloads do not exceed thermal or power limitations. This engineering consistency also ensures dependable multi-node scaling within GPU-enhanced clusters.

AI-Driven Business Insights and Data Processing

Enterprise AI continues to expand across industries, and the A100 GPU serves as a foundational tool for extracting insights from enormous datasets. Its acceleration capabilities enable faster analytics, more powerful data modeling, and advanced inference methods that provide organizations with strategic advantages.

Whether processing real-time data streams, performing predictive analysis, or powering recommendation systems, the A100 handles data at speeds that dramatically enhance decision-making workflows. The combination of memory capacity, compute density, and interconnect performance ensures that businesses stay competitive in fast-evolving markets.