699-2H403-0201-701 Nvidia Tesla P100 SXM2 16GB Computing Graphics Card.

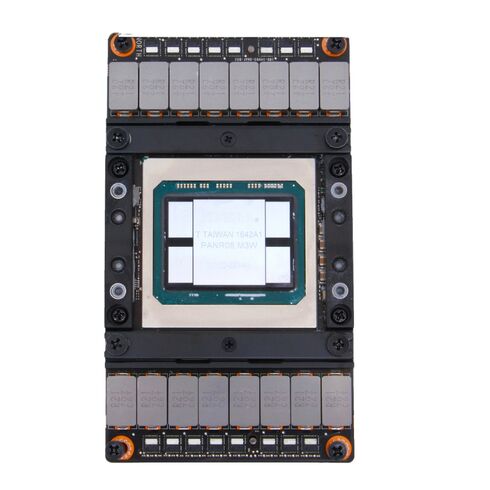

Nvidia 699-2H403-0201-701 Tesla P100 SXM2 16GB Computing Graphics Card Overview

The Nvidia Tesla P100 SXM2 16GB is a high-performance computing graphics card designed for data centers, AI workloads, and scientific simulations. Built on the powerful Pascal GP100 GPU architecture, this GPU delivers exceptional computation efficiency and bandwidth for demanding applications.

Key Features of Nvidia 699-2H403-0201-701 Tesla P100

- Powered by 3584 CUDA® cores for parallel processing

- Double-precision performance up to 5.3 TeraFLOPS

- Single-precision performance up to 10.6 TeraFLOPS

- Half-precision performance up to 21.2 TeraFLOPS

- Equipped with 16GB HBM2 CoWoS memory for ultra-fast data access

- Memory bandwidth of 732 GB/s for seamless computation

- High-speed NVLink interconnect for multi-GPU communication

- Maximum power consumption of 300 W with passive thermal design

- Error-correcting code (ECC) support without performance compromise

Technical Specifications

GPU Architecture and Cores

The Nvidia 699-2H403-0201-701 Tesla P100 uses the Nvidia Pascal architecture with 3584 CUDA cores. This allows for high-throughput parallel processing suitable for scientific computation, AI training, and deep learning models.

Performance Metrics

- Double-Precision: 5.3 TeraFLOPS

- Single-Precision: 10.6 TeraFLOPS

- Half-Precision: 21.2 TeraFLOPS
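
The three peak figures above are related by simple arithmetic. The sketch below shows one way to reproduce them, assuming a 1480 MHz boost clock, two floating-point operations per core per cycle (one fused multiply-add), an FP64 rate of half the FP32 rate, and a packed FP16 rate of twice the FP32 rate. None of these assumptions is stated in this listing, so treat the result as a plausibility check rather than a specification.

```python
# Back-of-the-envelope peak throughput estimate for the Tesla P100 SXM2.
CUDA_CORES = 3584          # from the listing
BOOST_CLOCK_HZ = 1.48e9    # ASSUMPTION: 1480 MHz boost clock (not in the listing)
FLOPS_PER_FMA = 2          # ASSUMPTION: one fused multiply-add = 2 FLOPs per cycle


def peak_tflops(lanes, clock_hz=BOOST_CLOCK_HZ):
    """Theoretical peak in TFLOPS for `lanes` arithmetic lanes."""
    return lanes * FLOPS_PER_FMA * clock_hz / 1e12


fp32_tflops = peak_tflops(CUDA_CORES)        # one FP32 lane per CUDA core
fp64_tflops = peak_tflops(CUDA_CORES // 2)   # ASSUMPTION: FP64 at half the FP32 rate
fp16_tflops = peak_tflops(CUDA_CORES * 2)    # ASSUMPTION: packed FP16 at twice the FP32 rate

if __name__ == "__main__":
    print(f"FP64: {fp64_tflops:.1f} TFLOPS")
    print(f"FP32: {fp32_tflops:.1f} TFLOPS")
    print(f"FP16: {fp16_tflops:.1f} TFLOPS")
```

These are theoretical peaks; sustained throughput in real applications is normally lower.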

Memory and Bandwidth

Featuring 16GB CoWoS HBM2 memory, the Tesla P100 provides ultra-fast access to large datasets. Its impressive 732 GB/s bandwidth ensures smooth computation for large-scale AI and HPC workloads.
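
Whether a workload is limited by the 732 GB/s memory bandwidth or by arithmetic throughput can be estimated with a simple roofline model. The sketch below uses only the peak figures quoted in this listing. The function names and the SAXPY example (2 FLOPs per 12 bytes moved) are illustrative assumptions, not measurements.

```python
# Minimal roofline sketch using the peak figures quoted in this listing.
PEAK_FP32_FLOPS = 10.6e12   # FLOP/s, from the listing
PEAK_FP64_FLOPS = 5.3e12    # FLOP/s, from the listing
MEM_BANDWIDTH = 732e9       # bytes/s, from the listing


def ridge_point(peak_flops, bandwidth=MEM_BANDWIDTH):
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return peak_flops / bandwidth


def attainable_flops(intensity, peak_flops, bandwidth=MEM_BANDWIDTH):
    """Upper bound on FLOP/s for a kernel with the given FLOP/byte intensity."""
    return min(peak_flops, intensity * bandwidth)


if __name__ == "__main__":
    print(f"FP32 ridge point: {ridge_point(PEAK_FP32_FLOPS):.2f} FLOP/byte")
    print(f"FP64 ridge point: {ridge_point(PEAK_FP64_FLOPS):.2f} FLOP/byte")
    # ASSUMPTION: single-precision SAXPY does 2 FLOPs per 12 bytes (2 loads, 1 store).
    saxpy = attainable_flops(2 / 12, PEAK_FP32_FLOPS)
    print(f"SAXPY upper bound: {saxpy / 1e9:.0f} GFLOP/s")
```

Kernels with low arithmetic intensity, such as vector updates, sit far below the ridge point, which is where high-bandwidth HBM2 matters most.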

Connectivity and Interconnect

The GPU leverages Nvidia NVLink technology for high-speed multi-GPU communication, enabling scalable performance in demanding computing environments.

Design and Thermal Management

- Form Factor: SXM2 for server compatibility

- Cooling: Passive thermal solution designed for data center airflow

- Power Efficiency: Optimized 300 W consumption for maximum performance

Supported Compute APIs

The Tesla P100 is compatible with multiple compute APIs:

- Nvidia CUDA – For GPU-accelerated computing

- DirectCompute – For high-performance graphics and computing

- OpenCL™ – Cross-platform parallel programming

- OpenACC – For simplifying GPU acceleration in scientific applications

Applications and Use Cases

Artificial Intelligence and Deep Learning

The Nvidia 699-2H403-0201-701 Tesla P100 excels in AI model training, neural network inference, and deep learning research, providing fast computation and high memory bandwidth.

High-Performance Computing (HPC)

Ideal for supercomputing, simulations, and scientific computations, the Tesla P100 delivers exceptional performance for double-precision and single-precision calculations.

Data Analytics and Large-Scale Processing

- Big data processing with accelerated throughput

- Machine learning model deployment at scale

- Real-time analytics and computational simulations

Package and Condition

This GPU is supplied as a brand-new OEM unit including only the graphics card, making it ideal for integration into high-performance servers and workstations.

Nvidia Tesla P100 SXM2 16GB HBM2 GPU Overview

The Nvidia 699-2H403-0201-701 Tesla P100 SXM2 16GB HBM2 GPU is a cutting-edge graphics processing unit designed for high-performance computing, artificial intelligence, deep learning, and scientific simulations. Built on the Pascal architecture, this GPU delivers exceptional computational power, energy efficiency, and advanced memory capabilities. Its SXM2 form factor ensures optimal integration with high-end servers and data center configurations, providing unmatched performance for demanding workloads.

High-Bandwidth Memory (HBM2) Technology

The Nvidia 699-2H403-0201-701 Tesla P100 utilizes 16GB of HBM2 memory, which significantly enhances memory bandwidth and overall data throughput. Unlike traditional GDDR5 memory, HBM2 enables lower latency, higher energy efficiency, and a compact memory footprint. This allows the GPU to handle massive datasets efficiently, making it ideal for applications in deep learning, large-scale simulations, and data analytics.

Memory Bandwidth and Performance

- Up to 732 GB/s memory bandwidth for faster data transfer.

- Optimized for parallel processing with multiple computing cores.

- Supports high-speed NVLink connections for GPU-to-GPU communication.

These features make the Tesla P100 a powerful solution for scientific computations, artificial intelligence training, and enterprise-level data processing tasks.

Pascal Architecture and Computational Power

The Nvidia 699-2H403-0201-701 Tesla P100 SXM2 GPU is built on Nvidia’s Pascal architecture, which introduces enhanced CUDA cores, improved instruction scheduling, and superior energy efficiency. The Pascal design provides robust double-precision floating-point performance, making it suitable for HPC (High-Performance Computing) applications that demand precision and speed.

CUDA Cores and FP64 Performance

- 3584 CUDA cores for massive parallel processing capabilities.

- Up to 5.3 TFLOPS of double-precision (FP64) performance.

- Single-precision (FP32) performance reaching 10.6 TFLOPS for AI workloads.

This ensures that the Tesla P100 can accelerate machine learning models, complex simulations, and data-intensive computations effectively.

NVLink Interconnect Technology

The Nvidia 699-2H403-0201-701 Tesla P100 SXM2 is equipped with NVLink technology, providing a high-bandwidth interconnect between multiple GPUs. NVLink enables efficient scaling in multi-GPU configurations, offering significantly higher data transfer speeds compared to traditional PCIe connections.

Benefits of NVLink

- High-speed GPU-to-GPU communication at up to 160 GB/s aggregate bidirectional bandwidth (four NVLink links at 40 GB/s each).

- Improved multi-GPU scaling for large AI and HPC clusters.

- Reduced latency and better data synchronization across GPUs.

NVLink technology makes the Tesla P100 an excellent choice for deep learning training, multi-node simulations, and enterprise AI infrastructure.
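
To give a rough sense of the difference between NVLink and PCIe, the sketch below estimates the ideal time to move a buffer between two GPUs. It assumes four NVLink links at 20 GB/s per direction each and roughly 16 GB/s per direction for a PCIe 3.0 x16 slot. Both figures are assumptions that do not come from this listing, and the model ignores latency and protocol overhead.

```python
# Ideal one-way transfer time between two GPUs (peak bandwidth only).
NVLINK_LINKS = 4                    # ASSUMPTION: links available per GPU
NVLINK_GB_S_PER_LINK = 20.0         # ASSUMPTION: GB/s per link, per direction
PCIE3_X16_GB_S = 16.0               # ASSUMPTION: approximate PCIe 3.0 x16, per direction


def transfer_ms(size_gb, bandwidth_gb_s):
    """Milliseconds needed to move `size_gb` gigabytes at `bandwidth_gb_s` GB/s."""
    return size_gb * 1000.0 / bandwidth_gb_s


nvlink_gb_s = NVLINK_LINKS * NVLINK_GB_S_PER_LINK   # aggregate, one direction

if __name__ == "__main__":
    size_gb = 1.0   # hypothetical 1 GB buffer, e.g. a gradient exchange
    print(f"NVLink: {transfer_ms(size_gb, nvlink_gb_s):.1f} ms")
    print(f"PCIe:   {transfer_ms(size_gb, PCIE3_X16_GB_S):.1f} ms")
```

Actual transfer times depend on how many links connect a given GPU pair in the server topology, so measured numbers can differ noticeably from this estimate.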

Enterprise and Data Center Applications

The Tesla P100 SXM2 is specifically designed for enterprise-grade data centers and cloud computing environments. Its compact SXM2 form factor allows dense GPU deployments, which is crucial for optimizing space and power consumption in server racks.

Deep Learning and AI Workloads

With 16GB of HBM2 memory and thousands of CUDA cores, the Tesla P100 excels at accelerating AI workloads, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and natural language processing (NLP) models. Its FP16 and FP32 mixed-precision computing capabilities significantly reduce training times for large datasets.
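
Mixed-precision training typically keeps accumulations in FP32 even when values are stored in FP16. The sketch below illustrates why, using only the Python standard library to emulate FP16 and FP32 rounding on the CPU. It is a numerical illustration with hypothetical helper names, not code that runs on the GPU or an excerpt from any framework.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def accumulate(values, rounder):
    """Sum `values`, rounding the running total after every addition."""
    total = 0.0
    for v in values:
        total = rounder(total + v)
    return total


# 10,000 small updates stored in FP16; their exact sum is close to 1.0.
updates = [to_fp16(1e-4)] * 10000
fp16_sum = accumulate(updates, to_fp16)   # running total kept in FP16
fp32_sum = accumulate(updates, to_fp32)   # running total kept in FP32

if __name__ == "__main__":
    print(f"FP16 accumulator: {fp16_sum}")
    print(f"FP32 accumulator: {fp32_sum}")
```

The FP16 accumulator stalls well below 1.0 once each update becomes smaller than half the spacing between adjacent FP16 values, while the FP32 accumulator stays close to the expected sum.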

Training Large AI Models

- Supports TensorFlow, PyTorch, and other popular AI frameworks.

- Enables faster iteration and model experimentation.

- Optimized for multi-GPU training clusters using NVLink interconnects.

High-Performance Computing (HPC)

The Tesla P100 is highly suitable for scientific simulations, weather modeling, molecular dynamics, and financial modeling. Its FP64 double-precision capabilities ensure high accuracy in calculations, while its parallel processing power accelerates computations that would take hours on conventional CPUs.

Simulation and Research Applications

- Fluid dynamics simulations for engineering and physics research.

- Climate and weather prediction models requiring extensive computational power.

- Computational chemistry and genomics applications.

Energy Efficiency and Thermal Management

Nvidia designed the Tesla P100 SXM2 with energy efficiency in mind. The Pascal architecture optimizes power consumption while maintaining peak performance, making it suitable for high-density data center deployments where power efficiency is critical.

Cooling and Thermal Design

The SXM2 module incorporates advanced thermal solutions to ensure reliable operation under heavy computational loads. Its cooling design allows servers to maintain stable temperatures, reduce the risk of thermal throttling, and prolong the lifespan of the GPU.

Power Efficiency Highlights

- Maximum power consumption of 300 W per GPU.

- Optimized for server racks with efficient airflow and cooling.

- Supports dynamic power management for reduced energy costs.
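
The power figure can be turned into rough efficiency and energy estimates. The sketch below uses the 300 W and peak-throughput numbers quoted in this listing. The electricity price is a hypothetical placeholder, and the calculation assumes the GPU draws 300 W continuously, which overstates consumption for lightly loaded systems.

```python
# Rough efficiency and energy estimates from the listed figures.
POWER_W = 300.0               # from the listing
PEAK_FP64_TFLOPS = 5.3        # from the listing
PEAK_FP32_TFLOPS = 10.6       # from the listing
PRICE_PER_KWH = 0.12          # HYPOTHETICAL electricity price, in USD


def gflops_per_watt(tflops, watts=POWER_W):
    """Peak GFLOPS delivered per watt of board power."""
    return tflops * 1000.0 / watts


def daily_energy_kwh(watts=POWER_W, hours=24.0):
    """Energy used in kWh when drawing `watts` for `hours` hours."""
    return watts * hours / 1000.0


if __name__ == "__main__":
    print(f"FP64: {gflops_per_watt(PEAK_FP64_TFLOPS):.1f} GFLOPS/W")
    print(f"FP32: {gflops_per_watt(PEAK_FP32_TFLOPS):.1f} GFLOPS/W")
    kwh = daily_energy_kwh()
    print(f"Energy per day at full load: {kwh:.1f} kWh")
    print(f"Cost per day at the assumed price: ${kwh * PRICE_PER_KWH:.2f}")
```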

Compatibility and Integration

The Tesla P100 SXM2 is designed for server baseboards with SXM2 sockets rather than standard PCIe expansion slots, so check that the target server supports the SXM2 form factor. Its PCIe and NVLink support ensures compatibility with multi-GPU setups and enterprise data center environments.

Supported Platforms

- High-performance computing clusters with Nvidia-supported drivers.

- Cloud AI infrastructure requiring scalable GPU performance.

- Enterprise servers and workstations optimized for Pascal-based GPUs.

Software and Driver Ecosystem

Nvidia provides extensive software support for the Tesla P100, including CUDA libraries, cuDNN, and Nvidia HPC SDK. These tools allow developers to fully leverage the GPU’s computational capabilities and optimize workloads for maximum efficiency.

Reliability and Enterprise-Grade Features

The Tesla P100 SXM2 includes features that enhance reliability and stability in demanding computing environments. ECC (Error-Correcting Code) memory ensures data integrity, while robust hardware design minimizes downtime in critical applications.

Enterprise Advantages

- ECC memory to prevent data corruption during intensive computations.

- Continuous operation in 24/7 data center environments.

- Hardware-level monitoring and diagnostics for proactive maintenance.
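
One common way to read basic health data is the `nvidia-smi` command-line tool that ships with the Nvidia driver. The sketch below queries a few fields and parses the CSV output. It assumes `nvidia-smi` is installed and on the PATH, and the helper names (`parse_gpu_line`, `query_gpus`) are hypothetical, chosen only for this example.

```python
import subprocess

# Fields requested from nvidia-smi, in the order they are parsed below.
QUERY_FIELDS = ["name", "temperature.gpu", "power.draw", "memory.used", "memory.total"]


def _to_float(text):
    """Convert a field to float, or None if the driver reports it as unavailable."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_gpu_line(line):
    """Parse one CSV line produced with --format=csv,noheader,nounits."""
    parts = [p.strip() for p in line.split(",")]
    return {
        "name": parts[0],
        "temperature_c": _to_float(parts[1]),
        "power_w": _to_float(parts[2]),
        "memory_used_mib": _to_float(parts[3]),
        "memory_total_mib": _to_float(parts[4]),
    }


def query_gpus():
    """Run nvidia-smi and return one dict per GPU (requires the Nvidia driver)."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return [parse_gpu_line(line) for line in out.splitlines() if line.strip()]


if __name__ == "__main__":
    for gpu in query_gpus():
        print(gpu)
```

A monitoring job could call `query_gpus()` periodically and raise an alert when temperature or power readings move outside the range expected for the server.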

Use in Mission-Critical Environments

The Tesla P100 is trusted by research institutions, AI startups, and enterprise organizations where computational accuracy, reliability, and scalability are essential. Its robust design ensures long-term operational stability for mission-critical workloads.

Scalability and Multi-GPU Configurations

The Tesla P100 SXM2 is engineered for multi-GPU scalability, leveraging NVLink to connect multiple GPUs efficiently. This allows data centers and HPC clusters to scale performance close to linearly for workloads that parallelize well, meeting the growing demands of AI, deep learning, and scientific research applications.
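
How closely a multi-GPU system approaches ideal scaling depends on how much of the workload can run in parallel. The sketch below applies Amdahl's law as a simple upper-bound model. The 95% parallel fraction is a hypothetical value chosen for illustration, and the model ignores communication cost.

```python
def amdahl_speedup(parallel_fraction, n_gpus):
    """Upper bound on speedup when `parallel_fraction` of the work scales across GPUs."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if n_gpus < 1:
        raise ValueError("n_gpus must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_gpus)


if __name__ == "__main__":
    # HYPOTHETICAL workload: 95% of the runtime parallelizes across GPUs.
    for n in (1, 2, 4, 8):
        print(f"{n} GPU(s): {amdahl_speedup(0.95, n):.2f}x")
```

Under this assumption, eight GPUs give a little under a 6x speedup rather than 8x, which is why reducing serial work and communication overhead matters as the GPU count grows.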

Building High-Performance Clusters

- Combine multiple Tesla P100 GPUs for accelerated AI model training.

- Leverage NVLink connections between GPUs to maximize inter-GPU bandwidth.

- Optimize workloads for distributed computing across multiple nodes.

Advantages in AI Research

Researchers can train larger models with faster iteration cycles, utilize mixed-precision training, and efficiently distribute workloads across multiple GPUs. The Tesla P100's architecture ensures that scaling does not compromise computational accuracy or performance.