900-2G133-00A0-000 Nvidia 48GB GDDR6 Passive GPU

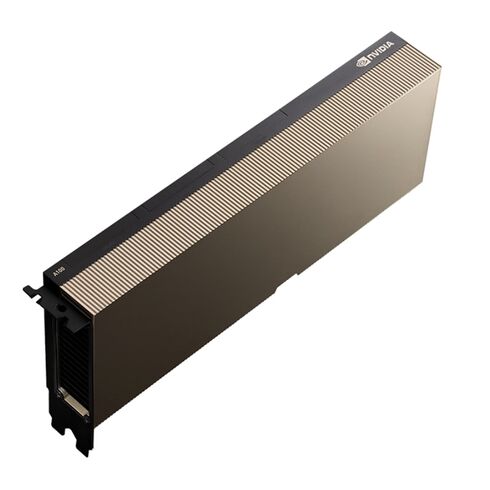

Highlights of Nvidia 900-2G133-00A0-000 L20 48GB GDDR6 GPU

The NVIDIA L20 (part number 900-2G133-00A0-000) is a 48GB GDDR6 data center GPU built for AI inference, machine learning, and simulation workloads. Its passive, dual-slot design relies on server chassis airflow for cooling, which suits it to rack-mounted systems running compute-intensive jobs.

General Information

- Manufacturer: Nvidia

- Part Number: 900-2G133-00A0-000 (model L20)

- Product Type: Internal Graphics Card

- Sub Type: 48GB GDDR6 PCIe 4.0

Technical Specifications

- Interface Type: PCI Express 4.0 x16

- GPU Architecture: NVIDIA Ada Lovelace

- Cooling Type: Passive dual-slot heat sink

- NVLink Support: No (multi-GPU communication runs over PCIe 4.0)

- Default Power (TDP): 275W

- Thermal Design: Optimized passive cooling for server environments

Performance & Computing Power

- GPU Base Clock: 1065 MHz

- Memory Clock Speed: 1512 MHz

- Memory Capacity: 48GB GDDR6

- Memory Bus Width: 384 bits

- FP64 Performance: 19.5 TFLOPS

- FP32 Performance: 59.8 TFLOPS

- FP16 Performance (Tensor Core): 119.5 TFLOPS

Reliability and Design

- Engineered for 24/7 enterprise workloads

- Supports multi-GPU configurations in compatible server systems

- Ideal for AI inference, HPC workloads, and machine learning tasks

Compatibility

- Dell PowerEdge R750

- Dell PowerEdge R750xa

- Dell PowerEdge R7525

- Dell PowerEdge R740

- Dell PowerEdge R740xd

- Dell PowerEdge R940xa

- Dell PowerEdge R840

- Dell PowerEdge C6525

- Dell PowerEdge R6515

Nvidia 900-2G133-00A0-000 L20 48GB GDDR6 Passive 2x Slot 275W PCIe 4.0 x16 Graphics Card Overview

The Nvidia 900-2G133-00A0-000 L20 is a 48GB GDDR6, passive, dual-slot, 275W, PCIe 4.0 x16 graphics card designed for artificial intelligence inference, machine learning, high-performance computing, and data center workloads. As part of Nvidia’s data center GPU lineup, the L20 accelerates enterprise-scale AI applications, rendering, and simulation. It pairs the Ada Lovelace architecture with 48GB of GDDR6 memory for computationally demanding environments. The passive cooling design and dual-slot form factor suit rack-mounted servers, where chassis airflow cools the card and several GPUs can share one system.

Advanced Nvidia Architecture for Data Center Acceleration

The Nvidia L20 GPU is built on the Ada Lovelace architecture and targets large-scale inference, data processing, and rendering workloads. The architecture combines thousands of CUDA cores with fourth-generation Tensor cores and third-generation RT cores. A PCIe 4.0 x16 interface links the GPU to the host CPU, which helps keep data transfers from becoming the bottleneck in demanding applications.

Next-Generation CUDA Core Architecture

The L20 GPU is equipped with a massive array of CUDA cores optimized for parallel computing. These cores enable high-speed execution of floating-point and integer operations simultaneously, allowing faster scientific computations, AI model execution, and GPU-based simulations. CUDA technology remains at the heart of Nvidia’s GPU ecosystem, enabling developers to utilize the L20 for HPC, deep learning, and visualization applications efficiently.

Enhanced Tensor Core Performance

The inclusion of advanced Tensor cores significantly boosts performance for AI inferencing and deep learning workloads. These specialized cores accelerate mixed-precision matrix operations, crucial for neural network training and inferencing at scale. The L20 GPU supports FP32, FP16, and INT8 precision, ensuring an optimal balance between computational accuracy and performance throughput across AI models.

Integrated RT Cores for Real-Time Rendering

The Nvidia L20 features dedicated RT cores for ray tracing acceleration, providing exceptional rendering performance for visualization, simulation, and 3D design applications. These cores enable physically accurate light simulations, reflections, and shadows, enhancing realism for industries such as architecture, media production, and digital twin modeling.

Massive 48GB GDDR6 Memory for Enterprise Workloads

With 48GB of high-speed GDDR6 memory, the Nvidia 900-2G133-00A0-000 L20 offers ample capacity for complex workloads. This large memory configuration lets users keep large datasets, real-time analytics working sets, and high-resolution simulations resident on the GPU instead of repeatedly swapping data over the PCIe bus.

High-Bandwidth 384-Bit Memory Interface

The L20 GPU features a 384-bit memory interface, offering extremely high bandwidth for fast data movement between the GPU cores and memory. This allows consistent throughput in applications involving large data sets, such as training large AI models, real-time rendering, and scientific computation.
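As a rough illustration of what a 384-bit interface means for throughput, the sketch below computes peak theoretical memory bandwidth from bus width and per-pin data rate. The 384-bit width comes from this listing; the 18 Gbps per-pin rate is an assumption and is not stated here, so treat the result as an estimate and not a published specification.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) * per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


BUS_WIDTH_BITS = 384    # from the listing
DATA_RATE_GBPS = 18.0   # assumption: per-pin GDDR6 data rate, not stated in the listing

bandwidth = memory_bandwidth_gbs(BUS_WIDTH_BITS, DATA_RATE_GBPS)
print(f"Peak theoretical bandwidth: {bandwidth:.0f} GB/s")
```

Sustained bandwidth in real applications is lower than this theoretical peak.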

GDDR6 Memory Efficiency and Speed

The GDDR6 memory in the Nvidia L20 ensures excellent power efficiency and speed. It operates at high frequencies to deliver increased data bandwidth while maintaining thermal stability, essential for continuous use in data centers and HPC clusters.

Memory for Multi-Tasking

Nvidia’s advanced memory management architecture intelligently distributes memory resources across concurrent tasks. Whether processing AI inference models, performing scientific simulations, or rendering visual content, the L20 ensures balanced resource allocation to minimize latency and maximize performance.

PCIe 4.0 x16 Interface for Ultra-Fast Data Transfer

The Nvidia L20 utilizes the PCIe 4.0 x16 interface, which doubles the data bandwidth of PCIe 3.0, providing faster communication between the GPU and the system processor. This enhanced connectivity minimizes bottlenecks in data transfer, allowing workloads like data analytics, real-time simulations, and AI inferencing to run efficiently.
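The doubling can be checked with a short calculation. This sketch uses the per-lane transfer rates of PCIe 3.0 (8 GT/s) and PCIe 4.0 (16 GT/s) together with the 128b/130b line encoding both generations share; the function name is illustrative only.

```python
def pcie_bandwidth_gbs(transfer_rate_gts: float, lanes: int) -> float:
    """Usable bandwidth per direction in GB/s for a 128b/130b-encoded PCIe link."""
    encoding_efficiency = 128 / 130  # 128 payload bits per 130 bits on the wire
    return transfer_rate_gts * lanes * encoding_efficiency / 8


gen3 = pcie_bandwidth_gbs(8.0, 16)    # PCIe 3.0 x16
gen4 = pcie_bandwidth_gbs(16.0, 16)   # PCIe 4.0 x16

print(f"PCIe 3.0 x16: {gen3:.2f} GB/s per direction")
print(f"PCIe 4.0 x16: {gen4:.2f} GB/s per direction")
print(f"Ratio: {gen4 / gen3:.1f}x")
```

These figures are link-level maximums per direction; protocol overhead reduces the throughput an application actually sees.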

Next-Generation Platforms

The L20 is compatible with modern enterprise platforms that leverage PCIe 4.0 technology. It can be easily integrated into servers, workstations, and cloud systems built by vendors such as Dell, HPE, and Lenovo, providing scalable acceleration across diverse workloads.

Backward Compatibility for Legacy Systems

Despite being a PCIe 4.0 GPU, the L20 maintains backward compatibility with PCIe 3.0 slots, where it runs at PCIe 3.0 link speeds. This allows flexible deployment in existing infrastructures, so organizations can upgrade performance without a complete hardware overhaul.

Parallel Compute Environments

In multi-GPU configurations, the PCIe 4.0 interface allows the L20 to communicate rapidly with other GPUs and CPUs, delivering exceptional scaling performance for cluster-based AI training and deep learning environments.

Deep Learning and Neural Network Training

The L20 GPU can handle neural network training as well as inference. Its large memory pool supports high batch sizes and model parallelism, reducing training time for deep learning frameworks like TensorFlow, PyTorch, and MXNet. The GPU accelerates both forward and backward propagation steps, shortening each training iteration and reducing time-to-insight.
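To give a sense of what fits in 48GB, here is a minimal sketch that estimates the memory taken by model weights at different precisions. The 20% overhead allowance and the 13-billion-parameter example are hypothetical values chosen for illustration. The estimate covers weights only; training also needs memory for gradients, optimizer state, and activations, so real training footprints are considerably larger.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}
GPU_MEMORY_GB = 48  # from the listing, treated here as 48e9 bytes


def weights_gb(num_params: float, precision: str) -> float:
    """Memory needed for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_gpu(num_params: float, precision: str, overhead_fraction: float = 0.2) -> bool:
    """True if weights plus a hypothetical overhead allowance fit in GPU memory."""
    return weights_gb(num_params, precision) * (1 + overhead_fraction) <= GPU_MEMORY_GB


# Hypothetical 13-billion-parameter model
for precision in ("fp32", "fp16", "int8"):
    size = weights_gb(13e9, precision)
    print(f"{precision}: {size:.0f} GB of weights, fits: {fits_on_gpu(13e9, precision)}")
```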

Integration with Nvidia AI Enterprise Software

The GPU supports the Nvidia AI Enterprise software suite, a comprehensive ecosystem of optimized tools and frameworks for AI development and deployment. This integration ensures smooth compatibility with VMware vSphere and other virtualization environments, enabling enterprise-scale AI processing in virtualized data centers.

Rendering Capabilities

Although primarily designed for compute workloads, the Nvidia L20 also provides exceptional visualization performance for professional applications requiring photorealistic rendering and simulation. Its RT cores and CUDA architecture make it suitable for design, engineering, and digital content creation.

Ray-Traced Rendering for Professional Workflows

With hardware-accelerated ray tracing, the L20 allows professionals to visualize complex 3D models with lifelike lighting and textures. Architects, designers, and VFX artists can rely on the GPU to render scenes in real time with unmatched visual fidelity and accuracy.

Professional Applications

The L20 GPU is compatible with leading industry software, including Autodesk Maya, Dassault Systèmes SOLIDWORKS, Siemens NX, and Adobe After Effects. Its certified drivers ensure stable operation and consistent performance in mission-critical creative workflows.

Multi-Display and High-Resolution Support

The GPU supports multiple high-resolution outputs, allowing simultaneous display on 4K or 8K monitors. This enhances workflow efficiency for designers and engineers who require expansive visual workspaces and high-definition rendering capability.

Thermal Dissipation

The L20’s passive heat sink dissipates heat through the server’s existing airflow system, so the card itself carries no fan. With no moving parts on the card, maintenance requirements drop and reliability improves in continuous operation environments.

Power Consumption at 275W

With a thermal design power (TDP) of 275W, the L20 balances high performance with energy efficiency. Its architecture is engineered to maximize performance-per-watt, reducing operational costs while delivering consistent GPU acceleration.

High-Density Deployment

The dual-slot, passive design enables deployment of multiple L20 GPUs in a single server, allowing organizations to scale compute performance without exceeding power or thermal constraints.
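A simple power-budget check shows how many cards a given server might hold. The 275W figure comes from this listing; the power budget, base system draw, and slot count in the example are hypothetical, so substitute values from your server's documentation.

```python
GPU_TDP_WATTS = 275  # from the listing


def max_gpus(power_budget_watts: int, base_system_watts: int, free_dual_slots: int) -> int:
    """Number of GPUs that fit within both the power headroom and the free dual-width slots."""
    headroom = power_budget_watts - base_system_watts
    if headroom <= 0:
        return 0
    return min(headroom // GPU_TDP_WATTS, free_dual_slots)


# Hypothetical server: 2000W budget, 800W for CPUs, memory, and drives, 4 free dual-width slots
print(max_gpus(2000, 800, 4))
# Same server with a 1400W budget
print(max_gpus(1400, 800, 4))
```

This checks power and slot count only; airflow and the server vendor's supported configurations also limit how many GPUs can be installed.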

Nvidia Virtual GPU (vGPU) Technology

Using Nvidia vGPU software, the L20 allows multiple virtual machines to share a single GPU without compromising performance. Each virtual instance can be assigned a dedicated GPU profile, ensuring predictable and secure performance for each user.
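As a rough guide to sizing, the sketch below counts how many virtual machines could share one card if its 48GB frame buffer is divided into equal, fixed-size profiles. The profile sizes shown are hypothetical examples; the profiles actually available depend on the vGPU software release and license.

```python
GPU_FRAME_BUFFER_GB = 48  # from the listing


def vms_per_gpu(profile_gb: int) -> int:
    """VMs that fit on one GPU when every VM gets the same fixed-size profile."""
    if profile_gb <= 0 or profile_gb > GPU_FRAME_BUFFER_GB:
        raise ValueError("profile size must be between 1 and 48 GB")
    return GPU_FRAME_BUFFER_GB // profile_gb


# Hypothetical profile sizes, for illustration only
for profile_gb in (4, 8, 12, 24):
    print(f"{profile_gb} GB profile: up to {vms_per_gpu(profile_gb)} VMs per GPU")
```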

Cloud-Ready Infrastructure

The L20 integrates seamlessly into cloud-based AI and HPC environments. Its scalability makes it ideal for large-scale deployments in cloud service providers and enterprise hybrid data centers.

Secure Multi-Tenant Operations

Advanced resource isolation ensures that workloads from different users remain secure and independent. This is critical for enterprises handling sensitive data across multi-user GPU environments.

Enterprise-Grade Reliability

The Nvidia 900-2G133-00A0-000 L20 GPU is designed for mission-critical enterprise workloads. It includes built-in reliability, availability, and serviceability (RAS) features to maintain uptime and ensure data integrity.

Secure Firmware and Boot

The GPU supports secure boot and firmware validation, protecting systems from unauthorized firmware modifications. This feature maintains system integrity and compliance with enterprise security standards.

Long-Term Stability and Continuous Operation

Designed for 24/7 operation, the L20 GPU offers robust thermal stability and hardware endurance. It’s optimized for sustained performance in demanding environments, making it suitable for continuous AI inference, rendering, and simulation workloads.

Software Ecosystem and Developer Tools

The L20 GPU supports Nvidia’s full suite of software development tools and frameworks, empowering developers to accelerate applications across AI, HPC, and visualization workflows.

Nvidia CUDA and OpenCL Frameworks

The CUDA platform provides developers with access to parallel computing capabilities, enabling GPU acceleration for a wide range of scientific and computational applications. OpenCL compatibility extends support to cross-platform development environments.

Scalability and Multi-GPU Configurations

The Nvidia L20 supports scalability across multiple GPUs, allowing organizations to build GPU clusters that handle massive computational workloads. This scalability is ideal for deep learning training, scientific simulation, and big data processing.

Parallel Processing Across Multiple GPUs

Multiple L20 GPUs installed in the same server can share data-intensive workloads, communicating over the PCIe bus. This setup increases throughput and shortens processing times for large AI models and simulation projects.

Cluster and HPC Environments

The L20 integrates into high-performance computing (HPC) clusters, working with MPI for distributed computing and Kubernetes for container orchestration. This enables efficient resource utilization and high availability across compute nodes.

Flexible Deployment Across Server Architectures

The L20 is compatible with a wide range of server architectures, including rack-mounted systems, blade servers, and cloud nodes. Its standard dual-slot PCIe card form factor and PCIe 4.0 x16 interface allow flexibility in both on-premises and cloud-based deployments.

High Performance Per Watt Ratio

The GPU achieves an exceptional performance-per-watt ratio, maximizing energy efficiency in compute-intensive environments. This efficiency is especially critical for organizations managing large data center infrastructures.

Enterprise Sustainability Goals

By combining efficiency, scalability, and reliability, the Nvidia L20 aligns with enterprise sustainability initiatives. It supports long-term performance without excessive energy demands, contributing to greener IT operations.

Scientific and Engineering Simulations

For computational research, the L20 accelerates molecular modeling, climate prediction, and physics-based simulations. Its precision and processing power make it indispensable in research institutions and laboratories worldwide.