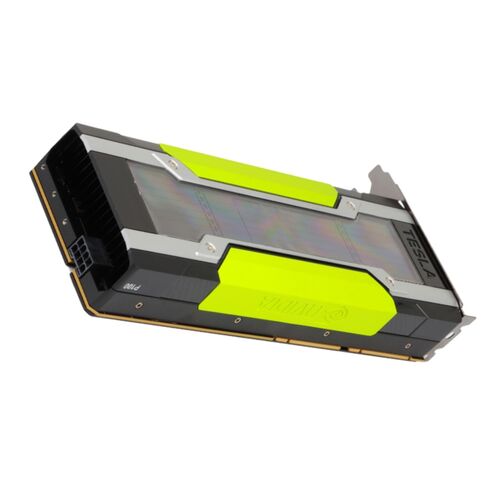

900-2H400-0010-000 Nvidia Tesla P100 Pascal 12GB HBM2 3072-Bit PCI-E 3.0 x16 1x 8-Pin Dual Slot Graphics Card

Product Overview: Nvidia Tesla P100 Pascal 12GB HBM2 GPU

The Nvidia Tesla P100 (Part Number: 900-2H400-0010-000) is a high-performance accelerator card for enterprise workloads, deep learning, and scientific simulation. Built on Nvidia's Pascal architecture, this dual-slot GPU combines 3584 CUDA cores with 12GB of HBM2 memory, delivering high computational throughput and memory bandwidth for data center acceleration.

General Information

- Brand: Nvidia

- Manufacturer Part Number: 900-2H400-0010-000

- Product Type: 12GB HBM2 3072-Bit PCI-E Graphics Card

- Form Factor: Dual Slot

- Power Connector: 1x 8-Pin

Key Specifications & Features

Core Technical Details

- GPU Architecture: Pascal

- Video Memory: 12 GB HBM2 (High Bandwidth Memory 2)

- Memory Interface: 3072-bit ultra-wide bus

- CUDA Cores: 3584 parallel cores

- Bus Interface: PCI-Express 3.0 x16

Performance Highlights

Exceptional Memory Bandwidth

Leveraging HBM2 technology and a 3072-bit memory interface, the Tesla P100 delivers up to 549 GB/s of peak memory bandwidth, which helps it process large-scale datasets and complex AI models efficiently.

Massive Parallel Processing Power

With 3584 CUDA cores, the Pascal-based Tesla P100 accelerates machine learning training, molecular dynamics, weather forecasting, and other compute-intensive workloads.

Optimized for Data Centers

Designed for server-grade environments, this dual-slot GPU installs in a standard PCI-E 3.0 x16 slot, giving it a high-speed host connection in enterprise data centers.

Why Choose the Nvidia Tesla P100

Key Advantages

- High parallel computing throughput

- Advanced Pascal GPU architecture

- High-bandwidth HBM2 memory for data-intensive tasks

- Reliable dual-slot design for server integration

- Enterprise-ready with PCI-E 3.0 x16 interface

Ideal Use Cases

Artificial Intelligence & Deep Learning

- Training neural networks

- Inference acceleration

- Natural language processing

Scientific Research

- Molecular modeling

- Climate and weather simulations

- Physics-based computations

Enterprise Applications

- Big data analytics

- High-performance computing (HPC)

- Cloud-based GPU acceleration

Nvidia 900-2H400-0010-000 Tesla P100 12GB HBM2 GPU

The Nvidia Tesla P100 (900-2H400-0010-000) is a data-center class accelerator built on Nvidia's Pascal architecture. Engineered for compute-heavy workflows, the Tesla P100 pairs 12GB of HBM2 memory on a 3072-bit memory interface with a PCI-Express 3.0 x16 host interface and a single 8-pin auxiliary power connector in a dual-slot card profile. This category page section covers architecture highlights, real-world use cases, deployment considerations, software ecosystem compatibility, performance features, and buying guidance for system builders, researchers, and enterprises seeking proven GPU compute hardware.

Although newer GPU generations exist, the Tesla P100 remains a practical and cost-effective accelerator for established high-performance computing (HPC), scientific simulation, and enterprise AI inference workloads where reliable double-precision and single-precision throughput, combined with large on-board HBM2 memory, deliver consistent value. The P100's broad support in CUDA-based toolchains and its proven stability in data-center environments make it a dependable choice for organizations balancing performance and budget.

Pascal GPU Core and Compute Engines

The Tesla P100's Pascal GPU (GP100) is engineered for parallel floating-point compute. It executes double-precision (FP64) arithmetic at half its single-precision (FP32) rate and supports packed half-precision (FP16) arithmetic at twice the FP32 rate, with efficient scheduling designed for large-scale kernels. The GPU is well-suited for workloads such as finite-element analysis, molecular dynamics, and large matrix algebra.
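The headline throughput figures follow from simple arithmetic on the core count. On GP100, FP64 runs at half the FP32 rate and packed FP16 at twice the FP32 rate. The sketch below assumes a 1303 MHz boost clock for the PCI-E card, which is not stated on this page. It also counts one fused multiply-add (two floating-point operations) per CUDA core per clock. The results are theoretical peaks, not measured performance.

```python
# Sketch: theoretical peak throughput of a Tesla P100 PCI-E card.
# Assumptions (not from this page): 1303 MHz boost clock, and one fused
# multiply-add (2 FLOPs) per CUDA core per clock.

CUDA_CORES = 3584        # FP32 CUDA cores, from the specification list
BOOST_CLOCK_GHZ = 1.303  # assumed boost clock for the PCI-E card


def peak_gflops(cores: int, clock_ghz: float, ratio: float = 1.0) -> float:
    """Peak GFLOP/s = cores x clock (GHz) x 2 FLOPs per FMA x rate vs. FP32."""
    return cores * clock_ghz * 2 * ratio


fp32 = peak_gflops(CUDA_CORES, BOOST_CLOCK_GHZ)       # about 9340 GFLOP/s
fp64 = peak_gflops(CUDA_CORES, BOOST_CLOCK_GHZ, 0.5)  # half rate
fp16 = peak_gflops(CUDA_CORES, BOOST_CLOCK_GHZ, 2.0)  # packed FP16, double rate

for name, value in (("FP32", fp32), ("FP64", fp64), ("FP16", fp16)):
    print(f"{name}: {value / 1000:.1f} TFLOP/s")
```

Keeping the precision ratio as a parameter makes it easy to see how the half-rate FP64 path sets the ceiling for double-precision simulation codes.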

High-Bandwidth Memory (HBM2) — 12GB, 3072-bit Interface

One of the P100's standout features is its HBM2 memory subsystem. With 12GB of stacked HBM2 memory on a 3072-bit bus, the card provides much higher memory bandwidth than same-era GDDR solutions. This bandwidth helps memory-bound workloads such as large-scale graph analytics and high-resolution visualization. The 12GB capacity also lets data-intensive simulations keep working sets that would not fit into smaller GPU memories.
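Peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. This sketch assumes roughly 1.43 Gb/s per pin (715 MHz HBM2, double data rate). That figure is commonly listed for the P100 but is not stated on this page. Sustained bandwidth in real kernels is lower than this peak.

```python
# Sketch: peak memory bandwidth from bus width and per-pin data rate.
# Assumption (not from this page): ~1.43 Gb/s per pin for the P100's HBM2.


def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak GB/s = (bus width / 8) bytes per transfer x Gtransfers per second."""
    return bus_width_bits / 8 * gbps_per_pin


p100_12gb = peak_bandwidth_gbs(3072, 1.43)
print(f"Tesla P100 12GB, 3072-bit: {p100_12gb:.0f} GB/s")  # about 549 GB/s
```

The same formula shows why bus width matters: at a fixed per-pin rate, bandwidth scales linearly with the number of data pins.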

Performance Characteristics and Use Cases

Scientific Computing and FP64 Workloads

The Tesla P100 provides strong double-precision (FP64) throughput relative to consumer GPUs of its era, making it a common choice for simulations that require high numerical precision, such as computational fluid dynamics (CFD), climate modeling, and computational chemistry. These workloads benefit from the P100's balance of compute and memory bandwidth.
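A roofline estimate makes this balance concrete. A kernel is memory-bound when its arithmetic intensity falls below the ratio of peak compute to peak bandwidth. Arithmetic intensity here means FLOPs per byte of memory traffic. The sketch assumes theoretical peaks of about 4670 GFLOP/s FP64 and 549 GB/s; both are derived, not measured. It uses a double-precision vector update (DAXPY) as an illustrative kernel.

```python
# Sketch: simple roofline model for a Tesla P100 PCI-E (12GB).
# Assumed theoretical peaks (derived, not measured): FP64 and HBM2 bandwidth.

PEAK_FP64_GFLOPS = 4670.0
PEAK_BW_GBS = 549.0


def attainable_gflops(flops_per_byte: float) -> float:
    """Roofline bound: min(compute peak, bandwidth x arithmetic intensity)."""
    return min(PEAK_FP64_GFLOPS, PEAK_BW_GBS * flops_per_byte)


# Ridge point: intensity at which a kernel stops being memory-bound.
ridge = PEAK_FP64_GFLOPS / PEAK_BW_GBS  # about 8.5 FLOP/byte

# DAXPY (y = a*x + y) in FP64: 2 FLOPs per element, 24 bytes moved
# (read x, read y, write y), so intensity is 2/24 FLOP/byte.
daxpy = attainable_gflops(2 / 24)

print(f"ridge point: {ridge:.1f} FLOP/byte")
print(f"DAXPY bound: {daxpy:.0f} GFLOP/s (memory-bound)")
```

Kernels to the left of the ridge point, such as stencils and sparse solvers, are limited by HBM2 bandwidth rather than by FP64 units.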

Data Analytics, Databases, and Graph Processing

Memory-bound analytics, such as graph traversal, large-scale joins, and in-memory databases, gain from the P100's wide memory bus. The 12GB of HBM2 allows larger in-GPU datasets, which reduces PCI-E transfer overhead and shortens end-to-end query times when paired with GPU-accelerated data frameworks.
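You can estimate up front whether a dataset can stay resident on the card and what a full host-to-GPU copy costs. The sketch makes three assumptions. First, 12 GB means 12 × 2^30 bytes. Second, it reserves a hypothetical 10% for the CUDA context and framework overhead. Third, it uses the theoretical PCI-E 3.0 x16 rate: 8 GT/s per lane with 128b/130b encoding, about 15.75 GB/s per direction. The helper names are illustrative. Measured transfers are slower, so the times are lower bounds.

```python
# Sketch: residency and transfer-cost estimate for a 12GB P100.
# Assumptions: 12 GB read as 12 GiB; hypothetical 10% reserve; theoretical
# PCI-E 3.0 x16 throughput. fits_on_gpu/transfer_seconds are illustrative.

GPU_MEMORY_BYTES = 12 * 2**30
RESERVE_FRACTION = 0.10  # hypothetical CUDA context / framework overhead
# PCI-E 3.0: 8 GT/s per lane, 128b/130b encoding, 16 lanes, one direction.
PCIE3_X16_BYTES_PER_S = 8e9 * 128 / 130 / 8 * 16  # about 15.75 GB/s


def fits_on_gpu(dataset_bytes: int) -> bool:
    """True if the working set fits after reserving headroom."""
    return dataset_bytes <= GPU_MEMORY_BYTES * (1 - RESERVE_FRACTION)


def transfer_seconds(dataset_bytes: int) -> float:
    """Lower bound on a one-way host-to-GPU copy at the theoretical rate."""
    return dataset_bytes / PCIE3_X16_BYTES_PER_S


for gib in (8, 11, 16):
    size = gib * 2**30
    print(f"{gib} GiB: fits={fits_on_gpu(size)}, "
          f"copy >= {transfer_seconds(size):.2f} s")
```

When a working set fits, the copy is paid once and repeated queries run against HBM2. When it does not fit, each pass may pay the PCI-E cost again.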

Visualization, Rendering, and Simulation Workflows

The Tesla P100's robustness, high memory bandwidth, and thermal reliability make it suitable for server-side rendering, high-precision visualization, and VR content pipelines where deterministic performance is preferred over the newest graphical feature sets provided by gaming GPUs.

Compatibility and Software Ecosystem

CUDA, cuDNN, and Common Frameworks

The P100 (CUDA compute capability 6.0) is supported by Nvidia's CUDA toolkit and is compatible with mainstream AI and HPC libraries such as cuBLAS, cuFFT, cuSPARSE, and cuDNN. Popular frameworks such as TensorFlow, PyTorch, and MXNet have provided builds that target the Pascal architecture, enabling drop-in acceleration for many projects. Confirm that the specific framework and CUDA versions you deploy still include Pascal support.
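The P100 reports compute capability 6.0. A framework build can therefore run on it if the build ships a native cubin for sm_60, or PTX for compute_60 or older that the driver can JIT-compile. The checker below is a hypothetical helper that applies those compatibility rules to an architecture list. `torch.cuda.get_arch_list()` and `torch.cuda.get_device_capability()` are real PyTorch calls; the sketch uses them only when PyTorch is installed.

```python
from typing import Iterable


def build_supports_cc(arch_list: Iterable[str], major: int = 6, minor: int = 0) -> bool:
    """True if a build's arch list can run on compute capability major.minor."""
    for arch in arch_list:
        kind, _, num = arch.partition("_")
        if not num.isdigit():
            continue  # skip entries such as 'sm_90a'
        value = int(num)
        # Native cubin: same major version, equal or lower minor version.
        if kind == "sm" and value // 10 == major and value % 10 <= minor:
            return True
        # PTX can be JIT-compiled for any equal or newer architecture.
        if kind == "compute" and value <= major * 10 + minor:
            return True
    return False


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch not installed; nothing to check")
    else:
        print("build can run on sm_60:", build_supports_cc(torch.cuda.get_arch_list()))
        if torch.cuda.is_available():
            print("device 0 capability:", torch.cuda.get_device_capability(0))
```

Running a check like this before rolling out a new framework version catches builds that have dropped Pascal kernels.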

Multi-GPU and Interconnect Considerations

The Tesla P100 can be deployed in multi-GPU configurations for parallel jobs. Plan PCI-E lane distribution and slot placement for the server platform, because GPU-to-GPU traffic between PCI-E P100 cards travels over PCI-E. Nvidia offered NVLink interconnects for the P100 only on the SXM2 module form factor, not on this PCI-E card. Always verify the SKU and platform compatibility when planning GPU-to-GPU interconnect topologies for high-bandwidth, low-latency communication.
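Before scheduling multi-GPU jobs, it helps to record which card pairs support direct peer-to-peer access. On PCI-E systems the answer depends on slot and root-complex layout; `nvidia-smi topo -m` prints that topology. The sketch below uses a hypothetical helper, `peer_matrix`, and calls the real PyTorch function `torch.cuda.can_device_access_peer` only when PyTorch and GPUs are present.

```python
from typing import Callable, List


def peer_matrix(n: int, can_access: Callable[[int, int], bool]) -> List[List[bool]]:
    """Row i, column j is True if GPU i can access GPU j's memory directly.
    The diagonal is True because a GPU always reaches its own memory."""
    return [[i == j or can_access(i, j) for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    try:
        import torch
        count = torch.cuda.device_count() if torch.cuda.is_available() else 0
    except ImportError:
        count = 0
    if count:
        matrix = peer_matrix(count, torch.cuda.can_device_access_peer)
        for i, row in enumerate(matrix):
            print(f"GPU {i}:", " ".join("P2P" if ok else " - " for ok in row))
    else:
        print("No CUDA devices visible")
```

Pairs without peer access fall back to staging through host memory, so placing tightly coupled ranks on peer-capable pairs usually reduces communication cost.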

Physical, Power, and Thermal Design

Form Factor and Installation

The P100's dual-slot design allows installation into standard server chassis and workstations with two adjacent expansion slots available. Verify clearance for adjacent PCIe cards and the adequacy of chassis airflow. Some vendor-specific variants are full-height, half-length, or server-profile passive-cooled designs intended for blade and dense server environments.

Power Requirements

This card requires one 8-pin auxiliary power connector in addition to PCIe slot power, plus a stable power delivery system; Nvidia rates the Tesla P100 PCIe at 250 W board power. Ensure the host power supply or server power budget can support multiple GPUs plus high-performance CPUs, storage, and memory. Planning for peak power draw under sustained compute loads is critical to avoid thermal throttling or power-trip events.
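A quick budget check helps when sizing power supplies for several cards. This sketch uses the 250 W board power for each P100 PCI-E card. The CPU wattage, the "rest of system" figure, and the 20% headroom are hypothetical placeholders to replace with your platform's numbers.

```python
P100_BOARD_POWER_W = 250  # Nvidia's rated board power for the PCI-E P100


def required_psu_watts(num_gpus: int, cpu_watts: float, other_watts: float,
                       headroom: float = 0.20) -> float:
    """Sustained system draw plus a safety margin (hypothetical default 20%)."""
    sustained = num_gpus * P100_BOARD_POWER_W + cpu_watts + other_watts
    return sustained * (1 + headroom)


# Hypothetical server: 4 GPUs, two 165 W CPUs, 200 W for everything else.
need = required_psu_watts(num_gpus=4, cpu_watts=2 * 165, other_watts=200)
print(f"Plan for at least {need:.0f} W of PSU capacity")
```

The headroom covers transient peaks and keeps power supplies away from their limits under sustained load. Redundant (N+1) configurations need this capacity from the remaining supplies alone.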

Cooling and Airflow Best Practices

Data-center deployments should prioritize directed airflow across the GPU heat sink and memory stacks. Passive-cooled P100s require chassis-level airflow from server fans; active-cooled cards depend on chassis clearance and fan curves. Monitor GPU temperatures and use telemetry to trigger additional cooling or workload redistribution when necessary.
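Temperature telemetry can be collected with `nvidia-smi`. Its `--query-gpu` and `--format=csv,noheader,nounits` options are real. The 80 C alert threshold in this sketch is a hypothetical site-policy value, not an Nvidia limit. Parsing is kept separate from the subprocess call so the logic can be tested without a GPU.

```python
import shutil
import subprocess
from typing import List, Tuple

QUERY = "index,temperature.gpu,power.draw"
ALERT_TEMP_C = 80  # hypothetical site-policy threshold, not an Nvidia limit


def parse_readings(csv_text: str) -> List[Tuple[int, int, float]]:
    """Parse 'index, temperature, power' lines from nvidia-smi CSV output."""
    readings = []
    for line in csv_text.strip().splitlines():
        idx, temp, power = (field.strip() for field in line.split(","))
        try:
            watts = float(power)
        except ValueError:  # e.g. "[N/A]" when power is not reported
            watts = float("nan")
        readings.append((int(idx), int(temp), watts))
    return readings


def hot_gpus(readings: List[Tuple[int, int, float]], limit: int = ALERT_TEMP_C) -> List[int]:
    """Return indices of GPUs at or above the alert temperature."""
    return [idx for idx, temp, _ in readings if temp >= limit]


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found")
    else:
        result = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
            capture_output=True, text=True,
        )
        if result.returncode == 0:
            for idx in hot_gpus(parse_readings(result.stdout)):
                print(f"GPU {idx} at or above {ALERT_TEMP_C} C: add cooling or move work")
```

In production, a monitoring agent would run this on a schedule and feed alerts into the scheduler or facility controls, rather than printing them.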

Procurement, SKU Notes, and Variants

Understanding the 900-2H400-0010-000 SKU

The manufacturer SKU (900-2H400-0010-000) precisely identifies this Tesla P100 configuration for procurement and compatibility checks. When sourcing cards, confirm whether the unit is a blower-style (active) or passive-cooled card, whether it uses a full-profile bracket for servers, and whether any firmware locks tie the card to specific OEM systems.

Firmware Updates and Stability

Keep GPUs on validated firmware and driver stacks to avoid regressions. Many organizations maintain a strict change-control process for driver and firmware updates, particularly when GPUs are part of long-running batch or HPC clusters.
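A change-control process can be backed by an automated drift check. `driver_version` and `vbios_version` are real `nvidia-smi --query-gpu` fields. The allowlist entries below are hypothetical placeholders for the driver and VBIOS pairs your organization has validated, and `unvalidated` is an illustrative helper name.

```python
import shutil
import subprocess
from typing import List, Set, Tuple

QUERY = "index,driver_version,vbios_version"

# Hypothetical placeholders: replace with the (driver, VBIOS) pairs your
# change-control process has actually validated.
VALIDATED: Set[Tuple[str, str]] = {("REPLACE-driver", "REPLACE-vbios")}


def unvalidated(csv_text: str, validated: Set[Tuple[str, str]]) -> List[Tuple[int, str, str]]:
    """Return GPUs whose (driver, VBIOS) pair is not on the allowlist."""
    drift = []
    for line in csv_text.strip().splitlines():
        idx, driver, vbios = (field.strip() for field in line.split(","))
        if (driver, vbios) not in validated:
            drift.append((int(idx), driver, vbios))
    return drift


if __name__ == "__main__":
    if shutil.which("nvidia-smi"):
        result = subprocess.run(
            ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader"],
            capture_output=True, text=True,
        )
        if result.returncode == 0:
            for idx, driver, vbios in unvalidated(result.stdout, VALIDATED):
                print(f"GPU {idx}: driver {driver} / VBIOS {vbios} not validated")
```

Running this check on every node before batch jobs start helps keep long-running clusters on a single validated stack.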

Data Sanitization and Secure Decommissioning

Though GPU memory is volatile, organizations should follow data center decommissioning policies to ensure any residual data in host memory or attached storage is sanitized. For leased or refurbished GPUs, confirm that sellers follow secure wipe processes and provide certificates of erasure when necessary.