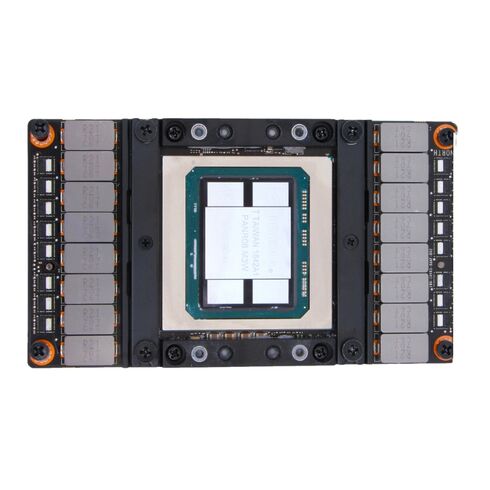

900-2H403-0000-000 Nvidia Tesla P100 Pascal 16GB HBM2 GPU

- Free Ground Shipping

- Min. 6-month Replacement Warranty

- Genuine/Authentic Products

- Easy Return and Exchange

- Different Payment Methods

- Best Price

- We Guarantee Price Matching

- Tax-Exempt Facilities

- 24/7 Live Chat, Phone Support

- Visa, MasterCard, Discover, and Amex

- JCB, Diners Club, UnionPay

- PayPal, ACH/Bank Transfer (11% Off)

- Apple Pay, Amazon Pay, Google Pay

- Buy Now, Pay Later - Affirm, Afterpay

- GOV/EDU/Institutions POs Accepted

- Invoices

- Deliver Anywhere

- Express Delivery in the USA and Worldwide

- Ship to APO/FPO

- For USA - Free Ground Shipping

- Worldwide - from $30

Nvidia Tesla P100 16GB HBM2 GPU

The Nvidia 900-2H403-0000-000 Tesla P100 is a Pascal-generation data center accelerator for high-performance computing. It pairs 3,584 CUDA cores with 16 GB of HBM2 memory to serve highly parallel, bandwidth-bound workloads.

Main Information

- Brand: Nvidia

- Model Number: 900-2H403-0000-000

- Category: Tesla P100 Graphics Accelerator

Technical Specifications

Supported APIs

- OpenACC

- OpenCL

- DirectCompute

Processing Power

CUDA Cores

- 3,584 parallel cores for intensive workloads

Performance Metrics

- Single-Precision: 10.6 TeraFLOPS

- Double-Precision: 5.3 TeraFLOPS
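
The peak figures above follow from simple arithmetic: cores times floating-point operations per cycle times clock speed. The sketch below reproduces them under two assumptions that are not stated on this page: a boost clock of about 1.48 GHz, and an FP64 rate of half the FP32 rate (which matches the 10.6 to 5.3 ratio listed). Treat it as a sanity check on the listed numbers, not as a measurement.

```python
# Theoretical peak throughput = cores x FLOPs per core per cycle x clock.
CUDA_CORES = 3584        # from the spec list on this page
FLOPS_PER_FMA = 2        # one fused multiply-add counts as two floating-point ops
BOOST_CLOCK_GHZ = 1.48   # ASSUMPTION: boost clock, not stated on this page
FP64_RATIO = 0.5         # ASSUMPTION: FP64 runs at half the FP32 rate


def peak_tflops(cores: int, clock_ghz: float, flops_per_cycle: int = FLOPS_PER_FMA) -> float:
    """Return theoretical peak throughput in TFLOPS."""
    return cores * flops_per_cycle * clock_ghz / 1000.0


fp32_tflops = peak_tflops(CUDA_CORES, BOOST_CLOCK_GHZ)
fp64_tflops = fp32_tflops * FP64_RATIO

print(f"FP32 peak: {fp32_tflops:.1f} TFLOPS")
print(f"FP64 peak: {fp64_tflops:.1f} TFLOPS")
```

Real applications reach only a fraction of these peaks, depending on how well the workload keeps every core busy.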

Memory Details

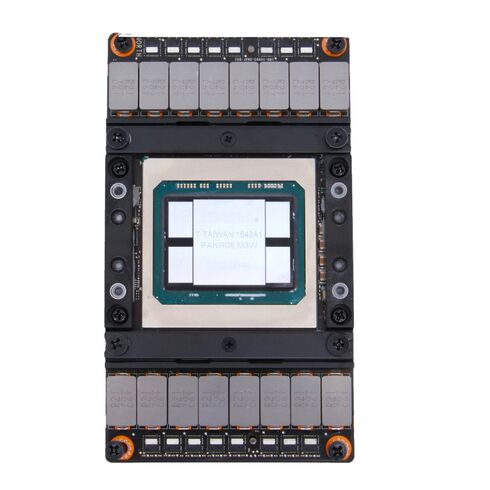

- Capacity: 16 GB CoWoS HBM2

- Bandwidth: 720 GB/s for rapid data throughput

Design & Form Factor

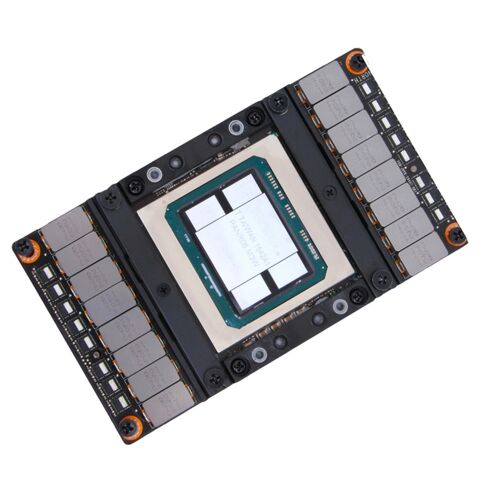

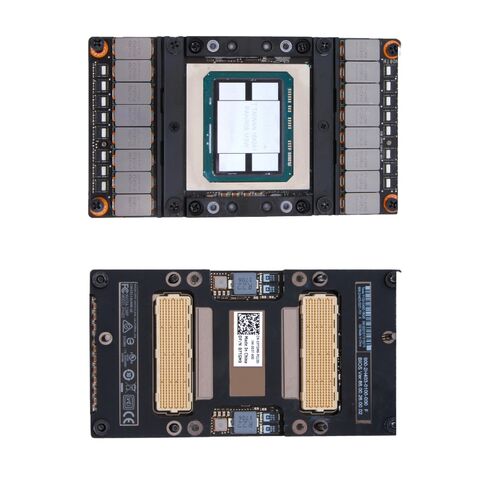

- Form Factor: SXM2 module

- Cooling Solution: Passive, optimized for server airflow

Power Efficiency

- Maximum Consumption: 300 W

Connectivity

- Interconnect: NVIDIA NVLink for high-speed communication

Nvidia Tesla P100 Pascal Series 16GB HBM2 GPU Overview

The Nvidia 900-2H403-0000-000 Tesla P100 is a Pascal-generation accelerator in the SXM2 form factor, with 16 GB of HBM2 memory on a 4096-bit interface and NVLink connectivity. It is a compute module for enterprise, research, and scientific workloads rather than a display graphics card. Tesla P100 GPUs are built for data centers, deep learning frameworks, and simulations that depend on large-scale parallel processing.

Advanced Pascal Architecture

The Tesla P100 is built on Nvidia's Pascal architecture and manufactured on a 16 nm FinFET process, which raises processing speed while reducing energy consumption. Pascal also brings improvements in memory handling, floating-point throughput, and GPU-to-GPU interconnect, all of which matter in large multi-GPU deployments. Typical workloads include artificial intelligence model training, high-performance computing (HPC), and scientific visualization.

GPU Core and Parallel Processing Capabilities

The Tesla P100 has 3,584 CUDA cores that execute many threads in parallel, which suits workloads that can be split into a large number of independent calculations, such as data analysis, simulation, and rendering. The GPU delivers high throughput for both single-precision (FP32) and double-precision (FP64) arithmetic, at up to 10.6 and 5.3 TeraFLOPS respectively. FP64 performance matters most for scientific simulations such as computational fluid dynamics, while FP32 serves machine learning and general compute.

High-Bandwidth Memory Integration

The Tesla P100 integrates 16 GB of HBM2 memory with bandwidth of up to 720 GB/s. HBM2 stacks memory dies vertically on the same package as the GPU, which allows a very wide 4096-bit interface while drawing less power than comparable GDDR5 or GDDR6 configurations. The wide bus raises throughput rather than lowering access latency, so the benefit is greatest for bandwidth-bound deep learning and HPC kernels that stream large datasets.
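
The relationship between the 4096-bit bus and the 720 GB/s figure can be checked with a short calculation. The sketch below derives the per-pin data rate implied by those two numbers and estimates how long one full pass over the 16 GB of memory would take at peak bandwidth. The per-pin rate is inferred from the page's figures rather than quoted from a specification, and 16 GB is treated as a decimal value for simplicity.

```python
BUS_WIDTH_BITS = 4096     # from this page
BANDWIDTH_GB_S = 720.0    # from this page
CAPACITY_GB = 16.0        # from this page, treated as decimal GB (assumption)


def pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate in Gbit/s implied by a total bandwidth and bus width."""
    return bandwidth_gb_s * 8 / bus_width_bits


def bandwidth_gb_s(bus_width_bits: int, pin_rate: float) -> float:
    """Total bandwidth in GB/s for a bus of the given width and per-pin rate."""
    return bus_width_bits * pin_rate / 8


rate = pin_rate_gbps(BANDWIDTH_GB_S, BUS_WIDTH_BITS)
full_sweep_ms = CAPACITY_GB / BANDWIDTH_GB_S * 1000

print(f"Implied per-pin rate: {rate:.3f} Gbit/s")
print(f"One pass over 16 GB at peak bandwidth: {full_sweep_ms:.1f} ms")
```

The per-pin rate is modest; the bandwidth comes from the width of the bus, which is the point of stacking memory next to the GPU.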

NVLINK Interconnect Technology

The Tesla P100 supports NVLink, a high-speed interconnect that lets GPUs exchange data directly at considerably higher bandwidth than a PCIe connection. NVLink makes it practical to split large models and simulations across several GPUs in the same server, because inter-GPU transfers take up a smaller share of each step. This matters most for communication-heavy tasks such as neural network training, where data is exchanged between GPUs on every iteration.
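
To get a feel for the difference, the sketch below compares the ideal time to move a buffer between two GPUs over NVLink and over PCIe. The bandwidth figures are assumptions, not values from this page: 20 GB/s per direction for each first-generation NVLink link, four links per module, and roughly 15.75 GB/s per direction for PCIe 3.0 x16. It also assumes all four links connect the same pair of GPUs, which depends on the server's topology, and it ignores latency and protocol overhead.

```python
# ASSUMPTIONS (not from this page): peak one-direction rates in GB/s.
NVLINK_GB_S_PER_LINK = 20.0   # first-generation NVLink, per link
NVLINK_LINKS = 4              # links per P100 module, all to one peer (topology-dependent)
PCIE3_X16_GB_S = 15.75        # PCIe 3.0 x16


def transfer_ms(size_gb: float, bandwidth_gb_s: float) -> float:
    """Ideal transfer time in milliseconds, ignoring latency and overhead."""
    return size_gb / bandwidth_gb_s * 1000.0


nvlink_total = NVLINK_GB_S_PER_LINK * NVLINK_LINKS
buffer_gb = 1.0  # hypothetical buffer size, e.g. one gradient exchange

nvlink_ms = transfer_ms(buffer_gb, nvlink_total)
pcie_ms = transfer_ms(buffer_gb, PCIE3_X16_GB_S)
speedup = pcie_ms / nvlink_ms

print(f"NVLink: {nvlink_ms:.1f} ms, PCIe: {pcie_ms:.1f} ms, ratio: {speedup:.1f}x")
```

Measured transfer rates are lower than these peaks, and the ratio shrinks when the links are spread across several peer GPUs.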

SXM2 Form Factor and Thermal Efficiency

The SXM2 form factor of the Tesla P100 is designed for data center deployment. Unlike a conventional PCIe card, an SXM2 module mounts directly on the server board, which allows higher power delivery (up to 300 W) and closer thermal integration with the chassis. The module itself is passively cooled: it has no fan and relies on the server's airflow, or on a liquid-cooling loop in systems built for one, to avoid throttling under continuous heavy load in high-density server environments.

Enterprise and Scientific Applications

Within enterprise and scientific domains, the Nvidia Tesla P100 excels in deep learning, artificial intelligence, high-performance computing, and advanced analytics. Researchers use Tesla P100 GPUs for molecular simulations, climate modeling, and genomic analysis, while AI developers leverage its capabilities for training deep neural networks and accelerating inference workloads. The GPU’s architecture enables it to handle complex matrix operations efficiently, which is fundamental for applications in AI and machine learning.

Deep Learning and AI Acceleration

The Tesla P100 SXM2 is well suited to deep learning acceleration. Its high memory bandwidth and parallel throughput speed up training of deep neural networks on large datasets. Frameworks such as TensorFlow, PyTorch, and Caffe can use the GPU's FP16 support for mixed-precision training, which raises throughput while keeping model accuracy close to that of full FP32 training when applied with care. Shorter training times let researchers and data scientists iterate on experiments more quickly.

FP16 and Mixed-Precision Computing

The Tesla P100 supports mixed-precision computing, in which FP16 is used where its reduced range and precision are tolerable and FP32 is kept for numerically sensitive steps such as accumulation and weight updates. This approach speeds up the matrix multiplications and convolutions at the core of deep learning workloads. Pascal does not include the Tensor Cores of later architectures, so the gain comes from its faster FP16 arithmetic, and the stability of the results depends on how the training setup handles FP16's narrower range.
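
The reason for combining FP16 with FP32 can be shown without a GPU. The sketch below simulates rounding in software using Python's standard `struct` module: it adds many small FP16 values to a running total, once with the total rounded to FP16 after every step and once with the total kept in FP32. The values and the helper names (`to_fp16`, `to_fp32`, `accumulate`) are illustrative assumptions; this models the arithmetic only and says nothing about how any particular framework implements it.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def accumulate(values, round_fn, start=1.0):
    """Sum values onto start, rounding the running total after every addition."""
    total = round_fn(start)
    for v in values:
        total = round_fn(total + v)
    return total


# 10,000 small FP16 inputs (hypothetical, e.g. per-step update terms).
updates = [to_fp16(1e-4)] * 10_000

fp16_sum = accumulate(updates, to_fp16)   # FP16 inputs, FP16 accumulator
mixed_sum = accumulate(updates, to_fp32)  # FP16 inputs, FP32 accumulator

print(f"FP16 accumulator: {fp16_sum}")
print(f"FP32 accumulator: {mixed_sum:.4f}")
```

With an FP16 accumulator each small addition is rounded away and the total never moves from 1.0, while the FP32 accumulator ends near 2.0. This is why mixed-precision recipes typically keep accumulations and master weights in FP32.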

Large-Scale Model Training

With 16 GB of HBM2 memory, the Tesla P100 can hold larger batches and larger neural network architectures than GPUs with less memory. Memory capacity is often the limiting factor in applications such as natural language processing, image recognition, and autonomous vehicle development. Models that do not fit on a smaller GPU must be split across devices or trained with reduced batch sizes, so the extra capacity can make training noticeably more efficient for enterprise AI projects and academic research.
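
A rough capacity estimate shows what 16 GB means in practice. The sketch below assumes FP32 training with the Adam optimizer, which needs about 16 bytes per parameter (4 for the weight, 4 for the gradient, 8 for the two optimizer moments), and reserves a configurable share of memory for activations and workspace. Both the per-parameter cost and the 50% reservation are assumptions chosen for illustration, and 16 GB is treated as 16 GiB.

```python
GPU_MEMORY_BYTES = 16 * 1024**3  # ASSUMPTION: 16 GB treated as 16 GiB

# ASSUMPTION: FP32 training with Adam; bytes needed per model parameter.
BYTES_PER_PARAM = {
    "weights_fp32": 4,
    "gradients_fp32": 4,
    "adam_moments_fp32": 8,  # two FP32 moment estimates
}


def max_trainable_params(memory_bytes: int, activation_fraction: float = 0.5) -> int:
    """Estimate how many parameters fit after reserving memory for activations."""
    usable = memory_bytes * (1.0 - activation_fraction)
    return int(usable // sum(BYTES_PER_PARAM.values()))


with_activations = max_trainable_params(GPU_MEMORY_BYTES, activation_fraction=0.5)
upper_bound = max_trainable_params(GPU_MEMORY_BYTES, activation_fraction=0.0)

print(f"About {with_activations / 1e6:.0f} million parameters with half the memory reserved")
print(f"About {upper_bound / 1e9:.2f} billion parameters as an upper bound")
```

Actual limits depend heavily on batch size, sequence length or image resolution, and the framework's own memory overhead.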

Integration with AI Frameworks

The Tesla P100 works with the major AI frameworks through the CUDA platform. Optimized libraries such as cuDNN and NCCL accelerate neural network operations and multi-GPU communication, while NVLink provides the high-speed links between GPUs. Researchers can deploy Tesla P100 GPUs in clusters, in containerized environments, and in cloud infrastructure. Because Pascal is an older architecture (compute capability 6.0), confirm that the CUDA toolkit and framework versions you plan to use still support it.

High-Performance Computing Capabilities

In addition to AI acceleration, the Nvidia Tesla P100 Pascal excels in high-performance computing scenarios. Its architecture supports double-precision floating-point operations, which are essential for scientific simulations, engineering calculations, and data analysis. Researchers and engineers use Tesla P100 GPUs to simulate complex physical phenomena, optimize engineering designs, and perform large-scale computational tasks that would take significantly longer on conventional CPUs.

Scientific Simulations and Data Analysis

Scientific simulations such as molecular dynamics, finite element analysis, and quantum mechanics calculations benefit greatly from the Tesla P100's massive parallelism. The GPU’s thousands of CUDA cores allow simultaneous execution of multiple threads, enabling faster computation and more detailed simulations. Data-intensive fields, including astrophysics, climatology, and bioinformatics, utilize Tesla P100 GPUs to process and analyze large datasets efficiently, leading to faster insights and discoveries.

Engineering and Visualization Workloads

For engineering and visualization applications, the Tesla P100 supports real-time rendering, computational fluid dynamics, and finite element analysis. Its high bandwidth memory and NVLINK interconnect ensure that large models and complex simulations can be processed without performance degradation. This capability is essential for industries such as automotive, aerospace, and industrial design, where accurate and rapid simulations can reduce development time and improve product quality.

Scalability in Data Center Environments

The Tesla P100 Pascal series is designed for scalability within data center environments. NVLINK allows multiple GPUs to work together seamlessly, forming powerful computing clusters capable of tackling extremely demanding workloads. Combined with the SXM2 form factor and thermal management, Tesla P100 GPUs can operate continuously at peak performance, providing reliable and scalable solutions for enterprises and research institutions seeking to expand their computational capabilities.

Memory Bandwidth and Data Throughput

The Tesla P100's HBM2 memory provides high memory bandwidth, which is crucial for data-intensive tasks. With a 4096-bit interface and 16 GB of capacity, the GPU keeps its cores supplied with data and spends less time stalled on memory. This memory architecture is particularly beneficial for deep learning, high-resolution simulations, and big data analytics, where fast data access translates directly into shorter computation time.

Impact on AI and HPC Workloads

High memory bandwidth lets the Tesla P100 process large batches of data at once, which benefits both AI and HPC workloads. For AI, each training iteration completes sooner, which shortens the overall time to a trained model. For HPC, bandwidth-bound simulations and analytical computations run at scale with fewer memory bottlenecks. Together, high-bandwidth memory, NVLink, and the CUDA cores make the Tesla P100 a capable accelerator for enterprise compute workloads.

Optimized Data Handling and Transfer

The combination of HBM2 memory and NVLink streamlines data movement within and between GPUs. In multi-GPU servers, NVLink enables direct GPU-to-GPU communication at high bandwidth, reducing reliance on slower PCIe connections. NVLink connects GPUs within a server; traffic between servers in a multi-node cluster still travels over the cluster network. This data flow benefits distributed deep learning training, HPC clusters, and real-time analytics by cutting transfer delays and keeping the GPUs busy.

Performance in Large Datasets and Simulations

The Tesla P100 Pascal GPU excels in handling large-scale datasets, simulations, and computational tasks. Its memory bandwidth ensures that vast amounts of data can be accessed and processed quickly, which is crucial for simulations involving billions of parameters, high-resolution images, or complex scientific computations. This performance capability allows enterprises and research institutions to tackle problems previously limited by hardware constraints, facilitating breakthroughs in AI, HPC, and scientific research.

Enterprise Deployment and Reliability

The Tesla P100 series is designed for enterprise and data center deployment, where reliability, stability, and longevity matter for continuous, heavy workloads. The GPU supports ECC error correction on its HBM2 memory and is built for sustained operation in servers. These features reduce the risk of data corruption and unplanned interruption in critical workflows, although no hardware can eliminate that risk entirely.

Compatibility and Integration

This Tesla P100 is an SXM2 module, so it requires a server with SXM2 sockets and will not fit a standard PCIe expansion slot. Within a compatible server, the module uses NVLink for GPU-to-GPU traffic and typically PCIe for communication with the host CPU, and systems can be built with one or several GPUs. Before purchasing, confirm that the target server supports SXM2 modules along with the required power delivery and airflow. On a supported platform, administrators can scale capacity by populating additional sockets and adding nodes to the cluster.
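
Once a module is installed, one way to confirm what the system sees is to query `nvidia-smi`, which ships with the Nvidia driver. The sketch below wraps that query and parses its CSV output. The sample line used to exercise the parser is a hypothetical illustration, not captured output, and the helper names (`parse_gpu_csv`, `query_gpus`) are made up for this example.

```python
import subprocess

# Query three fields per GPU as headerless CSV without units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,power.limit",
    "--format=csv,noheader,nounits",
]


def parse_gpu_csv(text: str) -> list:
    """Parse 'name, memory MiB, power limit W' lines into dictionaries."""
    gpus = []
    for line in text.strip().splitlines():
        name, mem_mib, power_w = [field.strip() for field in line.split(",")]
        gpus.append(
            {
                "name": name,
                "memory_mib": int(mem_mib),
                # Note: fields reported as "[N/A]" would need extra handling here.
                "power_limit_w": float(power_w),
            }
        )
    return gpus


def query_gpus() -> list:
    """Run nvidia-smi and return one dictionary per detected GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


# HYPOTHETICAL sample line, used only to show the parser's output shape.
SAMPLE = "Tesla P100-SXM2-16GB, 16280, 300.00\n"
sample_gpus = parse_gpu_csv(SAMPLE)
print(sample_gpus)
```

On a machine with the driver installed, calling `query_gpus()` returns the same structure for the GPUs actually present.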

Thermal and Power Efficiency

Designed with data center environments in mind, the Tesla P100 balances performance with thermal and power efficiency. The SXM2 module is passively cooled and depends on server airflow to run continuously under heavy workloads without overheating. The Pascal architecture and HBM2 memory improve performance per watt, but the module can still draw up to 300 W, so power and cooling capacity should be budgeted for each GPU.
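
Efficiency can be put in numbers using the figures listed on this page. The sketch below computes peak performance per watt from the 10.6 and 5.3 TeraFLOPS ratings and the 300 W maximum, and estimates the energy one GPU would use in a year if it ran at maximum power around the clock. Continuous full-power operation is a worst-case assumption, and cooling overhead is not included.

```python
PEAK_FP32_TFLOPS = 10.6   # from this page
PEAK_FP64_TFLOPS = 5.3    # from this page
MAX_POWER_W = 300         # from this page
HOURS_PER_YEAR = 8760     # 365 days x 24 hours


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Peak throughput per watt, in GFLOPS/W."""
    return tflops * 1000.0 / watts


def annual_energy_kwh(watts: float, hours: int = HOURS_PER_YEAR) -> float:
    """Energy used in a year at constant power draw, in kWh."""
    return watts * hours / 1000.0


fp32_eff = gflops_per_watt(PEAK_FP32_TFLOPS, MAX_POWER_W)
fp64_eff = gflops_per_watt(PEAK_FP64_TFLOPS, MAX_POWER_W)
yearly_kwh = annual_energy_kwh(MAX_POWER_W)

print(f"FP32: {fp32_eff:.1f} GFLOPS/W, FP64: {fp64_eff:.1f} GFLOPS/W")
print(f"Worst-case energy per GPU per year: {yearly_kwh:.0f} kWh")
```

Multiplying the yearly figure by the local electricity rate and the number of GPUs gives a first estimate of operating cost.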

Future-Proofing and Scalability

The Tesla P100 remains a practical option for enterprises and research institutions planning to scale their GPU infrastructure. NVLink, high-bandwidth HBM2 memory, and the Pascal architecture's FP64 performance keep it useful for many AI and HPC workloads. It is an older architecture, however, so check software support and performance requirements against newer GPU generations before committing to a large deployment.