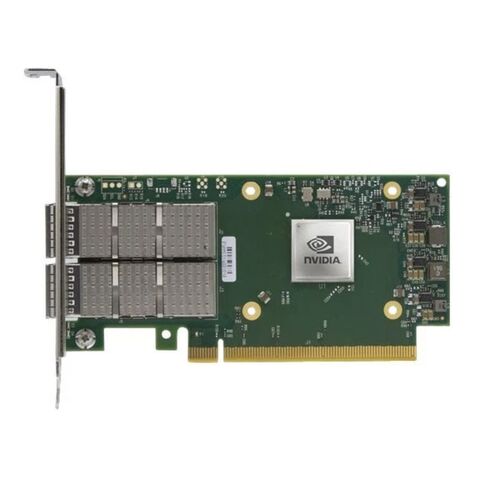

900-9X6AF-0056-MT1 Nvidia ConnectX-6 VPI 100GbE Dual-Port PCIe Adapter Card

Product Overview of Nvidia ConnectX-6 VPI 100GbE Adapter

The Nvidia 900-9X6AF-0056-MT1 ConnectX-6 VPI Adapter Card is a high-performance dual-port network interface designed for modern data centers. Supporting HDR100, EDR InfiniBand, and 100Gb Ethernet, this PCI Express x16 adapter delivers extreme throughput, ultra-low latency, and intelligent acceleration for compute, storage, and virtualized environments.

General Information

- Manufacturer: Nvidia

- Manufacturer Part Number: 900-9X6AF-0056-MT1

- Device Type: Network Adapter Card

Core Connectivity & Performance

Next-Generation Bandwidth

- Up to 100Gb/s connectivity per port

- Maximum aggregate bandwidth of 200Gb/s

- Supports both HDR100 and EDR InfiniBand as well as 100GbE

Ultra-Low Latency Messaging

- Handles up to 215 million messages per second

- Latency below 0.6 microseconds

- Best-in-class packet pacing with sub-nanosecond accuracy

Advanced Hardware Capabilities

Security & Encryption

- Hardware-based XTS-AES block-level encryption

- FIPS-capable for compliance-driven environments

- Secure data protection without impacting performance

Storage & Offload Technologies

- Advanced storage acceleration features

- Block-level encryption and checksum offloads

- Optimized for high-speed data access and integrity

Interface & Compatibility

PCI Express Support

- Compatible with PCIe Gen 3.0 and PCIe Gen 4.0

- x16 interface for maximum throughput

Flexible SerDes Architecture

- Supports 50G SerDes (PAM4)

- Supports 25G SerDes (NRZ)

- Enables flexible port configurations across networks
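
The two SerDes rates above explain how one port can reach 100Gb/s in different link modes. The sketch below works through the lane arithmetic. The lane counts are assumptions based on the common definitions of these link types (HDR100 as 2 lanes of 50G PAM4, EDR as 4 lanes of 25G NRZ); they are not stated in this listing, and `port_speed_gbps` is an illustrative helper name.

```python
# Lane arithmetic for a 100 Gb/s port, using the two SerDes rates listed above.
# Assumption: HDR100 = 2 x 50G PAM4 lanes, EDR = 4 x 25G NRZ lanes.


def port_speed_gbps(lanes: int, lane_rate_gbps: int) -> int:
    """Nominal port speed: number of lanes times the per-lane rate."""
    return lanes * lane_rate_gbps


link_modes = {
    "HDR100 (PAM4)": (2, 50),
    "EDR (NRZ)": (4, 25),
}

for name, (lanes, rate) in link_modes.items():
    print(f"{name}: {lanes} x {rate}G = {port_speed_gbps(lanes, rate)} Gb/s")
```

Both modes arrive at the same nominal 100Gb/s; they differ only in how many lanes carry the signal and at what per-lane rate.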

Environmental & Industry Compliance

- RoHS compliant

- ODCC compatible

Key Benefits

High-Efficiency Data Center Networking

- Industry-leading throughput with low CPU utilization

- Exceptional message rate for demanding workloads

- Reduced operational costs and infrastructure complexity

Optimized for Virtualization & Cloud

- Outstanding performance in virtualized networks

- Ideal for NFV and service chaining

- Improved I/O consolidation efficiency

Smart & Scalable Architecture

- Supports x86, Power, ARM, GPU, and FPGA-based platforms

- Mellanox host chaining technology for cost-effective rack design

- Programmable data path for emerging network flows

Ideal Use Cases

- High-performance computing (HPC)

- Enterprise data centers

- Cloud and hyperscale environments

- Advanced storage and AI workloads

The ConnectX-6 Series: Defining High-Performance Data Center Networking

NVIDIA's ConnectX-6 series is a family of intelligent adapters engineered for the most demanding workloads in modern data centers, high-performance computing (HPC), and artificial intelligence (AI) infrastructure. The NVIDIA 900-9X6AF-0056-MT1 ConnectX-6 VPI adapter card is the dual-port 100Gb/s member of this family, combining high bandwidth, intelligent offloads, and protocol flexibility. Cards in this category are designed not merely as connectivity solutions but as networking engines that reduce host CPU overhead, lower latency, and accelerate data processing from the network edge to the application. Built on NVIDIA's expertise in InfiniBand and Ethernet, the ConnectX-6 series suits efficient, scalable systems where data movement is as crucial as compute power.

Unpacking the Model: 900-9X6AF-0056-MT1 Specifications Decoded

Understanding the product nomenclature reveals the card's core capabilities. Part number 900-9X6AF-0056-MT1 identifies one specific configuration, and each term in its full product description maps to a capability:

- "ConnectX-6" denotes the silicon generation.

- "VPI" (Virtual Protocol Interconnect) confirms dual-protocol support for both InfiniBand and Ethernet.

- "HDR100/EDR" indicates InfiniBand operation at HDR100 (100 Gb/s) and EDR (100 Gb/s).

- "100GbE" defines its maximum Ethernet speed.

- "Dual-Port QSFP56" specifies two ports using the QSFP56 form factor, each capable of 100Gb/s.

- "PCIe 3.0/4.0 x16" describes the host interface, which works in both PCIe 3.0 and PCIe 4.0 slots.

This card is engineered for system builders and IT architects who need two 100Gb/s ports and protocol flexibility within a single slot.

Core Architectural Innovations of the ConnectX-6 Adapter

The Power of Integrated ConnectX-6 Silicon

At the heart of the ConnectX-6 adapter lies a highly integrated System-on-Chip (SoC). While not a BlueField Data Processing Unit (DPU) with ARM cores, the ConnectX-6 SoC incorporates sophisticated, hardware-based accelerators. This architecture is designed to perform complex networking, security, and storage tasks in silicon, freeing the server's central processors for core application workloads. This offload-centric design is fundamental to achieving the low latency and high message rates the card is renowned for.

Hardware Offload Engines: The Key to CPU Efficiency

The adapter features a comprehensive suite of hardware offload engines, each dedicated to a specific function:

Transport Offloads (RDMA over Converged Ethernet - RoCE)

Implements RoCEv2 in hardware, enabling remote direct memory access (RDMA) over standard Ethernet networks. Data is transferred directly between the memories of two hosts, bypassing the operating system kernel and TCP/IP stack, which drastically reduces latency and CPU utilization for clustered applications.

GPUDirect Technologies

A suite of technologies that enables a direct data path between GPU memory and network hardware. GPUDirect RDMA allows GPUs in different systems to exchange data directly through the adapter, without staging it in host memory, which is essential for multi-node AI training. GPUDirect Storage (GDS) enables a direct path for data to move between NVMe storage and GPU memory, accelerating data pipelines for AI and analytics.

NVMe over Fabrics (NVMe-oF) Offload

Accelerates access to remote flash storage by offloading the NVMe-oF protocol, transforming networked storage into what appears as a local NVMe device to the application. This is critical for building high-performance, disaggregated storage pools.

Advanced Security and Virtualization Offloads

Includes IPsec and TLS inline cryptography acceleration, hardware-based Root of Trust, and SR-IOV (Single Root I/O Virtualization) with support for hundreds of virtual functions. These offloads secure data in motion and provide near-bare-metal performance for virtual machines and containers.
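
On Linux hosts, the kernel reports SR-IOV state through the standard sysfs attributes `sriov_totalvfs` and `sriov_numvfs`. The sketch below is a minimal way to read them, assuming a Linux host. The interface name `ens1f0` and the helper `sriov_status` are hypothetical, and the values reported depend on firmware and driver configuration rather than on anything stated in this listing.

```python
from pathlib import Path
from typing import Dict, Optional


def sriov_status(iface: str, sysfs_root: str = "/sys/class/net") -> Dict[str, Optional[int]]:
    """Read SR-IOV counters for a network interface from sysfs.

    Returns None for a counter when the attribute file is absent,
    for example when the interface does not exist or lacks SR-IOV support.
    """
    device_dir = Path(sysfs_root) / iface / "device"

    def read_int(name: str) -> Optional[int]:
        path = device_dir / name
        return int(path.read_text().strip()) if path.exists() else None

    return {
        "total_vfs": read_int("sriov_totalvfs"),   # maximum VFs the device offers
        "enabled_vfs": read_int("sriov_numvfs"),   # VFs currently enabled
    }


# "ens1f0" is a hypothetical interface name; substitute the real one.
print(sriov_status("ens1f0"))
```

Reading these files needs no special privileges. Enabling virtual functions means writing to `sriov_numvfs` as root, which this sketch deliberately does not do.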

Dual-Protocol Flexibility: VPI (InfiniBand and Ethernet)

The Virtual Protocol Interconnect (VPI) technology is a hallmark feature, allowing the adapter to be configured at the port level to run either InfiniBand or Ethernet. This provides unparalleled investment protection and deployment flexibility.
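
Per-port protocol selection is usually done with the `mlxconfig` tool from NVIDIA Firmware Tools (MFT), using the `LINK_TYPE_P1` and `LINK_TYPE_P2` parameters (1 for InfiniBand, 2 for Ethernet). The sketch below only builds the command line and does not run it. The device path is a hypothetical example, `link_type_command` is an illustrative helper, and the exact procedure should be checked against the MFT documentation for the installed firmware.

```python
from typing import List

# mlxconfig LINK_TYPE values: 1 = InfiniBand, 2 = Ethernet.
LINK_TYPES = {"ib": 1, "eth": 2}


def link_type_command(device: str, port: int, protocol: str) -> List[str]:
    """Build (but do not execute) an mlxconfig command that sets a port's protocol."""
    if port not in (1, 2):
        raise ValueError("a dual-port card has ports 1 and 2")
    try:
        value = LINK_TYPES[protocol.lower()]
    except KeyError:
        raise ValueError("protocol must be 'ib' or 'eth'") from None
    return ["mlxconfig", "-d", device, "set", f"LINK_TYPE_P{port}={value}"]


# Hypothetical device path; real paths are listed by `mst status`.
cmd = link_type_command("/dev/mst/mt4123_pciconf0", 2, "eth")
print(" ".join(cmd))
```

A changed link type generally takes effect only after a firmware reset or a reboot, so plan the change for a maintenance window.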

InfiniBand Mode: Ultra-Low Latency for HPC

When configured for InfiniBand, the card delivers industry-leading latency and advanced in-network computing capabilities via NVIDIA's Scalable Hierarchical Aggregation and Reduction Protocol (SHARP). This is the preferred fabric for tightly coupled supercomputing clusters, large-scale AI training farms, and financial trading systems where microseconds matter.

Support for HDR100 and EDR Speeds

The card operates at InfiniBand EDR (100 Gb/s) and HDR100 (100 Gb/s) speeds. HDR100 provides a cost-effective migration path, allowing use of existing EDR cabling infrastructure while interoperating with full HDR (200 Gb/s) systems. This ensures a smooth, staged upgrade path for data centers.

Ethernet Mode: High-Performance, Standards-Based Networking

In Ethernet mode, the card functions as a high-performance 100 Gigabit Ethernet NIC with the same hardware offloads, including RoCE. This mode is ideal for cloud data centers, enterprise networks, and converged environments that require a standard TCP/IP Ethernet fabric but still want the performance benefits of RDMA and hardware offloads.

Performance and Application Landscape

Ideal Use Cases and Workloads

The Nvidia 900-9X6AF-0056-MT1 ConnectX-6 VPI adapter is specifically targeted at workloads that are sensitive to latency, bandwidth, or host CPU consumption.

Artificial Intelligence and Deep Learning Training

In multi-server, multi-GPU AI training clusters, the card's GPUDirect RDMA and low latency are indispensable. They enable efficient model parallelism and data parallelism by allowing GPUs across the network to exchange gradient updates at extremely high speeds, dramatically reducing training time for large models.

High-Performance Computing (HPC) and Scientific Simulation

MPI (Message Passing Interface) applications, common in computational fluid dynamics, weather modeling, and genomic research, benefit tremendously from the ultra-low latency and high message rate of InfiniBand. The hardware offload of MPI collective operations enhances scalability.

Hyperconverged Infrastructure (HCI) and Software-Defined Storage

The Nvidia 900-9X6AF-0056-MT1's combination of high bandwidth, NVMe-oF offload, and efficient RDMA makes it well suited to storage traffic in vSAN, Ceph, or other SDS platforms. It maximizes storage performance and reduces compute overhead on storage servers.

Cloud and Telco Data Centers

With its robust virtualization features (SR-IOV), security offloads (IPsec/TLS), and support for network overlays (VXLAN, GENEVE), the card is ideal for delivering secure, high-performance networking to tenants in cloud environments and for 5G User Plane Function (UPF) acceleration in telco.

Compatibility, Deployment, and Ecosystem

Host Interface and Form Factor Considerations

The card uses a full-height, full-length (FHFL) form factor with a PCIe 3.0/4.0 x16 edge connector. It is backward compatible with PCIe 3.0 systems, ensuring broad server platform support, and it can use the doubled per-lane rate of PCIe 4.0 in newer platforms, which avoids a host bus bottleneck when both 100Gb/s ports run at line rate.
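
The host bus bottleneck mentioned above can be checked with simple arithmetic. This sketch assumes the standard PCIe signaling rates (8 GT/s per lane for PCIe 3.0, 16 GT/s for PCIe 4.0) and 128b/130b line encoding. It ignores packet and protocol overhead, so real usable throughput is somewhat lower than these figures.

```python
# Raw PCIe x16 bandwidth per direction versus the card's two 100 Gb/s ports.
# Assumptions: 8 GT/s (Gen3) and 16 GT/s (Gen4) per lane, 128b/130b encoding,
# no allowance for TLP/DLLP protocol overhead.


def pcie_raw_gbps(gt_per_s: float, lanes: int = 16) -> float:
    """Raw link bandwidth in Gb/s after 128b/130b encoding."""
    return gt_per_s * lanes * 128 / 130


PORTS = 2
PORT_GBPS = 100
needed_gbps = PORTS * PORT_GBPS  # both ports at line rate

for generation, rate in (("PCIe 3.0", 8.0), ("PCIe 4.0", 16.0)):
    available = pcie_raw_gbps(rate)
    verdict = "enough" if available >= needed_gbps else "not enough"
    print(f"{generation} x16: about {available:.0f} Gb/s, {verdict} for {needed_gbps} Gb/s")
```

Under these assumptions a PCIe 3.0 x16 slot can feed one port at full rate but not both at once, while a PCIe 4.0 x16 slot leaves headroom for both.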

Cabling and Transceiver Options

The dual QSFP56 cages support a wide range of industry-standard optical and copper cabling solutions for both InfiniBand and Ethernet:

- InfiniBand: QSFP56 active optical cables (AOCs), QSFP56 to 4x SFP56 splitter cables, or QSFP56 direct attach copper (DAC) cables for short reaches.

- Ethernet: QSFP28 (100GBASE-SR4, LR4, etc.) and QSFP56 (100GBASE-DR, FR1) optical transceivers, as well as appropriate DAC cables. Note: QSFP56 ports are backward compatible with QSFP28 optics and cables for 100GbE.

Software and Management Integration

On the host side, the adapter is supported by the NVIDIA MLNX_EN and OFED driver suites. On the network side, it connects to switches running NVIDIA MLNX-OS or NVIDIA Cumulus Linux. It also integrates with popular orchestration platforms such as Kubernetes (via the NVIDIA Network Operator) and with management tools such as NVIDIA NetQ for lifecycle management.