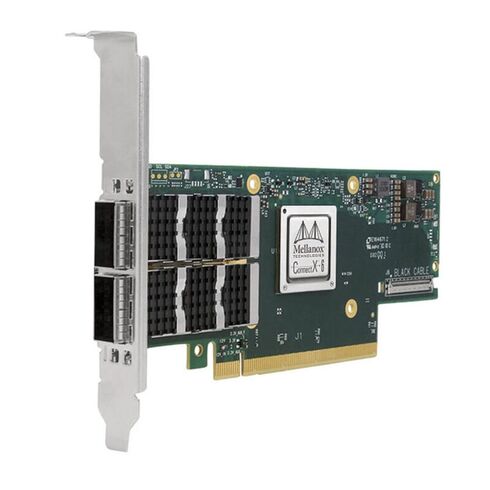

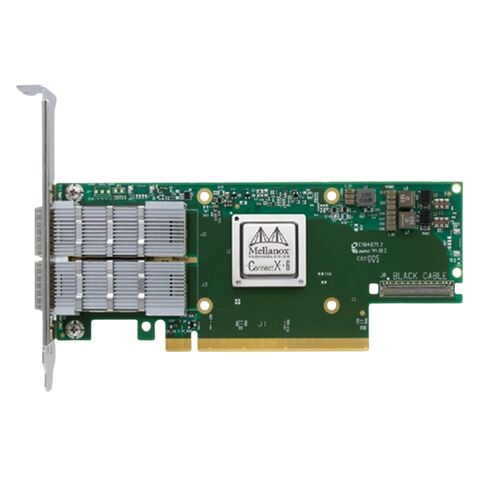

900-9X6AF-0058-ST1 Nvidia ConnectX-6 Ethernet 2-Port QSFP56 PCIe Adapter Card


High-Performance Network Adapter Overview

The NVIDIA 900-9X6AF-0058-ST1 ConnectX-6 200GbE Network Adapter Card is built for high-speed data center, cloud, and enterprise networking. With dual QSFP56 ports and a PCIe Gen3/Gen4 x16 host interface, it delivers up to 200 Gb/s per port with low latency and supports both Ethernet and InfiniBand workloads.

General Product Details

- Brand: NVIDIA

- Model Number: 900-9X6AF-0058-ST1

- Device Category: PCIe Network Interface Card (NIC)

Physical Characteristics

- Form Factor: Adapter Card

- Dimensions: 6.6 inches x 2.71 inches

- Port Type: Dual QSFP56 for InfiniBand and Ethernet

- Cable Support: Copper and Optical media

Advanced Protocol Compatibility

- SDR, DDR, QDR, FDR10, FDR

- EDR (25 Gb/s per lane)

- HDR100 (2 lanes x 50 Gb/s)

- HDR (50 Gb/s per lane)
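The InfiniBand rates above follow a simple rule: the nominal port rate is the lane count multiplied by the per-lane rate. A minimal Python sketch, using only the EDR and HDR per-lane figures listed here and assuming the standard four-lane (4x) port width unless a width is given:

```python
# Nominal per-lane data rates in Gb/s, taken from the protocol list above.
PER_LANE_GBPS = {"EDR": 25, "HDR": 50}

# Assumption: the standard InfiniBand port width is 4x (four lanes);
# HDR100 is modeled as two HDR lanes.
DEFAULT_LANES = 4


def port_rate_gbps(generation: str, lanes: int = DEFAULT_LANES) -> int:
    """Return the nominal port rate: lane count multiplied by per-lane rate."""
    return PER_LANE_GBPS[generation] * lanes


if __name__ == "__main__":
    print("EDR (4x):", port_rate_gbps("EDR"), "Gb/s")           # 100 Gb/s
    print("HDR100 (2x HDR):", port_rate_gbps("HDR", 2), "Gb/s")  # 100 Gb/s
    print("HDR (4x):", port_rate_gbps("HDR"), "Gb/s")            # 200 Gb/s
```

HDR100 thus reaches the same 100 Gb/s as EDR with half the lanes, which is what allows an HDR switch port to be split into two HDR100 connections.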

Ethernet Standards Supported

- 200GbE: CR4, KR4, SR4

- 100GbE: CR4, CR2, KR4, SR4

- 50GbE: R2, R4

- 40GbE: CR4, KR4, SR4, LR4, ER4, R2

- 25GbE: 25GBASE-R

- 20GbE: 20GBASE-KR2

- 10GbE: LR, ER, CX4, CR, KR, SR

- 1GbE: SGMII, 1000BASE-CX, 1000BASE-KX

Data Transfer Rates

Network Throughput

- InfiniBand Speeds: SDR to HDR

- Ethernet Bandwidth: 1Gb/s to 200Gb/s

PCI Express Interface

- PCIe Gen3 & Gen4 compatibility

- SerDes speeds: 8.0 GT/s and 16.0 GT/s

- 16 PCIe lanes with backward support for PCIe 2.0 and 1.1
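On Linux, whether the card actually trained at Gen4 x16 (rather than falling back to Gen3 or a narrower link) can be read from the standard PCI sysfs attributes `current_link_speed` and `current_link_width`. A minimal Python sketch, assuming a Linux host; the PCI address `0000:3b:00.0` is a hypothetical placeholder, and a fake sysfs tree is built so the example runs without the card:

```python
import re
import tempfile
from pathlib import Path
from typing import Tuple


def read_pcie_link(pci_addr: str, sysfs_root: str = "/sys") -> Tuple[float, int]:
    """Return (link speed in GT/s, link width in lanes) for a PCI device."""
    dev = Path(sysfs_root) / "bus" / "pci" / "devices" / pci_addr
    speed_text = (dev / "current_link_speed").read_text()
    width_text = (dev / "current_link_width").read_text()
    # Newer kernels report e.g. "16.0 GT/s PCIe"; older ones report "16 GT/s".
    match = re.search(r"(\d+(?:\.\d+)?)\s*GT/s", speed_text)
    if match is None:
        raise ValueError(f"unrecognised link speed: {speed_text!r}")
    return float(match.group(1)), int(width_text.strip())


def is_gen4_x16(pci_addr: str, sysfs_root: str = "/sys") -> bool:
    """True if the device trained at 16 GT/s (Gen4) or faster with 16 lanes."""
    speed, width = read_pcie_link(pci_addr, sysfs_root)
    return speed >= 16.0 and width >= 16


def make_fake_device(root: str, pci_addr: str, speed: str, width: str) -> None:
    """Build a minimal fake sysfs tree so the sketch can run without hardware."""
    dev = Path(root) / "bus" / "pci" / "devices" / pci_addr
    dev.mkdir(parents=True)
    (dev / "current_link_speed").write_text(speed + "\n")
    (dev / "current_link_width").write_text(width + "\n")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        # Hypothetical PCI address; on a real host it can be found with `lspci`.
        make_fake_device(root, "0000:3b:00.0", "16.0 GT/s PCIe", "16")
        print(read_pcie_link("0000:3b:00.0", root))  # (16.0, 16)
        print(is_gen4_x16("0000:3b:00.0", root))     # True
```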

Power Consumption and Cooling

Electrical Specifications

- Auxiliary Voltage: 3.3V

- Maximum Current: 100 mA

- Typical Power Usage: 23.6W with passive cables

- QSFP56 Port Power: Up to 5W

Thermal and Airflow Requirements

- Passive Cables, heatsink-to-port airflow: 350 LFM at 55°C ambient

- Passive Cables, port-to-heatsink airflow: 250 LFM at 35°C ambient

- NVIDIA Active 4.7W Cables, heatsink-to-port airflow: 400 LFM at 55°C ambient

- NVIDIA Active 4.7W Cables, port-to-heatsink airflow: 300 LFM at 35°C ambient
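The airflow figures above can be encoded as a lookup table for checking a chassis before deployment. A minimal Python sketch; it assumes the active-cable figures follow the same heatsink-to-port / port-to-heatsink split as the passive-cable figures, and the cable and direction keys are hypothetical labels:

```python
# Minimum airflow figures from the thermal requirements above.
# Key: (cable type, airflow direction) -> (required LFM, ambient °C the figure applies to).
# Assumption: the active-cable rows use the same direction split as the passive-cable rows.
AIRFLOW_REQUIREMENTS = {
    ("passive", "heatsink_to_port"): (350, 55),
    ("passive", "port_to_heatsink"): (250, 35),
    ("active_4.7w", "heatsink_to_port"): (400, 55),
    ("active_4.7w", "port_to_heatsink"): (300, 35),
}


def airflow_ok(cable: str, direction: str, chassis_lfm: float, ambient_c: float) -> bool:
    """Return True if chassis airflow meets the listed minimum at this ambient."""
    required_lfm, rated_ambient_c = AIRFLOW_REQUIREMENTS[(cable, direction)]
    if ambient_c > rated_ambient_c:
        # The figure is only specified up to this ambient; treat hotter as unverified.
        return False
    return chassis_lfm >= required_lfm


if __name__ == "__main__":
    print(airflow_ok("passive", "heatsink_to_port", 400, 50))      # True
    print(airflow_ok("active_4.7w", "heatsink_to_port", 350, 50))  # False
```

Treating an ambient above the rated figure as a failure is a deliberately conservative choice: the listed airflow says nothing about hotter conditions.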

Environmental Operating Conditions

Temperature Range

- Operating: 0°C to 55°C

- Storage: -40°C to 70°C

Humidity Tolerance

- Operational: 10% to 85% relative humidity

- Non-Operational: 10% to 90% relative humidity
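The environmental limits above can likewise be written as a simple pre-deployment check. A minimal Python sketch; pairing the storage temperature range with the non-operational humidity range is an assumption:

```python
# Environmental limits from the specification above, as inclusive (low, high) ranges.
OPERATING_TEMP_C = (0.0, 55.0)
STORAGE_TEMP_C = (-40.0, 70.0)
OPERATING_RH_PCT = (10.0, 85.0)
NON_OPERATING_RH_PCT = (10.0, 90.0)


def within(value: float, bounds: tuple) -> bool:
    """True if value lies inside the inclusive range."""
    low, high = bounds
    return low <= value <= high


def conditions_ok(temp_c: float, rh_pct: float, operating: bool = True) -> bool:
    """Check temperature and relative humidity against the listed limits.

    Assumption: storage temperature is paired with non-operational humidity.
    """
    if operating:
        return within(temp_c, OPERATING_TEMP_C) and within(rh_pct, OPERATING_RH_PCT)
    return within(temp_c, STORAGE_TEMP_C) and within(rh_pct, NON_OPERATING_RH_PCT)


if __name__ == "__main__":
    print(conditions_ok(25, 50))                    # True
    print(conditions_ok(60, 50))                    # False: above operating maximum
    print(conditions_ok(60, 88, operating=False))   # True: within storage limits
```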

Architectural Innovation: Inside the ConnectX-6 Dual-Port 200GbE Adapter

The ConnectX-6 ASIC at the heart of the NVIDIA 900-9X6AF-0058-ST1 is designed to handle the throughput and packet rates of modern data centers. Its architecture combines high-speed connectivity, hardware offload engines, and programmability in a single device.

Core Silicon and Interface Technology

The ConnectX-6 ASIC is designed for high performance per watt. Each of its two ports accepts QSFP56 (Quad Small Form-factor Pluggable 56) cables and modules, which carry 200GbE as four 50 Gb/s lanes using 4-level Pulse Amplitude Modulation (PAM4) signaling. A single port can therefore reach 200 Gb/s over a four-lane copper cable (200GBASE-CR4) or parallel multimode fiber (200GBASE-SR4), doubling per-port bandwidth compared with the previous generation's 100 Gb/s QSFP28 ports. The card connects to the host through a PCI Express 4.0 x16 slot, which provides a theoretical bandwidth of about 31.5 GB/s (roughly 252 Gb/s) in each direction. That is enough to drive one port at its full 200 Gb/s line rate, but not both ports at once: combined traffic on the two ports is capped by the host link. The card also remains backward compatible with PCIe 3.0 systems, where an x16 link provides about half that bandwidth (roughly 126 Gb/s per direction), ensuring broad deployment flexibility across existing and new server platforms.
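The host-link and lane figures quoted in this paragraph can be checked from first principles: PCIe Gen3 and Gen4 use 128b/130b encoding, and PAM4 carries two bits per symbol. A minimal Python sketch; it computes raw per-direction link bandwidth only and ignores PCIe transaction- and link-layer overhead, so real throughput is somewhat lower:

```python
def pcie_gbps_per_direction(gt_per_s: float, lanes: int) -> float:
    """Raw PCIe bandwidth per direction in Gb/s for Gen3/Gen4 (128b/130b encoding).

    Ignores transaction- and link-layer protocol overhead, so real throughput is lower.
    """
    return gt_per_s * lanes * 128 / 130


def pam4_symbol_rate_gbd(lane_gbps: float) -> float:
    """PAM4 carries 2 bits per symbol, so the symbol rate is half the bit rate."""
    return lane_gbps / 2


if __name__ == "__main__":
    gen4 = pcie_gbps_per_direction(16.0, 16)
    gen3 = pcie_gbps_per_direction(8.0, 16)
    print(f"Gen4 x16: {gen4:.2f} Gb/s = {gen4 / 8:.2f} GB/s per direction")  # 252.06 Gb/s = 31.51 GB/s
    print(f"Gen3 x16: {gen3:.2f} Gb/s per direction")                         # 126.03 Gb/s
    print("Two 200GbE ports:", 2 * 200, "Gb/s")                               # 400 Gb/s
    # 200GBASE-R lanes signal at 53.125 Gb/s, including encoding and FEC overhead.
    print("PAM4 lane symbol rate:", pam4_symbol_rate_gbd(53.125), "GBd")      # 26.5625 GBd
```

On these numbers, one 200GbE port fits within a Gen4 x16 link, while two ports at full line rate (400 Gb/s) exceed it, and a Gen3 x16 link falls short of even one port's line rate.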

Transformative Workload Acceleration: Beyond Basic Connectivity

The true value of the ConnectX-6 family lies not in raw speed alone, but in its comprehensive suite of hardware acceleration engines that improve server and cluster performance.

Ultra-Low Latency and RDMA

For AI training and HPC simulations, microseconds matter. The adapter implements Remote Direct Memory Access (RDMA) in hardware, natively over InfiniBand and over Ethernet through RDMA over Converged Ethernet (RoCE). RDMA lets an application on one server read from or write to registered memory on another server directly, bypassing the kernel network stack and without involving the remote CPU in the transfer. The result is latency close to the limits of the wire, much lower CPU utilization, and performance that scales across the cluster. RDMA is also the foundation of NVIDIA GPUDirect RDMA, which creates a direct data path between GPU memory and the network.

GPUDirect Technologies: Fueling AI and Heterogeneous Computing

This adapter family is engineered to be an efficient data conduit for GPU-centric workloads. Key technologies include GPUDirect RDMA, which enables direct peer-to-peer communication between GPUs in different servers, and GPUDirect Storage (GDS), which creates a direct path for data to move between NVMe storage and GPU memory without staging it in a bounce buffer in host CPU memory. This end-to-end acceleration is critical for feeding data-hungry AI training and complex analytics models without bottlenecks.

NVMe over Fabrics (NVMe-oF) Acceleration

As storage disaggregates from compute, efficient network storage access becomes paramount. The ConnectX-6 adapter provides hardware offload for NVMe-oF target operations, processing NVMe commands and data transfers on the adapter, while initiators benefit from its RDMA transport. This lets networked NVMe storage approach the performance of locally attached drives and is foundational for building high-performance, scalable, software-defined storage pools.

Advanced Networking Features and Virtualization

Modern cloud and multi-tenant environments demand robust, secure, and isolated network infrastructure. The ConnectX-6 series delivers enterprise and cloud-grade capabilities on a single card.

Sophisticated Quality of Service (QoS) and Traffic Management

The NVIDIA 900-9X6AF-0058-ST1 supports traffic control mechanisms such as Priority Flow Control (PFC) and Explicit Congestion Notification (ECN). PFC pauses individual traffic classes to keep them lossless, while ECN signals congestion so that senders slow down before buffers overflow; together they support the lossless Ethernet fabrics commonly used for RoCE and storage traffic. Combined with advanced scheduling and buffer management, these mechanisms help keep performance predictable under mixed workloads.
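In RoCE fabrics, ECN marks are usually applied by switches using a RED-style profile, and the sending NIC then reduces its rate (as in the DCQCN scheme commonly used with RoCE). A minimal Python sketch of that marking curve; the threshold values are hypothetical examples, not recommended settings for this adapter or any particular switch:

```python
def ecn_mark_probability(queue_kb: float, k_min_kb: float, k_max_kb: float, p_max: float) -> float:
    """RED-style ECN marking probability for a given queue depth.

    No marks below k_min, a linear ramp up to p_max between k_min and k_max,
    and every packet marked once the queue reaches k_max.
    """
    if queue_kb <= k_min_kb:
        return 0.0
    if queue_kb >= k_max_kb:
        return 1.0
    return p_max * (queue_kb - k_min_kb) / (k_max_kb - k_min_kb)


if __name__ == "__main__":
    # Hypothetical thresholds: 100 KB, 400 KB, and a 20% maximum ramp probability.
    for depth in (50, 250, 500):
        print(depth, "KB ->", round(ecn_mark_probability(depth, 100, 400, 0.2), 3))
```

Marking early and probabilistically lets senders back off gradually, so PFC pauses remain a last resort rather than the primary congestion signal.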

SR-IOV and Hardware-Based Isolation

With support for Single Root I/O Virtualization (SR-IOV), a single physical adapter can be partitioned into many virtual functions (VFs), up to a maximum configured in the adapter firmware. Each VF can be assigned directly to a virtual machine or container, providing near-bare-metal network performance, stronger isolation, and better security than software-based virtual switches. This improves both workload density and network performance in virtualized and cloud-native environments.
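On Linux, VFs are typically created through the kernel's standard SR-IOV sysfs attributes, `sriov_totalvfs` and `sriov_numvfs`, under the network interface's PCI device directory. A minimal Python sketch, assuming a Linux host with root privileges and SR-IOV already enabled in the adapter firmware (for example via the `SRIOV_EN` and `NUM_OF_VFS` parameters of NVIDIA's `mlxconfig` tool); the interface name `ens1f0` is hypothetical, and a fake sysfs tree stands in for real hardware:

```python
import tempfile
from pathlib import Path


def set_num_vfs(iface: str, num_vfs: int, sysfs_root: str = "/sys") -> None:
    """Set the number of SR-IOV virtual functions for a network interface."""
    dev = Path(sysfs_root) / "class" / "net" / iface / "device"
    total = int((dev / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{iface} supports 0..{total} VFs, requested {num_vfs}")
    numvfs_file = dev / "sriov_numvfs"
    current = int(numvfs_file.read_text())
    if current == num_vfs:
        return
    if current != 0 and num_vfs != 0:
        # The kernel refuses to change a non-zero VF count directly; reset to 0 first.
        numvfs_file.write_text("0")
    numvfs_file.write_text(str(num_vfs))


def make_fake_iface(root: str, iface: str, total_vfs: int) -> Path:
    """Build a minimal fake sysfs tree so the sketch runs without hardware."""
    dev = Path(root) / "class" / "net" / iface / "device"
    dev.mkdir(parents=True)
    (dev / "sriov_totalvfs").write_text(f"{total_vfs}\n")
    (dev / "sriov_numvfs").write_text("0\n")
    return dev


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        dev = make_fake_iface(root, "ens1f0", 16)  # hypothetical interface name
        set_num_vfs("ens1f0", 8, root)
        set_num_vfs("ens1f0", 4, root)
        print((dev / "sriov_numvfs").read_text())  # 4
```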

Enhanced Security with Inline Cryptography

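To protect data without compromising performance, the ConnectX-6 family offers hardware cryptography offloads: crypto-enabled ConnectX-6 models provide block-level XTS-AES encryption for storage traffic, while ConnectX-6 Dx adds inline IPsec and TLS engines for data in motion. Performing encryption and decryption on the adapter at line rate offloads these computationally intensive tasks from the host CPU, making pervasive encryption feasible even in high-throughput data centers. Crypto support varies by ordering part number.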

Deployment Scenarios and Target Applications

The versatility of the ConnectX-6 200GbE adapter makes it well suited to a wide range of demanding applications.

Artificial Intelligence and Machine Learning Clusters

In multi-GPU, multi-server AI training clusters, network latency and bandwidth directly affect time-to-solution. The adapter's RDMA and GPUDirect capabilities minimize communication overhead between nodes, allowing researchers to train larger models on bigger datasets in less time. ConnectX-6 adapters are used in NVIDIA DGX A100 systems and are widely deployed in AI infrastructure.

High-Performance Computing (HPC) and Scientific Simulation

HPC applications in fields like computational fluid dynamics, genomics, and climate modeling rely on tight coupling between thousands of servers. The low latency and high message rate of ConnectX-6 enable efficient execution of Message Passing Interface (MPI) jobs, helping them scale to large node counts.

Hyperscale Cloud Data Centers

For public and private cloud builders, the card's combination of SR-IOV, high density (200G per port), and advanced offloads allows for the creation of high-performance, secure, and multi-tenant cloud instances. It improves server economics by handling network processing in silicon, freeing up CPU cores for revenue-generating tenant workloads.

Software-Defined Storage and All-Flash Arrays

Storage appliances leveraging NVMe-oF benefit immensely from the adapter's storage offloads. It enables building low-latency, high-throughput storage targets that can serve hundreds of clients simultaneously, making disaggregated storage a high-performance reality.

Ecosystem Integration and Manageability

The NVIDIA 900-9X6AF-0058-ST1 is designed to integrate into broader data center management frameworks.

NVIDIA BlueField DPU Synergy

The ConnectX-6 family is closely tied to NVIDIA's BlueField Data Processing Unit (DPU): BlueField-2 integrates a ConnectX-6 Dx network controller alongside Arm cores. This places the adapter within a broader vision of data-centric infrastructure, in which networking, security, and storage services are offloaded to and managed by the DPU to support a zero-trust data center environment.

Comprehensive Software

The adapter is supported by NVIDIA's MLNX_OFED (Mellanox OpenFabrics Enterprise Distribution) for Linux, WinOF-2 drivers for Windows Server, and drivers for VMware ESXi and other major hypervisors. For user-space packet processing it works with DPDK (Data Plane Development Kit), and for management it offers NVIDIA's firmware tools and out-of-band interfaces to the server's baseboard management controller (such as NC-SI), easing integration and automation.
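On Linux, ConnectX-6 adapters are handled by the `mlx5_core` kernel driver (included in the upstream kernel and also shipped with MLNX_OFED). A minimal Python sketch that checks whether an interface is a Mellanox/NVIDIA PCI device (vendor ID `0x15b3`) bound to that driver, using standard sysfs attributes; the interface name `ens1f0` is hypothetical, and a fake sysfs tree is built so the example runs without the card:

```python
import os
import tempfile
from typing import Tuple

MELLANOX_VENDOR_ID = "0x15b3"  # PCI vendor ID of Mellanox/NVIDIA networking devices


def nic_driver_info(iface: str, sysfs_root: str = "/sys") -> Tuple[str, str]:
    """Return (PCI vendor ID, bound kernel driver name) for a network interface."""
    device = os.path.join(sysfs_root, "class", "net", iface, "device")
    with open(os.path.join(device, "vendor")) as f:
        vendor = f.read().strip()
    # "driver" is a symlink into .../bus/pci/drivers/<driver name>.
    driver = os.path.basename(os.path.realpath(os.path.join(device, "driver")))
    return vendor, driver


def is_mlx5_connectx(iface: str, sysfs_root: str = "/sys") -> bool:
    """True if the interface is a Mellanox/NVIDIA device bound to mlx5_core."""
    vendor, driver = nic_driver_info(iface, sysfs_root)
    return vendor == MELLANOX_VENDOR_ID and driver == "mlx5_core"


def make_fake_nic(root: str, iface: str, vendor: str, driver: str) -> None:
    """Build a minimal fake sysfs tree so the sketch runs without hardware."""
    driver_dir = os.path.join(root, "bus", "pci", "drivers", driver)
    os.makedirs(driver_dir, exist_ok=True)
    device = os.path.join(root, "class", "net", iface, "device")
    os.makedirs(device)
    with open(os.path.join(device, "vendor"), "w") as f:
        f.write(vendor + "\n")
    os.symlink(driver_dir, os.path.join(device, "driver"))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        make_fake_nic(root, "ens1f0", "0x15b3", "mlx5_core")  # hypothetical interface
        print(nic_driver_info("ens1f0", root))  # ('0x15b3', 'mlx5_core')
        print(is_mlx5_connectx("ens1f0", root))  # True
```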