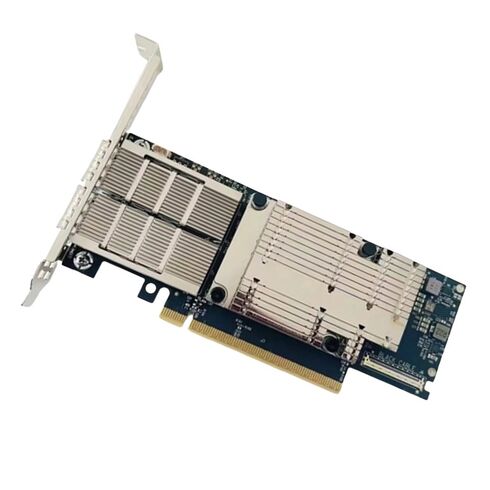

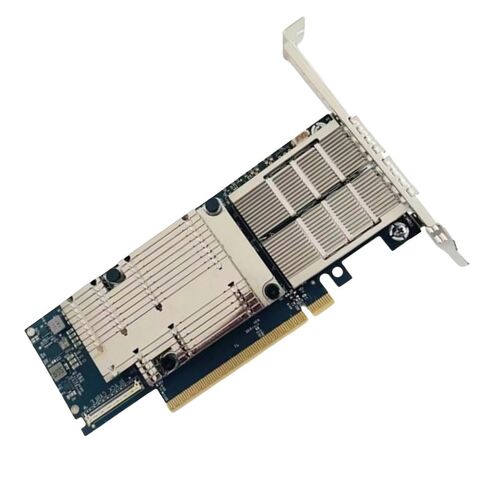

900-9X7AH-0078-DTZ Nvidia ConnectX-7 HHHL 2-Port QSFP112 Network Adapter

High-Performance NVIDIA ConnectX-7 Adapter Card

The Nvidia 900-9X7AH-0078-DTZ ConnectX-7 HHHL adapter card is engineered for next-generation data centers, high-performance computing clusters, and AI-driven workloads. Built around a PCI Express 5.0 x16 host interface, this dual-port QSFP112 network adapter delivers up to 200 Gb/s per port with low latency and supports both InfiniBand and Ethernet fabrics.

Manufacturer and Model Details

- Brand: NVIDIA

- Product Code: 900-9X7AH-0078-DTZ

- Form Factor: HHHL (Half Height, Half Length)

- Interface: PCIe 5.0 x16 expansion slot

Networking Ports

- Dual QSFP112 ports for InfiniBand and Ethernet

- Optimized for high-speed data center interconnects

- Designed for scalable cloud and hyperscale deployments

InfiniBand Speed

- NDR200 InfiniBand

- HDR and HDR100

- EDR, FDR, and SDR

- Backward compatibility across multiple generations

Ethernet Data Rates (Default Mode)

- 200 Gb/s Ethernet

- 100 Gb/s Ethernet

- 50 Gb/s Ethernet

- 25 Gb/s Ethernet

- 10 Gb/s Ethernet

InfiniBand Protocol Standards

- InfiniBand IBTA v1.5a compliance

- Auto-negotiation across multiple lane configurations

- NDR200 with 2 lanes at 100 Gb/s per lane

- HDR with 50 Gb/s per lane

- HDR100 with 2 lanes at 50 Gb/s per lane

- EDR at 25 Gb/s per lane

- FDR at 14.0625 Gb/s per lane

- 1x, 2x, and 4x SDR modes at 2.5 Gb/s per lane
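Each InfiniBand link rate above is the lane count multiplied by the per-lane rate. The short Python sketch below makes that arithmetic explicit. The lane counts for HDR, EDR, and FDR assume standard 4x links, the results are nominal figures that ignore line-encoding and FEC overhead, and `LINK_MODES` and `aggregate_rate` are illustrative names, not part of any NVIDIA tool.

```python
# Nominal per-lane rates (Gb/s) from the list above, paired with lane counts.
# Assumption: HDR, EDR, and FDR are shown as standard 4x links.
LINK_MODES = {
    "NDR200": (2, 100.0),
    "HDR": (4, 50.0),
    "HDR100": (2, 50.0),
    "EDR": (4, 25.0),
    "FDR": (4, 14.0625),
    "SDR 1x": (1, 2.5),
    "SDR 2x": (2, 2.5),
    "SDR 4x": (4, 2.5),
}


def aggregate_rate(mode: str) -> float:
    """Return the nominal link rate in Gb/s: lane count times per-lane rate."""
    lanes, per_lane = LINK_MODES[mode]
    return lanes * per_lane


if __name__ == "__main__":
    for mode in LINK_MODES:
        print(f"{mode:>7}: {aggregate_rate(mode):g} Gb/s")
```

NDR200 and HDR both reach a nominal 200 Gb/s, but NDR200 does it with half as many lanes by running each lane twice as fast.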

Ethernet Protocols

- 200GAUI-2 and 200GAUI-4 C2M

- 200GBASE-CR4

- 100GAUI-2 and 100GAUI-1 C2M

- 100GBASE-CR4, CR2, and CR1

- 50GAUI-2 and 50GAUI-1 C2M

- 50GBASE-CR and 50GBASE-R2

- 40GBASE-CR4 and 40GBASE-R2

- 25GBASE-R

- 10GBASE-R and 10GBASE-CX4

- 1000BASE-CX

- CAUI-4 C2M, 25GAUI C2M

- XLAUI C2M, XLPPI, and SFI interfaces
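Most of the Ethernet interface names above encode both the total rate and the lane count: the trailing digit in names such as 200GAUI-4 or 100GBASE-CR2 is the number of electrical lanes, and a missing digit (as in 50GBASE-CR or 25GBASE-R) means a single lane. The hypothetical `decode` helper below applies that rule of thumb to the listed names. It is a sketch, not a full IEEE 802.3 name parser, and older names such as CAUI-4, XLAUI, and SFI fall outside it.

```python
import re
from typing import Tuple

# Hypothetical pattern: <rate>[G](AUI[-]|BASE-<letters>)<optional lane count>.
# A rate without "G" (e.g. "1000BASE-CX") is interpreted as Mb/s.
_NAME = re.compile(r"^(\d+)(G?)(?:AUI-?|BASE-[A-Z]+)(\d*)$")


def decode(name: str) -> Tuple[float, int, float]:
    """Return (total Gb/s, lane count, Gb/s per lane) for an interface name."""
    match = _NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized interface name: {name}")
    value, giga, lanes_text = match.groups()
    total = float(value) if giga else float(value) / 1000  # Mb/s -> Gb/s
    lanes = int(lanes_text) if lanes_text else 1  # no digit: single lane
    return total, lanes, total / lanes


for name in ("200GAUI-4", "100GBASE-CR2", "50GBASE-CR", "10GBASE-CX4"):
    total, lanes, per_lane = decode(name)
    print(f"{name}: {total:g} Gb/s over {lanes} lane(s), {per_lane:g} Gb/s each")
```

Decoding 200GAUI-2 and 200GAUI-4 side by side shows the same 200 Gb/s carried either as two 100 Gb/s lanes or four 50 Gb/s lanes.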

Integrated Security Features

- Secure Boot functionality enabled

- Cryptographic acceleration disabled (crypto-disabled SKU)

- Firmware integrity validation

Power and Voltage Requirements

- Operating Voltage: 12 V and 3.3 V auxiliary

- Maximum Current (3.3 V auxiliary rail): 100 mA

- Typical Power Consumption: 24.9 W with passive cables

- Optimized for PCIe Gen 5.0 x16 slots
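The arithmetic below shows why the 100 mA maximum-current figure is best read as a limit on the 3.3 V auxiliary rail rather than the card's total draw. It uses only the voltage and power figures listed above; attributing all 24.9 W to the 12 V rail is a simplifying assumption for this estimate.

```python
# Figures from the specification above. Attributing all of the typical power
# to the 12 V rail is a simplifying assumption for this estimate.
TYPICAL_POWER_W = 24.9
MAIN_RAIL_V = 12.0
AUX_RAIL_V = 3.3
AUX_MAX_CURRENT_A = 0.100


def current_draw(power_w: float, voltage_v: float) -> float:
    """Current in amperes drawn at a given power and voltage (I = P / V)."""
    return power_w / voltage_v


main_current_a = current_draw(TYPICAL_POWER_W, MAIN_RAIL_V)  # roughly 2.1 A
aux_budget_w = AUX_RAIL_V * AUX_MAX_CURRENT_A                # roughly 0.33 W
print(f"12 V rail at typical power: {main_current_a:.1f} A")
print(f"3.3 V auxiliary budget at 100 mA: {aux_budget_w:.2f} W")
```

Roughly 2.1 A on the 12 V rail is more than twenty times 100 mA, so that figure cannot describe the full 24.9 W load.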

Temperature Tolerance

- Operational range: 0°C to 55°C

- Non-operational storage range: -40°C to 70°C

Humidity Specifications

- Operational humidity: 10% to 85% relative humidity

- Non-operational humidity: 10% to 90% relative humidity

High-Performance Data Center Network Adapter Category

The NVIDIA ConnectX-7 adapter card category represents a new generation of high-bandwidth, low-latency network adapters designed for hyperscale data centers, cloud service providers, AI factories, and high-performance computing environments. Products in this category, including the Nvidia 900-9X7AH-0078-DTZ ConnectX-7 HHHL Adapter Card, combine high data throughput, low and predictable latency, and hardware offloads that help Ethernet and InfiniBand infrastructures scale. These adapter cards are aimed at environments where massively parallel workloads, accelerated computing, and data-intensive applications must run together efficiently and reliably.

Evolution of High-Speed Network Interface Cards

Network interface cards have evolved rapidly as data center architectures have shifted from traditional client-server models to distributed, containerized, and GPU-accelerated workloads. The ConnectX-7 generation builds on earlier ConnectX architectures such as ConnectX-6, which offered up to 200 Gb/s per port over PCIe Gen 4, by adding NDR-generation InfiniBand signaling at 100 Gb/s per lane (NDR200 on this card) and a PCI Express 5.0 x16 host interface. This evolution allows network adapters in this category to deliver higher throughput per slot, lower CPU utilization through hardware offloads, and improved power efficiency compared to earlier generations.

Positioning of ConnectX-7 in Modern Network Fabrics

Within the broader category of data center network adapters, ConnectX-7 products such as the Nvidia 900-9X7AH-0078-DTZ are positioned at the top tier for performance-critical deployments. They serve as foundational components in scalable network fabrics that interconnect servers, GPU nodes, storage systems, and edge devices. Because a single adapter supports both Ethernet and InfiniBand, this category offers considerable flexibility for organizations that operate hybrid or converged network environments.

Dual-Port QSFP112 Connectivity Architecture

A defining characteristic of the Nvidia 900-9X7AH-0078-DTZ and other ConnectX-7 HHHL adapter cards in this category is the pair of QSFP112 ports. Each port runs at up to 200 Gb/s, supporting both 200GbE Ethernet and NDR200 InfiniBand. QSFP112 keeps the familiar QSFP footprint while supporting electrical lanes of up to 100 Gb/s each, which raises the bandwidth available per port and improves port density in high-performance switches and servers.

Advantages of QSFP112 in High-Density Deployments

QSFP112 interfaces are optimized for environments where rack space and port density are critical considerations. Adapter cards in this category allow data centers to achieve higher aggregate bandwidth without increasing the number of physical network interfaces. This results in simplified cabling, reduced airflow obstruction, and improved thermal efficiency. The dual-port configuration also supports link aggregation, redundancy, and multi-fabric connectivity, enhancing overall network resilience.

Ethernet and InfiniBand Protocols

The ability to support both Ethernet and InfiniBand protocols distinguishes this category from single-protocol network adapters. Ethernet connectivity enables seamless integration with existing IP-based networks, while InfiniBand support provides ultra-low latency and lossless communication for HPC and AI workloads. This dual-mode capability allows organizations to standardize on a single adapter platform across multiple use cases, reducing inventory complexity and operational overhead.

PCI Express 5.0 x16 Interface Design

The integration of a PCIe 5.0 x16 host interface is a core attribute of the ConnectX-7 adapter card category. PCIe Gen 5 doubles the per-lane signaling rate of PCIe Gen 4, from 16 GT/s to 32 GT/s. After 128b/130b encoding, an x16 link therefore carries roughly 63 GB/s (about 504 Gb/s) in each direction, or about 126 GB/s bidirectional. That exceeds the 400 Gb/s produced when both 200 Gb/s ports run at full rate, so the host interface is not expected to constrain the network links.
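The host-interface headroom can be checked with a quick calculation. This sketch assumes PCIe 5.0 signaling of 32 GT/s per lane with 128b/130b encoding and counts only line-encoding overhead; transaction-layer packet headers and flow-control traffic reduce usable throughput further, so treat the headroom figure as an upper bound. The function and constant names are illustrative.

```python
# Assumed PCIe 5.0 parameters: 32 GT/s per lane, 128b/130b line encoding.
GT_PER_LANE = 32.0
LANES = 16
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps_per_direction(gt_per_lane: float, lanes: int) -> float:
    """Link bandwidth in Gb/s per direction, after line encoding only."""
    return gt_per_lane * lanes * ENCODING_EFFICIENCY


host_gbps = pcie_gbps_per_direction(GT_PER_LANE, LANES)  # about 504 Gb/s
network_gbps = 2 * 200.0  # both QSFP112 ports at 200 Gb/s
print(f"PCIe 5.0 x16: {host_gbps:.0f} Gb/s ({host_gbps / 8:.0f} GB/s) per direction")
print(f"Dual-port network load: {network_gbps:.0f} Gb/s per direction")
print(f"Headroom before packet overhead: {host_gbps - network_gbps:.0f} Gb/s")
```

Calling `pcie_gbps_per_direction(16.0, 16)` models a PCIe 4.0 x16 slot and gives about 252 Gb/s per direction, less than the 400 Gb/s a dual-port card can generate, which is why a Gen 5 slot matters for running both ports at full rate.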

Benefits of PCIe Gen 5 for Data-Intensive Workloads

PCIe 5.0 support allows adapter cards in this category to efficiently handle massive data transfers between the network and system memory. This is particularly important for GPU-accelerated workloads, distributed storage systems, and real-time analytics platforms where data movement can become a bottleneck. The high-bandwidth host interface also enables advanced offload features to operate at full efficiency without saturating the PCIe bus.

Compatibility with Next-Generation Server Platforms

Servers equipped with modern CPUs and chipsets increasingly rely on PCIe Gen 5 to support emerging accelerators and high-speed peripherals. The ConnectX-7 HHHL adapter card category is designed to integrate seamlessly with these platforms, ensuring long-term compatibility and investment protection. This makes the category suitable for future-ready data center builds that prioritize scalability and longevity.

Half-Height Half-Length Form Factor Optimization

The HHHL form factor used in this adapter card category provides a balance between performance and physical compatibility. Half-height, half-length designs are widely supported across enterprise and hyperscale server platforms, allowing these adapters to be deployed in a broad range of chassis configurations. This form factor ensures that high-performance networking can be introduced without requiring specialized or oversized server enclosures.

Thermal and Mechanical Considerations

Adapter cards in this category are engineered to manage the heat generated by high-speed networking components. A heatsink cooled by the server's chassis airflow keeps the card within its operating temperature range under sustained high load, so the host platform must provide adequate airflow across the slot. Mechanical design considerations also ensure secure installation and reliable electrical connectivity within dense server environments.

Advanced Offload and Acceleration Capabilities

A major differentiator of the NVIDIA ConnectX-7 adapter card category is the extensive set of hardware offload and acceleration features. These capabilities reduce the processing burden on host CPUs by handling network-related tasks directly on the adapter. This results in lower latency, higher throughput, and improved overall system efficiency.

RDMA and GPUDirect

Remote Direct Memory Access (RDMA) lets one system read from or write to another system's memory directly, without involving the remote host's CPU or operating system in the data path. When combined with NVIDIA GPUDirect RDMA, data can move directly between the network adapter and GPU memory without first being staged in host memory. This capability is critical for AI training, inference, and scientific computing workloads where minimizing latency and maximizing data throughput directly impacts application performance.

Storage and Virtualization Offloads

This category of adapter cards includes hardware acceleration for storage protocols and virtualization workloads. Offloads for NVMe over Fabrics, virtual switching, and encapsulation protocols enable high-performance networking in software-defined data centers. These features allow operators to scale virtual machines and containers while maintaining consistent network performance.

Security and Reliability Features

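Security and reliability are fundamental requirements for enterprise and cloud data centers. The ConnectX-7 adapter card category incorporates security features to protect data in motion and ensure system integrity, including secure boot with firmware integrity validation, isolation features, and, on crypto-enabled models, hardware-based encryption offloads such as inline IPsec and TLS. The 900-9X7AH-0078-DTZ ships with secure boot enabled and cryptographic acceleration disabled, so workloads that depend on those encryption offloads require a crypto-enabled ConnectX-7 model.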

Resilience and High Availability Design

Dual-port connectivity enables redundant network paths, supporting high-availability architectures and fault tolerance. When the two ports are configured for bonding or multipathing, traffic can fail over to the surviving link if one link or its upstream switch fails, minimizing downtime. This resilience is essential for mission-critical applications that demand continuous operation.
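Failover between two ports typically follows an active-backup pattern: traffic stays on the active port while its link is up and moves to a healthy standby port when it is not. The Python model below is a minimal sketch of that policy, with hypothetical port names and a hypothetical `select_active` function; in practice the decision is made by the operating system's bonding driver, multipath software, or the fabric itself, not by application code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Port:
    name: str  # hypothetical port label, e.g. "port1"
    link_up: bool


def select_active(ports: List[Port], current: Optional[str]) -> Optional[str]:
    """Active-backup policy (hypothetical helper).

    Keep the current port while its link is up; otherwise fail over to the
    first port, in list order, whose link is up. Returns None if every link
    is down.
    """
    by_name = {port.name: port for port in ports}
    active = by_name.get(current) if current is not None else None
    if active is not None and active.link_up:
        return current
    for port in ports:
        if port.link_up:
            return port.name
    return None


ports = [Port("port1", link_up=True), Port("port2", link_up=True)]
active = select_active(ports, None)    # starts on "port1"
ports[0].link_up = False               # simulate a link or switch failure
active = select_active(ports, active)  # fails over to "port2"
print(active)
```

Keeping the current port while it is healthy, instead of always preferring the first port, avoids flapping traffic back and forth when a failed link recovers.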

Use Cases Across Data Center Segments

The ConnectX-7 HHHL adapter card category, including the Nvidia 900-9X7AH-0078-DTZ, is designed to address a wide range of deployment scenarios. From hyperscale cloud environments to enterprise private data centers and research institutions, these adapters provide the performance and flexibility required to support diverse workloads.

Artificial Intelligence and Machine Learning Infrastructure

AI and machine learning workloads place extreme demands on network infrastructure due to the need for fast synchronization between compute nodes. Adapter cards in this category enable high-bandwidth, low-latency communication that accelerates distributed training and inference. Support for NDR200 InfiniBand and GPU-oriented features such as GPUDirect RDMA makes this category a cornerstone of modern AI factories.
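To put the link rates in context, the sketch below estimates the transfer time of a ring all-reduce, a common algorithm for synchronizing gradients in distributed training, chosen here purely as an illustration. It uses the bandwidth-only model in which each node sends 2(N - 1)/N of the message, and it ignores latency, congestion, and protocol overhead; the 1 GB message and 8-node cluster are hypothetical.

```python
def ring_allreduce_seconds(message_bytes: float, nodes: int, link_gbps: float) -> float:
    """Bandwidth-only estimate of a ring all-reduce.

    Each node sends (and receives) 2 * (N - 1) / N of the message, so the
    transfer time is that volume divided by the per-node link rate.
    Latency, congestion, and protocol overhead are ignored.
    """
    if nodes < 2:
        return 0.0  # nothing to synchronize
    volume_bits = 2 * (nodes - 1) / nodes * message_bytes * 8
    return volume_bits / (link_gbps * 1e9)


# Hypothetical example: 1 GB of gradients synchronized across 8 nodes.
for gbps in (100.0, 200.0):
    seconds = ring_allreduce_seconds(1e9, 8, gbps)
    print(f"{gbps:.0f} Gb/s link: {seconds * 1e3:.0f} ms")
```

Under these assumptions, doubling the link rate halves the synchronization time: the example gives about 140 ms at 100 Gb/s and about 70 ms at 200 Gb/s.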

High-Performance Computing Clusters

HPC environments rely on deterministic latency and lossless communication to achieve optimal performance. The ConnectX-7 category supports these requirements through InfiniBand technology, RDMA acceleration, and scalable fabric integration. This enables researchers and engineers to run complex simulations and data-intensive computations more efficiently.

Cloud and Hyperscale Networking

In cloud data centers, network adapters must deliver consistent performance at massive scale. The dual-protocol nature of this category allows cloud providers to deploy unified networking solutions that support both traditional Ethernet services and specialized high-performance workloads. Hardware offloads further reduce CPU overhead, enabling higher tenant density and improved cost efficiency.

Scalability and Future-Proof Networking

Scalability is a defining attribute of the ConnectX-7 adapter card category, including the Nvidia 900-9X7AH-0078-DTZ. By supporting emerging standards such as PCIe Gen 5 and QSFP112, these adapters are designed to accommodate future increases in bandwidth demand. This ensures that data center networks built around this category can evolve without requiring frequent hardware replacements.

Integration with Software-Defined Networking Ecosystems

Modern data centers increasingly rely on software-defined networking and automation frameworks to manage complex infrastructures. Adapter cards in this category are compatible with leading networking stacks and orchestration platforms, enabling programmable control over network behavior. This integration allows operators to dynamically optimize performance, security, and resource allocation.