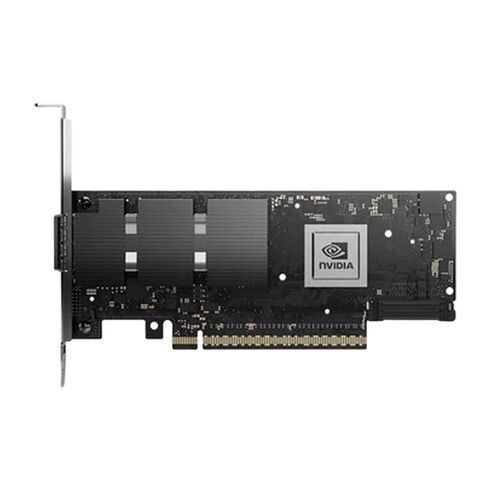

900-9X81E-00EX-ST0 Nvidia 2x400GbE 2-Port ConnectX-8 C8180 HHHL PCIe 6 x16 800Gb/s IB Network Adapter

Nvidia ConnectX-8 C8180 Network Adapter

The Nvidia 900-9X81E-00EX-ST0 is a high-performance dual-port ConnectX-8 C8180 HHHL PCIe Gen6 x16 network adapter engineered for advanced cloud, AI, and HPC workloads. Offering speeds up to 800Gb/s, it delivers unmatched scalability and efficiency for next-generation data centers.

General Information

- Manufacturer: Nvidia

- Part Number: 900-9X81E-00EX-ST0

- Device Type: High-speed Network Adapter

Technical Specifications

InfiniBand Network Interface

- Supports 200/100/50G PAM4 modulation

- Data rates: 800Gb/s, 400Gb/s, 200Gb/s, 100Gb/s

- InfiniBand XDR/NDR/HDR/HDR100/EDR compatibility

- IBTA v1.7 compliance

- 16 million I/O channels

- MTU range: 256 to 4,096 bytes; messages up to 2GB
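
As a rough illustration of what the MTU and message-size limits imply, the sketch below counts how many MTU-sized packets a single message would be split into. It assumes the standard InfiniBand MTU sizes (256 to 4,096 bytes) and reads "2GB" as 2 GiB. The function name `packets_per_message` is hypothetical and not part of any NVIDIA API.

```python
# Standard InfiniBand MTU sizes in bytes (assumption: the "4K" MTU is 4,096 bytes).
IB_MTU_SIZES = (256, 512, 1024, 2048, 4096)

# Assumption: the "2GB" maximum message size means 2 GiB (2**31 bytes).
MAX_MESSAGE_BYTES = 2 * 1024**3


def packets_per_message(message_bytes: int, mtu_bytes: int) -> int:
    """Number of MTU-sized packets needed to carry one message (payload only)."""
    if message_bytes <= 0 or mtu_bytes <= 0:
        raise ValueError("message_bytes and mtu_bytes must be positive")
    # Integer ceiling division: a partial last packet still counts as a packet.
    return -(-message_bytes // mtu_bytes)


if __name__ == "__main__":
    for mtu in IB_MTU_SIZES:
        count = packets_per_message(MAX_MESSAGE_BYTES, mtu)
        print(f"MTU {mtu:>4} bytes: {count:,} packets for a maximum-size message")
```

The count ignores per-packet headers, so it is a lower bound on the number of packets on the wire rather than a measured figure.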

Ethernet Network Interface

- Supports 100/50G PAM4 and 25/10G NRZ

- Speeds: 400Gb/s, 200Gb/s, 100Gb/s, 50Gb/s, 25Gb/s

- Maximum bandwidth: 800Gb/s (2x400Gb with splitter cable)

- Up to 8 split ports supported

Protocol Compatibility

InfiniBand Protocols

- XDR: 4 lanes × 200Gb/s per lane

- NDR: 4 lanes × 100Gb/s per lane

- NDR200: 2 lanes × 100Gb/s per lane

- HDR: 50Gb/s per lane

- HDR100: 2 lanes × 50Gb/s per lane

- EDR: 25Gb/s per lane

- FDR: 14.0625Gb/s per lane

- SDR: 1x/2x/4x at 2.5Gb/s per lane
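
The link rates follow from multiplying the lane count by the per-lane rate. A minimal sketch of that arithmetic, using the nominal per-lane figures from the list above (the table and function names are illustrative, not an NVIDIA API):

```python
# Nominal per-lane signaling rates in Gb/s, taken from the list above.
LANE_RATE_GBPS = {
    "XDR": 200.0,
    "NDR": 100.0,
    "HDR": 50.0,
    "EDR": 25.0,
    "FDR": 14.0625,
    "SDR": 2.5,
}


def link_rate_gbps(generation: str, lanes: int = 4) -> float:
    """Nominal link rate for a given InfiniBand generation and lane count."""
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    return LANE_RATE_GBPS[generation] * lanes


if __name__ == "__main__":
    print("XDR x4   :", link_rate_gbps("XDR"))      # 4 lanes at 200 Gb/s
    print("NDR x4   :", link_rate_gbps("NDR"))      # 4 lanes at 100 Gb/s
    print("NDR200 x2:", link_rate_gbps("NDR", 2))   # NDR200 is NDR on 2 lanes
    print("HDR100 x2:", link_rate_gbps("HDR", 2))   # HDR100 is HDR on 2 lanes
```

These are nominal figures; usable throughput is lower once encoding and protocol overhead are taken into account.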

Ethernet Standards

- 400GAUI-4 C2M, 400GBASE-CR4

- 200GAUI-2/4 C2M, 200GBASE-CR4

- 100GAUI-2/1 C2M, 100GBASE-CR4/CR2/CR1

- 50GAUI-2/1 C2M, 50GBASE-CR/R2

- 40GBASE-CR4/R2

- 25GBASE-R, 10GBASE-R, 10GBASE-CX4

- 1000BASE-CX, CAUI-4 C2M, 25GAUI C2M

- XLAUI C2M, XLPPI, SFI

Host Interface

- PCIe Gen6 x16 via edge connector

- Additional x16 MCIO connector (requires auxiliary cable)

- NVIDIA Multi-host support (up to 4 hosts)

- MSI/MSI-X support

Optimized Cloud Networking

Acceleration Features

- Stateless TCP offloads: IP/TCP/UDP checksum, LSO, LRO, GRO, TSS, RSS

- SR-IOV virtualization

- ASAP² Accelerated Switching and Packet Processing for SDN and VNF acceleration

- OVS acceleration

- Overlay network acceleration: VXLAN, Geneve, NVGRE

- Connection tracking with L4 firewall and NAT

- Hierarchical QoS, header rewrite, flow mirroring

- Flow-based statistics and flow aging

Advanced AI & HPC Networking

High-Performance Features

- RDMA and RoCEv2 acceleration

- Programmable congestion control

- NVIDIA GPUDirect RDMA and GPUDirect Storage

- In-network computing

- High-speed packet reordering

MPI Accelerations

- Burst-buffer offloads

- Collective operations offloads

- Rendezvous protocol offloads

- Enhanced atomic operations

Media Type: Optical Fiber

Platform Security

- Secure boot with hardware root of trust

- Secure firmware updates

- Flash encryption

- Device attestation (SPDM 1.2)

Cryptography

- Inline crypto acceleration: IPsec, TLS, MACsec, PSP

Management & Control

- Network control sideband interface (NC-SI)

- MCTP over SMBus and PCIe; PLDM for monitoring and firmware updates

- Redfish device enablement

- Field-replaceable unit (FRU) support

- SPDM protocols and data models

- SPI to flash

- JTAG IEEE 1149.1 and 1149.6

Network Boot Options

- InfiniBand or Ethernet boot

- PXE boot

- iSCSI boot

- UEFI support

900-9X81E-00EX-ST0 Network Adapter Overview

The Nvidia 900-9X81E-00EX-ST0 2x400GbE 2-port ConnectX-8 C8180 HHHL PCI Express 6 x16 SuperNIC belongs to a category of high-speed network adapters designed for modern data centers, hyperscale computing environments, and high-performance computing infrastructures. This category covers Ethernet and InfiniBand networking hardware that delivers up to 800Gb/s of throughput, low latency, and hardware offload capabilities. Such adapters are engineered for artificial intelligence training, large-scale data analytics, cloud-native workloads, and distributed storage systems that need predictable performance at scale.

Within this category, network interface cards are no longer passive connectivity components. Instead, they function as intelligent networking engines that offload complex tasks from host CPUs, accelerate data movement between compute nodes, and enable seamless scaling across thousands of servers. The Nvidia ConnectX-8 family defines a new tier of network adapters where bandwidth density, protocol versatility, and hardware-based acceleration converge to support both Ethernet and InfiniBand fabrics at extreme speeds. This positions the category as a foundational element for next-generation data center architectures.

ConnectX-8 Architecture and Evolution of Smart Network Interface Cards

The ConnectX-8 architecture marks a significant advancement in the evolution of smart network interface cards. This category of adapters builds upon multiple generations of Mellanox and Nvidia networking innovation, integrating enhanced packet processing pipelines, advanced congestion control mechanisms, and deep telemetry capabilities directly into the silicon. The result is a network adapter that operates as an active participant in workload orchestration rather than a simple data conduit.

Adapters in this category leverage PCI Express Gen 6 x16 interfaces to ensure that host-to-device communication does not become a bottleneck when operating at aggregate speeds of up to 800 gigabits per second. The architecture is optimized to handle simultaneous streams of Ethernet and InfiniBand traffic, enabling flexible deployment scenarios across heterogeneous environments. This adaptability is critical for data centers that must support both legacy networking protocols and emerging high-speed interconnect standards without compromising performance or efficiency.

PCI Express Gen 6 Integration and Host Interface Optimization

A defining characteristic of this category is the integration of PCI Express Gen 6 technology, which doubles the per-lane transfer rate of PCIe Gen 5 and so increases the available bandwidth between the network adapter and the host system. By utilizing a full x16 lane configuration, adapters such as the ConnectX-8 C8180 ensure that data transfer rates between the NIC and the CPU or GPU complex remain balanced with the network throughput provided by dual 400Gb/s ports. This balance is essential for avoiding congestion and maintaining predictable latency under heavy workloads.
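
To make the balance concrete, the sketch below compares the raw one-direction rate of a x16 host link with an 800Gb/s network rate. The per-lane figures (32 GT/s for Gen 5, 64 GT/s for Gen 6) come from the PCIe specifications rather than from this listing, the function names are hypothetical, and the calculation deliberately ignores encoding, FLIT, and protocol overhead.

```python
# Raw per-lane signaling rate in GT/s by PCIe generation (one bit per transfer).
PCIE_GT_PER_LANE = {5: 32, 6: 64}


def pcie_raw_gbps(generation: int, lanes: int = 16) -> int:
    """Raw one-direction link rate in Gb/s, before encoding and protocol overhead."""
    return PCIE_GT_PER_LANE[generation] * lanes


def headroom_gbps(generation: int, network_gbps: int = 800, lanes: int = 16) -> int:
    """Positive when the host link can carry the full network rate, negative otherwise."""
    return pcie_raw_gbps(generation, lanes) - network_gbps


if __name__ == "__main__":
    for gen in (5, 6):
        print(
            f"PCIe Gen{gen} x16: {pcie_raw_gbps(gen)} Gb/s raw, "
            f"headroom vs 800 Gb/s: {headroom_gbps(gen)} Gb/s"
        )
```

Under these assumptions a Gen 5 x16 slot falls short of 800Gb/s while a Gen 6 x16 slot leaves some margin, which is the balance the paragraph above describes. Real headroom is smaller once overhead is included.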

The host interface optimization extends beyond raw bandwidth. Advanced DMA engines, multi-queue support, and intelligent interrupt moderation reduce CPU overhead and improve system responsiveness. These features are particularly important in environments running containerized workloads, virtual machines, or microservices, where efficient resource utilization directly impacts operational costs and service quality.

Dual-Port 400GbE and 800Gb/s Aggregate Bandwidth Capabilities

This category of network adapters is defined by its ability to deliver dual-port 400 Gigabit Ethernet connectivity, resulting in an aggregate bandwidth of up to 800 gigabits per second. Such performance levels enable data centers to consolidate network connections, reduce cabling complexity, and increase overall fabric efficiency. By providing multiple high-speed ports on a single HHHL form factor card, these adapters maximize bandwidth density within standard server chassis.

The dual-port configuration also enhances resiliency and flexibility. Network architects can deploy active-active configurations, implement advanced load balancing strategies, or segment traffic across multiple fabrics to optimize performance and fault tolerance. This makes the category particularly attractive for mission-critical applications where uptime, scalability, and deterministic performance are essential.
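
One common way to spread traffic across two active ports is to hash each flow's 5-tuple so that all packets of a flow leave through the same port. The sketch below illustrates only that general idea. It is not the adapter's or any driver's actual load-balancing mechanism, and `pick_port` and the CRC32-based hash are illustrative choices.

```python
import zlib


def pick_port(
    src_ip: str,
    dst_ip: str,
    src_port: int,
    dst_port: int,
    protocol: str,
    num_ports: int = 2,
) -> int:
    """Map a flow's 5-tuple to a port index in the range [0, num_ports)."""
    if num_ports <= 0:
        raise ValueError("num_ports must be positive")
    # Build a stable byte key from the 5-tuple, then hash it.
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{protocol}".encode()
    return zlib.crc32(key) % num_ports


if __name__ == "__main__":
    # Hypothetical flows: same endpoints, different source ports.
    for sport in range(40000, 40008):
        port = pick_port("10.0.0.1", "10.0.0.2", sport, 4791, "udp")
        print(f"flow with source port {sport} -> adapter port {port}")
```

Because the mapping is deterministic, packets of one flow stay in order on a single port, while different flows can land on different ports.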

Support for 400 Gigabit Ethernet Standards

Adapters in this category support the latest 400 Gigabit Ethernet standards, ensuring compatibility with modern switches, transceivers, and cabling solutions. This includes support for advanced modulation schemes and error correction techniques that maintain signal integrity over high-speed links. The ability to operate reliably at 400GbE speeds is crucial for large-scale deployments where even minor performance degradations can have significant downstream effects.

In addition to raw throughput, the category emphasizes low and consistent latency. Hardware-based packet scheduling, congestion management, and flow control mechanisms work together to deliver predictable performance even under bursty or highly parallel traffic patterns. This is particularly valuable for latency-sensitive workloads such as real-time analytics, financial modeling, and distributed AI training.
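
Link speed sets a floor under per-frame latency: the time it takes to put a frame's bits on the wire. The sketch below works through that arithmetic for a few link speeds. It assumes payload bytes only (no preamble, inter-frame gap, or FEC latency), and the function name is hypothetical.

```python
def serialization_delay_ns(frame_bytes: int, link_gbps: float) -> float:
    """Time in nanoseconds to clock one frame onto a link of the given speed."""
    if frame_bytes <= 0 or link_gbps <= 0:
        raise ValueError("frame_bytes and link_gbps must be positive")
    # bits / (Gb/s) gives nanoseconds, because 1 Gb/s is one bit per nanosecond.
    return frame_bytes * 8 / link_gbps


if __name__ == "__main__":
    for speed in (100, 200, 400):
        delay = serialization_delay_ns(1500, speed)
        print(f"1500-byte frame at {speed} Gb/s: {delay:.1f} ns")
```

Queuing, switching, and host-side processing add to this figure, so it is a lower bound, not a latency prediction.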

InfiniBand XDR and Converged Networking Capabilities

Beyond Ethernet, this category encompasses full support for InfiniBand XDR, offering ultra-low latency and high message rates for tightly coupled compute clusters. InfiniBand XDR enables efficient scaling of high-performance computing and artificial intelligence workloads by minimizing communication overhead between nodes. The ability to operate in both Ethernet and InfiniBand environments positions these adapters as versatile building blocks for converged network architectures.

Converged networking allows organizations to standardize on a single adapter platform while supporting multiple fabrics and protocols. This reduces hardware diversity, simplifies management, and lowers total cost of ownership. The ConnectX-8 based category is designed to seamlessly switch between or simultaneously support different networking modes, making it suitable for hybrid deployments and evolving infrastructure requirements.

Form Factor, Thermal Design, and Deployment

The HHHL PCI Express form factor defines another important aspect of this category. By adhering to standard half-height, half-length dimensions, these adapters can be deployed across a wide range of server platforms without requiring specialized chassis or cooling solutions. This compatibility simplifies integration into existing data center infrastructures while enabling straightforward upgrades to higher network speeds.

Thermal design considerations are critical at these performance levels. Adapters in this category incorporate advanced heat dissipation techniques, including optimized heat sinks and airflow management, to maintain reliable operation under sustained high load. Efficient thermal performance ensures longevity and stability, even in densely packed server environments.

Scalability Across Data Center Architectures

Scalability is a core principle of this category, enabling organizations to build networks that grow alongside their compute and storage resources. The high bandwidth density and protocol flexibility of ConnectX-8 based adapters support leaf-spine architectures, flat fabrics, and custom topologies designed for specific workload requirements. This adaptability makes the category suitable for both enterprise data centers and hyperscale deployments.

As workloads evolve and data volumes increase, the ability to scale network capacity without disruptive changes becomes increasingly valuable. This category provides a forward-looking foundation that supports future expansion and emerging technologies.

Future-Proofing Data Center Investments

By adopting network adapters in this category, organizations position themselves to meet future performance demands without frequent hardware refreshes. Support for the latest networking standards, combined with flexible firmware and software ecosystems, ensures long-term compatibility and adaptability. This future-proofing is particularly important in environments where infrastructure investments must deliver value over extended lifecycles.

The Nvidia 900-9X81E-00EX-ST0 ConnectX-8 C8180 SuperNIC exemplifies this category’s focus on innovation, performance, and scalability. As data centers continue to evolve toward more distributed, accelerated, and intelligent architectures, this class of network adapters will remain a critical enabler of next-generation computing.