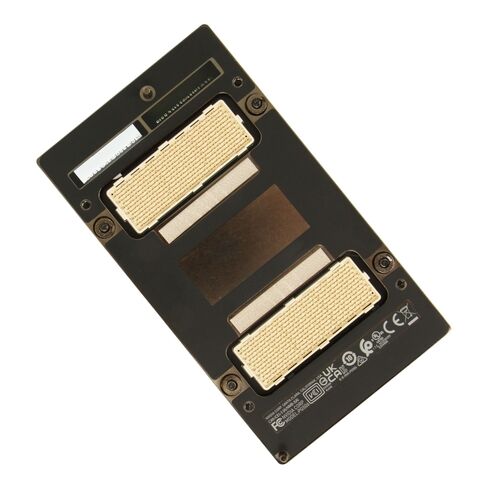

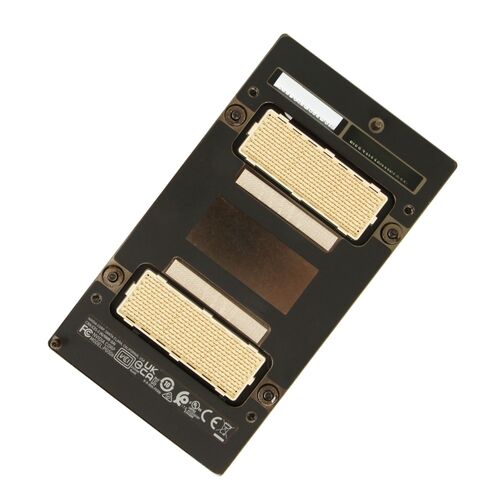

965-2G506-0030-200 NVIDIA A100 Tensor Core 80GB 500W SXM4 GPU

Overview of NVIDIA 965-2G506-0030-200 A100 80GB GPU

The NVIDIA 965-2G506-0030-200 A100 Tensor Core 80GB 500W SXM4 GPU is a data-center accelerator built for enterprise workloads: AI training and inference, machine learning, data analytics, cloud infrastructure, and high-performance computing. Its 80GB of HBM2e memory and third-generation Tensor Cores support scalable performance across demanding parallel workloads.

General Information

- Manufacturer: Nvidia

- Part Number: 965-2G506-0030-200

- Product Type: GPU

Technical Specifications

- Chipset Manufacturer: Nvidia

- Product Class: Professional / Data-Center Grade

- Brand Series: NVIDIA Data Center GPU (successor to the Tesla line)

- Model Number: A100

- Graphics Memory Capacity: 80GB HBM2e

- Power Rating: 500W

- Form Factor: SXM4

- Architecture: NVIDIA Ampere with Tensor Cores

Powerful Tensor Core Architecture

- Third-generation Tensor Cores for intelligent processing

- Improved sparse matrix computation for boosted efficiency

- Speed-enhanced matrix multiplication for neural networks

- High-bandwidth HBM2e memory enabling faster data movement

SXM4 Design

- Improved cooling for nonstop performance

- Higher power delivery for intensive operations

- NVLink-based GPU-to-GPU communication for compact, scalable multi-GPU systems

Compatibility and Integration

- NVIDIA CUDA ecosystems

- TensorFlow, PyTorch, and MXNet

- NVIDIA NCCL for multi-GPU communication

- NVIDIA AI Enterprise software suite
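Before installing frameworks on a system that holds this module, it can help to confirm what the driver reports. The following is a minimal sketch, assuming the NVIDIA driver and its `nvidia-smi` tool are installed. The helper names `parse_gpu_csv` and `query_gpus` are hypothetical, and the sample line is illustrative rather than captured from real hardware.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi's --query-gpu option.
QUERY_FIELDS = ["name", "memory.total", "power.limit"]


def parse_gpu_csv(text):
    """Parse `--format=csv,noheader,nounits` output into a list of dicts."""
    rows = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(record) != len(QUERY_FIELDS):
            continue  # skip blank or malformed lines
        name, mem_mib, power_w = (field.strip() for field in record)
        rows.append({
            "name": name,
            "memory_gib": float(mem_mib) / 1024,  # nvidia-smi reports MiB
            "power_limit_w": float(power_w),
        })
    return rows


def query_gpus():
    """Run nvidia-smi and parse its output (needs an NVIDIA driver)."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)


# Hypothetical sample line, used so the parser can be tried without a GPU.
sample = "NVIDIA A100-SXM4-80GB, 81920, 500.00\n"
gpus = parse_gpu_csv(sample)
print(gpus)
```

On a real system, call `query_gpus()` instead of parsing the sample string. The parser assumes every field is numeric where expected, so a driver that reports a value as unavailable would need extra handling.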

The Nvidia 965-2G506-0030-200 A100 Tensor Core 80GB GPU

The NVIDIA 965-2G506-0030-200 A100 Tensor Core 80GB 500W SXM4 is an accelerator module, not a PCIe add-in card, and it targets enterprise-class high-performance computing. As part of the NVIDIA A100 family, it is engineered for large-scale data processing, AI acceleration, cloud-scale computation, and deep learning. Its 80GB of HBM2e memory and third-generation Tensor Cores suit hyperscale data centers, AI research institutes, cloud infrastructure deployments, and multi-tenant GPU clusters.

Ampere-Based Computing Capabilities

The NVIDIA 965-2G506-0030-200 A100 GPU is based on the NVIDIA Ampere architecture, which improves data processing and floating-point performance over the preceding Volta generation. Ampere adds new Tensor Core instructions and data formats, greater parallelism, and Multi-Instance GPU (MIG) support, which partitions a single GPU's resources across workloads. Together, these features let one accelerator serve a wide range of data-center workloads, from training and inference to analytics and simulation.

HBM2e Memory Integration

A defining feature of the NVIDIA A100 80GB SXM4 GPU is its HBM2e memory. With 80GB of capacity and roughly 2 TB/s of memory bandwidth, the module supports real-time analytics, large neural networks, and training on massive datasets. The large capacity lets deep learning models, high-resolution simulations, and parallel workloads keep more of their working set on the GPU, which reduces transfers from host memory, a common bottleneck.
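To get a rough sense of what 80GB holds, the arithmetic can be sketched as below. This is a back-of-the-envelope estimate, not a sizing tool. The function names, the 13-billion and 70-billion parameter counts, and the 1.2 overhead factor are illustrative assumptions, and GB is treated as 10^9 bytes.

```python
GPU_MEMORY_GB = 80  # capacity stated for this module


def weights_gb(num_params, bytes_per_param):
    """Memory needed for the model weights alone, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits(num_params, bytes_per_param, overhead_factor=1.2):
    """True if weights plus an assumed overhead fit in GPU memory.

    overhead_factor is a placeholder for activations, caches, and
    framework buffers; real overhead depends on the workload.
    """
    needed = weights_gb(num_params, bytes_per_param) * overhead_factor
    return needed <= GPU_MEMORY_GB


print(weights_gb(13e9, 2))  # 13B parameters stored as FP16 (2 bytes each)
print(fits(13e9, 2))        # fits on one module under these assumptions
print(fits(70e9, 2))        # does not fit; would need several GPUs
```

Training usually needs several times more memory than the weights alone, because gradients and optimizer state are stored as well, so the overhead factor here is best read as an inference-style estimate.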

Tensor Core Acceleration

Tensor Cores are central to the A100's AI performance. The third-generation Tensor Cores accelerate AI training, inference, and scientific computing. They add the TF32 format, which keeps the 8-bit exponent range of FP32 but uses a 10-bit mantissa, alongside FP16 and BF16 support. TF32 lets existing FP32 models train significantly faster with little or no code change, so data scientists can iterate more quickly on complex architectures.
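The precision trade-off of TF32 can be illustrated on a CPU. TF32 keeps the sign bit and 8 exponent bits of FP32 but only 10 of its 23 mantissa bits. The sketch below emulates that by zeroing the 13 low mantissa bits. The function name `to_tf32` is hypothetical, and simple truncation is an assumption made for clarity, so the hardware's actual rounding may differ.

```python
import math
import struct


def to_tf32(x):
    """Approximate TF32 precision: convert to float32, drop 13 mantissa bits."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits &= 0xFFFFE000  # keep sign, 8 exponent bits, top 10 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


print(to_tf32(1.0))           # exactly representable, unchanged
print(to_tf32(1 + 2 ** -10))  # smallest step above 1.0 that TF32 keeps
print(to_tf32(1 + 2 ** -11))  # finer than TF32 resolution, collapses to 1.0
print(to_tf32(math.pi))       # about three decimal digits survive
```

On the A100, this reduced precision applies to Tensor Core multiplication inputs, while products are accumulated in FP32, which is why many models tolerate it well.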

High-Performance Computing (HPC)

In supercomputing, the NVIDIA 965-2G506-0030-200 A100 80GB SXM4 GPU offers strong double-precision performance, making it suitable for climate modeling, quantum mechanics, fluid dynamics simulations, medical research, and genomics. Its Tensor Cores also accelerate FP64 matrix operations, so the module fits workloads that require numerical accuracy and stability.

Multi-Instance GPU (MIG) Capabilities

The Multi-Instance GPU (MIG) feature allows the NVIDIA A100 80GB SXM4 accelerator to be divided into up to seven isolated GPU instances, each with dedicated compute and memory resources. This enables data centers to run multiple AI or HPC workloads simultaneously without one workload interfering with another's performance.
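Partitioning can be reasoned about as a slice budget. On an A100 80GB, MIG exposes seven compute slices and eight memory slices, and each instance profile consumes some of each. The sketch below checks only that budget. The function name `fits_slice_budget` is hypothetical, and the driver's real placement rules are stricter than a simple sum, so treat a passing result as necessary rather than sufficient.

```python
# (compute slices, memory slices) consumed by each profile on an A100 80GB.
PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}
MAX_COMPUTE_SLICES = 7
MAX_MEMORY_SLICES = 8


def fits_slice_budget(requested):
    """Check that the requested profiles stay within the slice totals.

    This ignores placement constraints enforced by the driver.
    """
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= MAX_COMPUTE_SLICES and memory <= MAX_MEMORY_SLICES


print(fits_slice_budget(["1g.10gb"] * 7))          # seven small instances
print(fits_slice_budget(["4g.40gb", "3g.40gb"]))   # one large, one medium
print(fits_slice_budget(["4g.40gb", "4g.40gb"]))   # exceeds compute slices
```

A check like this is useful when planning how to share one module among tenants before creating the instances with NVIDIA's own tooling.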

SXM4 Form Factor and Power Efficiency

The SXM4 form factor mounts the GPU directly on the server baseboard, which allows higher power delivery and better thermal performance than conventional PCIe cards. Together with its optimized cooling layout, this makes it well suited to dense GPU clusters inside enterprise servers.

Efficient 500W Power Consumption

Although the NVIDIA A100 80GB GPU draws up to 500W in this configuration, its performance per watt is significantly better than that of previous generations. Higher output per watt helps keep data center operations sustainable.
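For capacity planning, the 500W figure translates into an energy budget with simple arithmetic. The sketch below is an upper-bound estimate for the GPUs only. The eight-GPU node, the function name, and the `utilization` parameter are illustrative assumptions, and CPUs, networking, and cooling are not included.

```python
GPU_POWER_W = 500  # per-module maximum stated for this part


def node_gpu_energy_kwh(num_gpus, hours, utilization=1.0):
    """Upper-bound GPU energy for one node, in kWh.

    utilization scales the maximum draw; 1.0 means every GPU runs at
    its full power limit for the whole period.
    """
    return num_gpus * GPU_POWER_W * utilization * hours / 1000


print(node_gpu_energy_kwh(8, 24))       # hypothetical 8-GPU node, one day
print(node_gpu_energy_kwh(8, 24, 0.5))  # same node at half the maximum draw
```

Multiplying the result by a local electricity rate gives a first-order running cost for comparing deployment options.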

Nvidia CUDA Toolkit and Libraries

CUDA is the foundation for developing and optimizing GPU-accelerated applications. Its libraries cover linear algebra (cuBLAS), deep learning (cuDNN), random number generation (cuRAND), and other scientific computing, giving developers optimized building blocks for the A100.

Reliability and Data Integrity Features

The NVIDIA A100 80GB SXM4 GPU is built to run continuously under heavy load in demanding enterprise data centers. Fault-tolerance mechanisms such as ECC memory protection, together with advanced thermal control, support long-term reliability and predictable performance.

High-End Server Ecosystems

From hyperscale data centers to high-frequency trading firms, the NVIDIA A100 series delivers the performance that modern server workloads require, with headroom as those workloads grow.