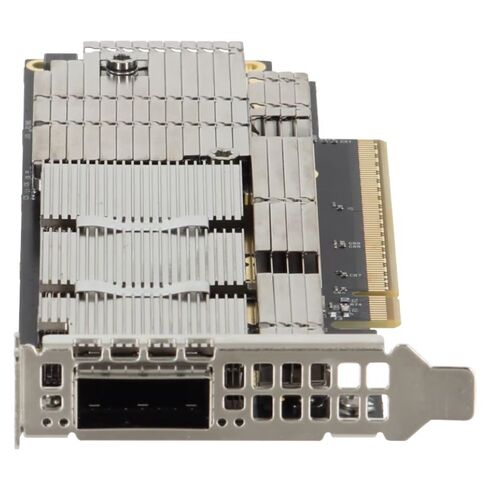

900-9X766-003N-SQ0 Nvidia ConnectX-7 NDR IB Single-Port 400GBPS OSFP PCIe 5.0 X16 Network Adapter

Same product also available in:

| SKU/MPN | Warranty | Price | Condition | You save |
|---|---|---|---|---|
| 900-9X766-003N-SQ0 | 1 Year | $1,472.00 | Excellent Refurbished | $515.20 (26%) |
| 900-9X766-003N-SQ0 | 1 Year | $1,520.00 | Factory-Sealed New in Original Box (FSB) | $532.00 (26%) |

Nvidia 900-9X766-003N-SQ0 ConnectX-7 Adapter

The Nvidia 900-9X766-003N-SQ0 ConnectX-7 is a high-performance single-port network interface card designed for cutting-edge datacenter environments. Offering 400Gbps NDR InfiniBand and advanced Ethernet connectivity, this PCIe 5.0 x16 adapter delivers next-level bandwidth for modern workloads, cloud computing, artificial intelligence, and HPC clusters.

Key Manufacturer Details

- Brand: Nvidia

- Part Number: 900-9X766-003N-SQ0

- Model: ConnectX-7 NDR IB PCIe Adapter

- Crypto: Disabled

- Secure Boot: Enabled

Physical Design & Dimensions

- Engineered for compatibility and efficiency, the adapter is designed in a compact half-height, half-length PCIe form factor, making it suitable for space-constrained rack environments.

Card Measurements

- Height: 2.71 inches (68.90mm)

- Length: 6.6 inches (167.65mm)

Connectivity & Networking

- Port: Single OSFP InfiniBand and Ethernet

- Default Mode: InfiniBand NDR

- Compatibility: InfiniBand + Ethernet interoperability

InfiniBand Modes

- NDR: 4 lanes × 100Gb/s per lane

- NDR200: 2 lanes × 100Gb/s per lane

- HDR: 50Gb/s per lane

- HDR100: 2 lanes × 50Gb/s

- EDR: 25Gb/s per lane

- FDR: 14.0625Gb/s per lane

- SDR: 1×/2×/4× at 2.5Gb/s per lane
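As a quick sanity check on the modes above, aggregate link speed is the lane count multiplied by the per-lane rate. The sketch below is illustrative only: the lane counts for NDR, NDR200, and HDR100 come from the list, while the 4-lane width used for HDR, EDR, and FDR is an assumption (the list gives only per-lane rates for those), and `aggregate_gbps` is a hypothetical helper name.

```python
# Per-lane signalling rates in Gb/s, as given in the InfiniBand mode list.
PER_LANE_GBPS = {
    "NDR": 100.0,
    "NDR200": 100.0,
    "HDR": 50.0,
    "HDR100": 50.0,
    "EDR": 25.0,
    "FDR": 14.0625,
}

# Lane counts: NDR, NDR200, and HDR100 are stated in the list.
# The 4-lane width for HDR, EDR, and FDR is an ASSUMPTION for illustration.
LANES = {"NDR": 4, "NDR200": 2, "HDR": 4, "HDR100": 2, "EDR": 4, "FDR": 4}


def aggregate_gbps(mode: str) -> float:
    """Return lanes x per-lane rate for the given InfiniBand mode."""
    return LANES[mode] * PER_LANE_GBPS[mode]


if __name__ == "__main__":
    for mode in PER_LANE_GBPS:
        print(f"{mode:7s} {LANES[mode]} x {PER_LANE_GBPS[mode]:g} Gb/s "
              f"= {aggregate_gbps(mode):g} Gb/s")
```

These are raw signalling figures; usable data throughput is somewhat lower once encoding and protocol overhead are accounted for.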

Ethernet Capabilities

- The 900-9X766-003N-SQ0 adapter supports a broad spectrum of Ethernet standards, from legacy speeds to 400G, making it well suited to multi-generational infrastructures.

- 400G: 400GAUI-4 C2M, 400GBASE-CR4

- 200G: 200GAUI-4 C2M, 200GBASE-CR4

- 100G: 100GAUI-2 C2M, 100GBASE-CR4/CR2/CR1

- 50G: 50GAUI-2 C2M, 50GAUI-1 C2M, 50GBASE-CR/R2

- 40G: 40GBASE-CR4, 40GBASE-R2

- 25G: 25GBASE-R, 25GAUI C2M

- 10G: 10GBASE-R, 10GBASE-CX4

- 1G: 1000BASE-CX

- Additional: XLAUI C2M, XLPPI, SFI, CAUI-4 C2M.

Power and Electrical Specifications

- Operating Voltages: 12V, 3.3VAUX

- Maximum Current Draw: 100mA (likely the 3.3VAUX rail limit rather than total card draw; confirm against the vendor datasheet)

Thermal and Environmental Ratings

Temperature Tolerance

- Operational: 0°C to 55°C

- Storage: -40°C to 70°C

Humidity Ranges

- Operational: 10% – 85% RH

- Non-Operational: 10% – 90% RH

Highlighted Advantages

- Optimized for AI, deep learning, and HPC workloads

- Offers 400Gbps peak bandwidth for maximum performance

- Supports both InfiniBand and Ethernet networking protocols

- Compact PCIe half-height design for efficient rack deployments

- Secure Boot capability for trusted deployments

Form Factor and Physical

- Form factor: Half-height, half-length PCIe card with a single OSFP port

- Interface: PCIe 5.0 x16

- Dimensions: 2.71 inches (68.90mm) high × 6.6 inches (167.65mm) long

- Cooling: Active or passive heatsink options depending on chassis airflow

- Weight: Not listed here; check the product datasheet for the exact figure

Electrical and Thermal

- Power draw: Variable by firmware and workload — plan for elevated power on sustained line-rate use.

- Power delivery: Standard PCIe slot power plus auxiliary bus power when required.

- Thermal solution: Ensure chassis airflow meets recommended CFM; thermal throttling can occur under inadequate cooling.

Protocol and Software

- Protocols: InfiniBand NDR, RoCE v2, Ethernet at 400G

- Drivers: Broad OS support including mainstream Linux kernels and enterprise distributions; vendor-supplied drivers and OFED stacks for RDMA.

- Firmware: Updatable via vendor tools — firmware revisions affect performance, compatibility, and stability.

900-9X766-003N-SQ0 Nvidia ConnectX-7 NDR IB High-Level Overview

The 900-9X766-003N-SQ0 Nvidia ConnectX-7 NDR IB Single-Port 400GBPS OSFP PCIe 5.0 X16 Network Adapter is a top-tier network interface card (NIC) for data center and high-performance computing (HPC) use. Designed for ultra-low-latency, high-throughput environments and heavy I/O workloads, it is built around Nvidia's ConnectX-7 family and targets demanding use cases such as AI/ML training, large-scale virtualization, telco infrastructure, and financial trading platforms.

Key Features of the ConnectX-7 900-9X766-003N-SQ0 Adapter

Raw Performance and Bandwidth

The adapter is rated for 400 Gbps on its single OSFP port. It uses PCIe 5.0 x16, which offers roughly 504 Gb/s of raw bandwidth per direction, so the host bus stays above the port rate and the card can sustain line-rate transfers for large data movements.

Programmability and Ecosystem

Programmable match/action pipelines and integration with Data Processing Units (DPUs) enable advanced networking functions such as in-network telemetry, flow steering, and custom packet processing. ConnectX-7 fits into the broader Nvidia ecosystem for DPUs and accelerators.

Technical Specifications: What to Expect

The specifications listed on this page (form factor, electrical and thermal limits, protocols and software) are the most important ones for capacity planning and system design with the 900-9X766-003N-SQ0 ConnectX-7 adapter.

Compatibility and Integration Considerations

Server and Motherboard Compatibility

Before purchasing, confirm that the server platform provides a PCIe 5.0 x16 slot and has sufficient physical clearance for the card and its OSFP cable or transceiver. The adapter is backward compatible with PCIe 4.0 and 3.0 slots, but the lower host bandwidth of those generations caps achievable throughput below the 400 Gb/s port rate.
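The effect of the slot generation can be estimated with simple arithmetic. The sketch below is a rough upper bound under stated assumptions: it uses the standard per-lane rates (8, 16, and 32 GT/s for PCIe 3.0, 4.0, and 5.0) and 128b/130b line encoding, and it ignores packet (TLP/DLLP) overhead, so real throughput will be lower. The function names are hypothetical.

```python
# Per-lane transfer rate in GT/s for each PCIe generation.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}

# PCIe 3.0 through 5.0 use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130

PORT_GBPS = 400.0  # rated port speed of the adapter


def pcie_raw_gbps(gen: int, lanes: int = 16) -> float:
    """Raw one-direction PCIe bandwidth in Gb/s, before protocol overhead."""
    return PCIE_GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY


def host_cap_gbps(gen: int, lanes: int = 16, port_gbps: float = PORT_GBPS) -> float:
    """Upper bound on throughput: the slower of the port and the host bus."""
    return min(port_gbps, pcie_raw_gbps(gen, lanes))


if __name__ == "__main__":
    for gen in sorted(PCIE_GT_PER_S):
        print(f"PCIe {gen}.0 x16: bus {pcie_raw_gbps(gen):6.1f} Gb/s, "
              f"cap {host_cap_gbps(gen):6.1f} Gb/s")
```

Under these assumptions a PCIe 4.0 x16 slot limits the card to about 252 Gb/s and a PCIe 3.0 x16 slot to about 126 Gb/s, while PCIe 5.0 x16 leaves the 400 Gb/s port as the limit.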

Switch and Fabric Compatibility

This adapter integrates with modern leaf-spine switches that support 400GbE or InfiniBand fabrics. Verify switch OSFP port support, transceiver compatibility (QSFP-DD vs OSFP differences), and whether passive or active cables and optics are required.

Transceivers and Cabling

The OSFP port accepts direct-attach copper cables (DAC), active optical cables (AOC), or pluggable transceiver modules. Match the cable or optic to the adapter's optical and electrical specifications: an incompatible interconnect will limit performance or prevent link-up.

AI/ML Training and Multi-GPU Systems

For training deep learning models across many GPU nodes, this adapter provides the bandwidth and RDMA offload required for efficient parameter synchronization and off-host communication.

Storage and NVMe over Fabrics (NVMe-oF)

NVMe-oF deployments benefit from RDMA and hardware acceleration to achieve low-latency, high-IOPS storage networks. Use the ConnectX-7 adapter as an accelerator for NVMe targets and initiators to boost storage fabric performance.

Cloud, Virtualization, and Telco Infrastructure

In cloud and telco environments where multi-tenancy, DPDK acceleration, and SR-IOV are crucial, ConnectX-7’s virtualization offloads and representor support reduce hypervisor overhead and increase VM-to-network throughput.
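On Linux, one way to see whether SR-IOV is available on a port is to read the standard `sriov_totalvfs` and `sriov_numvfs` files under the interface's sysfs device directory. The sketch below assumes a Linux host; the interface name `ens1f0np0` and the helper `sriov_status` are hypothetical, and the actual name depends on the server.

```python
from pathlib import Path


def sriov_status(iface: str, sysfs_root: str = "/sys/class/net") -> dict:
    """Return supported and currently enabled VF counts for an interface.

    Values are None when the file is missing (no SR-IOV support exposed,
    or the interface does not exist) or cannot be parsed.
    """
    device_dir = Path(sysfs_root) / iface / "device"

    def read_int(name: str):
        try:
            return int((device_dir / name).read_text().strip())
        except (OSError, ValueError):
            return None

    return {
        "total_vfs": read_int("sriov_totalvfs"),  # max VFs the device supports
        "num_vfs": read_int("sriov_numvfs"),      # VFs currently enabled
    }


if __name__ == "__main__":
    # "ens1f0np0" is a placeholder; substitute the real interface name.
    print(sriov_status("ens1f0np0"))
```

Enabling virtual functions typically also requires SR-IOV to be turned on in the adapter firmware and the server BIOS; consult the vendor documentation for the exact procedure.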

Performance Tuning and Best Practices

Firmware and Driver Management

Keep firmware and drivers up to date: performance patches, security fixes, and stability improvements often arrive through driver and firmware releases.

Jumbo Frames and MTU Considerations

Increasing MTU (jumbo frames) reduces per-packet CPU overhead and can improve throughput for large sequential transfers. However, ensure all fabric elements — switches, routers, and end-hosts — support the chosen MTU.
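To put numbers on the per-packet overhead, the sketch below estimates packets per second at line rate for a given MTU in Ethernet mode. It assumes full-size frames and 38 bytes of per-frame overhead on the wire (14-byte header, 4-byte FCS, 8-byte preamble, 12-byte inter-frame gap, no VLAN tag). The helper names and the device names in the example are hypothetical.

```python
# Per-frame overhead on the wire beyond the MTU-sized payload:
# Ethernet header (14) + FCS (4) + preamble/SFD (8) + inter-frame gap (12).
ETH_OVERHEAD_BYTES = 14 + 4 + 8 + 12


def packets_per_second(link_gbps: float, mtu: int) -> float:
    """Full-size frames per second needed to fill the link."""
    wire_bits = (mtu + ETH_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / wire_bits


def payload_efficiency(mtu: int) -> float:
    """Fraction of wire bandwidth carrying MTU payload."""
    return mtu / (mtu + ETH_OVERHEAD_BYTES)


def usable_mtu(path_mtus: dict) -> int:
    """The MTU a path can carry is the smallest MTU of any element on it."""
    return min(path_mtus.values())


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {packets_per_second(400, mtu) / 1e6:.1f} Mpps, "
              f"{payload_efficiency(mtu) * 100:.2f}% payload")
    # Hypothetical path: one switch left at the default MTU limits the path.
    print(usable_mtu({"host-a": 9000, "leaf-1": 9000, "spine-1": 1500, "host-b": 9000}))
```

At 400 Gb/s this works out to roughly 32.5 million packets per second with a 1500-byte MTU versus about 5.5 million with a 9000-byte MTU, which is where the CPU savings come from. The last line shows why every element must be configured consistently: a single device at 1500 bytes limits the whole path.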

Network Security and Isolation

Use hardware-enforced isolation features such as SR-IOV for tenant separation in virtualized environments. Use ACLs and flow steering to isolate management traffic from data traffic. Install only signed, vendor-provided, validated firmware to reduce supply-chain risk.

Log Management and Alerting

Integrate NIC-level logs with centralized log systems. Configure alerts for link flaps, CRC errors, and thermal throttling events to allow proactive remediation.
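One simple way to turn counters into alerts is to compare two successive samples and flag any counter whose increase reaches a threshold. The sketch below is a minimal illustration: the `NicSample` fields, the threshold values, and the `alerts` helper are all hypothetical, and how the counters are collected (driver statistics, vendor tools, or a monitoring agent) is left open.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NicSample:
    """One snapshot of cumulative NIC counters (hypothetical field names)."""
    link_down_events: int
    crc_errors: int
    thermal_throttle_events: int


# Alert when a counter grows by at least this much between two samples.
# These values are placeholders; tune them for the environment.
THRESHOLDS = {
    "link_down_events": 1,
    "crc_errors": 100,
    "thermal_throttle_events": 1,
}


def alerts(prev: NicSample, curr: NicSample, thresholds: dict = THRESHOLDS) -> list:
    """Return one message per counter whose increase meets its threshold."""
    messages = []
    for field, limit in thresholds.items():
        delta = getattr(curr, field) - getattr(prev, field)
        if delta >= limit:
            messages.append(f"{field}: +{delta} since last sample (threshold {limit})")
    return messages


if __name__ == "__main__":
    before = NicSample(link_down_events=3, crc_errors=10, thermal_throttle_events=0)
    after = NicSample(link_down_events=5, crc_errors=15, thermal_throttle_events=0)
    for message in alerts(before, after):
        print(message)
```

A counter reset (for example after a driver reload) produces a negative delta and is silently ignored here; a production collector would want to handle that case explicitly.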